Configuring the Flink SQL Client to Query Hive, with Troubleshooting

Author: 快盘下载

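Preface

This post records how I configured Flink SQL to query Hive for the first time, and how I resolved the problems I ran into.

I have not used Flink before and have never written Flink code, so I am a complete beginner. I am using it now because I need to test some DolphinScheduler features, including the Flink data source, for which we use the Kyuubi Flink Engine. Learning Flink should also help with learning Hudi and Kyuubi.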

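Versions

flink-1.14.3; this is the Flink bundled with Kyuubi 1.5.2

Environment

An HDP environment; Hadoop, Hive, and related components are already installed and configured

Configuration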

HADOOP_CLASSPATH

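export HADOOP_CLASSPATH=$(hadoop classpath)

You can add this to /etc/profile, for example, which changes the environment globally. To make it apply only to Flink, add it to bin/config.sh instead.

At first I misread hadoop classpath and thought I was supposed to replace it by hand with the actual path, but the Hadoop JARs live in many directories. I later realized it means: run the command hadoop classpath and assign its output to HADOOP_CLASSPATH.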

hadoop classpath

/usr/hdp/3.1.0.0-78/hadoop/conf:/usr/hdp/3.1.0.0-78/hadoop/lib/*:/usr/hdp/3.1.0.0-78/hadoop/.//*:/usr/hdp/3.1.0.0-78/hadoop-hdfs/./:/usr/hdp/3.1.0.0-78/hadoop-hdfs/lib/*:/usr/hdp/3.1.0.0-78/hadoop-hdfs/.//*:/usr/hdp/3.1.0.0-78/hadoop-mapreduce/lib/*:/usr/hdp/3.1.0.0-78/hadoop-mapreduce/.//*:/usr/hdp/3.1.0.0-78/hadoop-yarn/./:/usr/hdp/3.1.0.0-78/hadoop-yarn/lib/*:/usr/hdp/3.1.0.0-78/hadoop-yarn/.//*:/usr/hdp/3.1.0.0-78/tez/*:/usr/hdp/3.1.0.0-78/tez/lib/*:/usr/hdp/3.1.0.0-78/tez/conf:/usr/hdp/3.1.0.0-78/tez/conf_llap:/usr/hdp/3.1.0.0-78/tez/doc:/usr/hdp/3.1.0.0-78/tez/hadoop-shim-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/hadoop-shim-2.8-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib:/usr/hdp/3.1.0.0-78/tez/man:/usr/hdp/3.1.0.0-78/tez/tez-api-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-common-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-dag-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-examples-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-history-parser-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-javadoc-tools-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-job-analyzer-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-mapreduce-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-protobuf-history-plugin-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-runtime-internals-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-runtime-library-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-tests-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-yarn-timeline-cache-plugin-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-yarn-timeline-history-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-yarn-timeline-history-with-acls-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/tez-yarn-timeline-history-with-fs-0.9.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/ui:/usr/hdp/3.1.0.0-78/tez/lib/async-http-client-1.9.40.jar:/usr/hdp/3.1.0.0-78/tez/lib/commons-cli-1.2.jar:/usr/hdp/3.1.0.0-78/tez/lib/commons-codec-1.4.jar:/usr/hdp/3.1.0.0-78/tez/lib/commons-collections-3.2.2.jar:/usr/hdp/3.1.0.0-78/tez/lib/commons-collections4-4.1.jar:/usr/hdp/3.1.0.0-78/tez/lib/commons-io-2.4.jar:/usr/hdp/3.1.0.0-78/tez/lib/commons-lang-2.6.jar:/usr/hdp/3.1.0.0-78/tez/lib/commons-math3-3.1.1.jar:/usr/hdp/3.1.0.0-78/tez/lib/gcs-connector-1.9.10.3.1.0.0-78-shaded.jar:/usr/hdp/3.1.0.0-78/tez/lib/guava-11.0.2.jar:/usr/hdp/3.1.0.0-78/tez/lib/hadoop-aws-3.1.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib/hadoop-azure-3.1.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib/hadoop-azure-datalake-3.1.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib/hadoop-hdfs-client-3.1.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib/hadoop-mapreduce-client-common-3.1.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib/hadoop-mapreduce-client-core-3.1.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib/hadoop-yarn-server-timeline-pluginstorage-3.1.1.3.1.0.0-78.jar:/usr/hdp/3.1.0.0-78/tez/lib/jersey-client-1.19.jar:/usr/hdp/3.1.0.0-78/tez/lib/jersey-json-1.19.jar:/usr/hdp/3.1.0.0-78/tez/lib/jettison-1.3.4.jar:/usr/hdp/3.1.0.0-78/tez/lib/jetty-server-9.3.22.v20171030.jar:/usr/hdp/3.1.0.0-78/tez/lib/jetty-util-9.3.22.v20171030.jar:/usr/hdp/3.1.0.0-78/tez/lib/jsr305-3.0.0.jar:/usr/hdp/3.1.0.0-78/tez/lib/metrics-core-3.1.0.jar:/usr/hdp/3.1.0.0-78/tez/lib/protobuf-java-2.5.0.jar:/usr/hdp/3.1.0.0-78/tez/lib/RoaringBitmap-0.4.9.jar:/usr/hdp/3.1.0.0-78/tez/lib/servlet-api-2.5.jar:/usr/hdp/3.1.0.0-78/tez/lib/slf4j-api-1.7.10.jar:/usr/hdp/3.1.0.0-78/tez/lib/tez.tar.gz
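The HADOOP_CLASSPATH setup described above can be wrapped in a small guard, so that a missing hadoop command is reported rather than silently exporting an empty value. This is only a minimal sketch: the function names set_hadoop_classpath and print_classpath_entries are made up for illustration and are not part of Hadoop or Flink.

```shell
#!/bin/sh
# Export HADOOP_CLASSPATH from the output of the `hadoop classpath` command.
# set_hadoop_classpath is a hypothetical helper name.
set_hadoop_classpath() {
    if ! command -v hadoop >/dev/null 2>&1; then
        echo "hadoop command not found on PATH" >&2
        return 1
    fi
    # The assignment fails (and we return 1) if the command itself fails.
    HADOOP_CLASSPATH=$(hadoop classpath) || return 1
    export HADOOP_CLASSPATH
}

# Print the colon-separated classpath entries one per line for inspection.
print_classpath_entries() {
    printf '%s\n' "$HADOOP_CLASSPATH" | tr ':' '\n'
}

# Usage (on a node where Hadoop is installed):
#   set_hadoop_classpath && print_classpath_entries | head
```

Listing the entries one per line makes it easier to confirm that the conf directory and the lib directories you expect are really included.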

JAR files

flink-connector-hive: download from https://repo1.maven.org/maven2/org/apache/flink/flink-connector-hive_2.12/1.14.3/ (the file is flink-connector-hive_2.12-1.14.3.jar)

hive-exec: copy it from $HIVE_HOME/lib: hive-exec-3.1.0.3.1.0.0-78.jar

libfb303: copy it from $HIVE_HOME/lib: libfb303-0.9.3.jar

Place these JARs in …
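Copying the JARs listed above can be scripted. The sketch below is hedged in one important way: the sentence above is cut off before it names the destination directory, so the usage example assumes Flink's lib directory, which is where the troubleshooting section later copies other JARs. The helper name copy_jars and the FLINK_HOME variable are illustrative assumptions.

```shell
#!/bin/sh
# Copy the named JARs from a source directory into a destination directory.
# copy_jars is a hypothetical helper name.
copy_jars() {
    src_dir=$1
    dest_dir=$2
    shift 2
    for jar in "$@"; do
        # Stop at the first JAR that does not exist in the source directory.
        if [ ! -f "$src_dir/$jar" ]; then
            echo "missing: $src_dir/$jar" >&2
            return 1
        fi
        cp "$src_dir/$jar" "$dest_dir/" || return 1
    done
}

# Usage with the versions named above. FLINK_HOME and HIVE_HOME are assumed
# to be set; the lib destination is an assumption, not stated in the text.
#   copy_jars "$HIVE_HOME/lib" "$FLINK_HOME/lib" \
#       hive-exec-3.1.0.3.1.0.0-78.jar libfb303-0.9.3.jar
```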

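Submission modes

Flink supports several submission modes, such as local, standalone, YARN, and Kubernetes. The SQL client defaults to standalone mode; choose whichever mode fits your needs.

standalone

This is the default mode and needs no configuration changes, but the standalone cluster must be started first with bin/start-cluster.sh.

To stop the standalone cluster: bin/stop-cluster.sh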

yarn-per-job

Edit flink-conf.yaml and add:

execution.target: yarn-per-job

yarn-session

Start the yarn-session

bin/yarn-session.sh -nm flink-sql -d

Related options:

bin/yarn-session.sh -h

Usage:

Optional

-at,--applicationType <arg> Set a custom application type for the application on YARN

-D <property=value> use value for given property

-d,--detached If present, runs the job in detached mode

-h,--help Help for the Yarn session CLI.

-id,--applicationId <arg> Attach to running YARN session

-j,--jar <arg> Path to Flink jar file

-jm,--jobManagerMemory <arg> Memory for JobManager Container with optional unit (default: MB)

-m,--jobmanager <arg> Set to yarn-cluster to use YARN execution mode.

-nl,--nodeLabel <arg> Specify YARN node label for the YARN application

-nm,--name <arg> Set a custom name for the application on YARN

-q,--query Display available YARN resources (memory, cores)

-qu,--queue <arg> Specify YARN queue.

-s,--slots <arg> Number of slots per TaskManager

-t,--ship <arg> Ship files in the specified directory (t for transfer)

-tm,--taskManagerMemory <arg> Memory per TaskManager Container with optional unit (default: MB)

-yd,--yarndetached If present, runs the job in detached mode (deprecated; use non-YARN specific option instead)

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper sub-paths for high availability mode

Stop the yarn-session

In order to stop Flink gracefully, use the following command:

$ echo "stop" | ./bin/yarn-session.sh -id application_1661394335976_0016

If this should not be possible, then you can also kill Flink via YARN's web interface or via:

$ yarn application -kill application_1661394335976_0016

Note that killing Flink might not clean up all job artifacts and temporary files.

Configure flink-conf.yaml

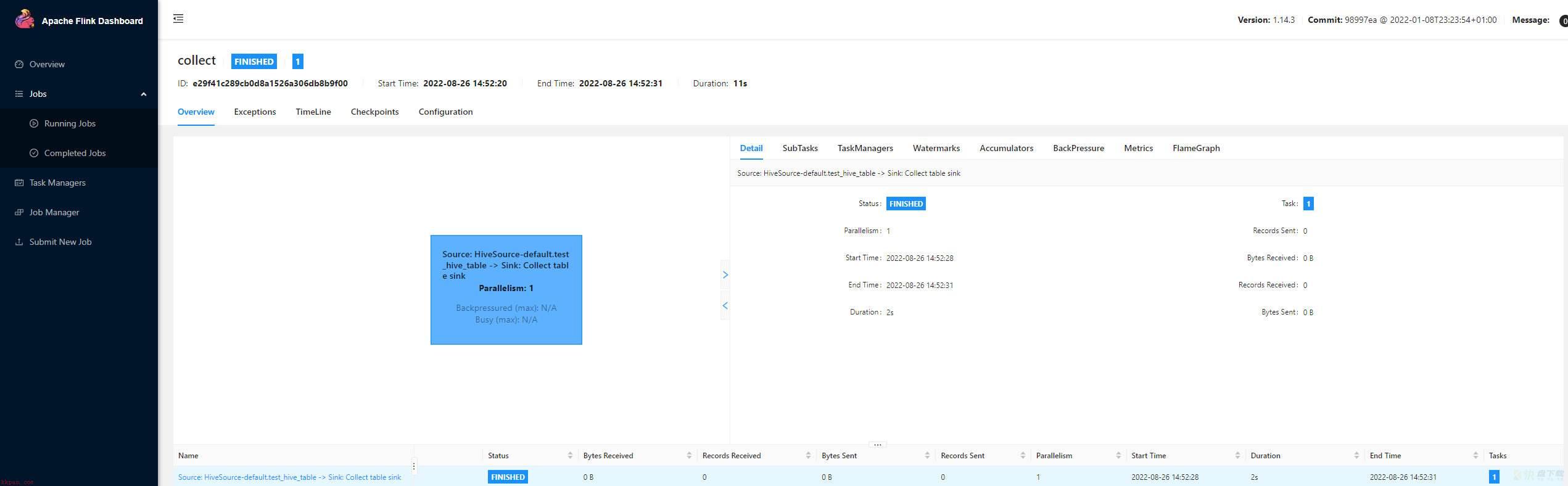

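Once the yarn-session has started successfully, it appears in the YARN UI (click ApplicationMaster under Tracking UI to open the Flink web UI).

Besides setting execution.target to yarn-session, you also need to add yarn.application.id, whose value is the YARN application ID of the session.

execution.target: yarn-session

yarn.application.id: application_1661394335976_0013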
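Editing flink-conf.yaml by hand works, but the two keys above can also be set from a script. The following is a minimal sketch that assumes flat "key: value" lines; set_flink_conf is a hypothetical helper name, not a tool that ships with Flink.

```shell
#!/bin/sh
# Set "key: value" in a flink-conf.yaml style file. Any existing uncommented
# line for the key is removed, then the new line is appended at the end.
# set_flink_conf is a hypothetical helper name.
set_flink_conf() {
    conf_file=$1
    key=$2
    value=$3
    [ -f "$conf_file" ] || { echo "no such file: $conf_file" >&2; return 1; }
    tmp_file="$conf_file.tmp.$$"
    # Keep every line that does not start with exactly "key:".
    awk -v k="$key" 'index($0, k ":") != 1' "$conf_file" > "$tmp_file" || return 1
    printf '%s: %s\n' "$key" "$value" >> "$tmp_file"
    mv "$tmp_file" "$conf_file"
}

# Usage with the values shown above:
#   set_flink_conf conf/flink-conf.yaml execution.target yarn-session
#   set_flink_conf conf/flink-conf.yaml yarn.application.id application_1661394335976_0013
```

Because the old line is dropped before the new one is appended, running the helper repeatedly leaves a single entry per key.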

Start the SQL client

bin/sql-client.sh

Setting HBASE_CONF_DIR=/etc/hbase/conf because no HBASE_CONF_DIR was set.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hdp/kyuubi-1.5.2/externals/flink-1.14.3/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.0.0-78/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Command history file path: /root/.flink-sql-history

[ASCII-art banner: Flink squirrel logo and the text "Flink SQL Client", marked BETA]

Welcome! Enter 'HELP;' to list all available commands. 'QUIT;' to exit.

Flink SQL>

Create the Hive catalog

CREATE CATALOG hive_catalog WITH (

'type' = 'hive',

'default-database' = 'default',

'hive-conf-dir' = '/usr/hdp/3.1.0.0-78/hive/conf'

);

Flink SQL> show catalogs;
+-----------------+
|    catalog name |
+-----------------+
| default_catalog |
|    hive_catalog |
+-----------------+
2 rows in set

Switch catalog

use catalog hive_catalog;

Query a Hive table
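As far as I know, a catalog created this way lasts only for the current SQL client session, so the two statements above have to be run again each time. One option is to keep them in an initialization file and pass it with the SQL client's -i option (available in Flink 1.13 and later). The helper name write_hive_init_sql and the file name init.sql are illustrative assumptions.

```shell
#!/bin/sh
# Write an initialization script that creates the Hive catalog and switches
# to it. write_hive_init_sql is a hypothetical helper name.
write_hive_init_sql() {
    out_file=$1
    hive_conf_dir=$2
    cat > "$out_file" <<EOF
CREATE CATALOG hive_catalog WITH (
  'type' = 'hive',
  'default-database' = 'default',
  'hive-conf-dir' = '$hive_conf_dir'
);
USE CATALOG hive_catalog;
EOF
}

# Usage:
#   write_hive_init_sql init.sql /usr/hdp/3.1.0.0-78/hive/conf
#   bin/sql-client.sh -i init.sql
```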

Flink SQL> show tables;
+--------------------------+
|               table name |
+--------------------------+
|                      tba |
|                      tbb |
|                     test |
|                    test1 |
|                   test_2 |
|          test_hive_table |
|        test_hudi_table_1 |
|        test_hudi_table_2 |
|        test_hudi_table_3 |
|        test_hudi_table_4 |
| test_hudi_table_cow_ctas |
|   test_hudi_table_kyuubi |
|    test_hudi_table_merge |
|             test_no_hudi |
|           test_no_hudi_2 |
+--------------------------+
15 rows in set

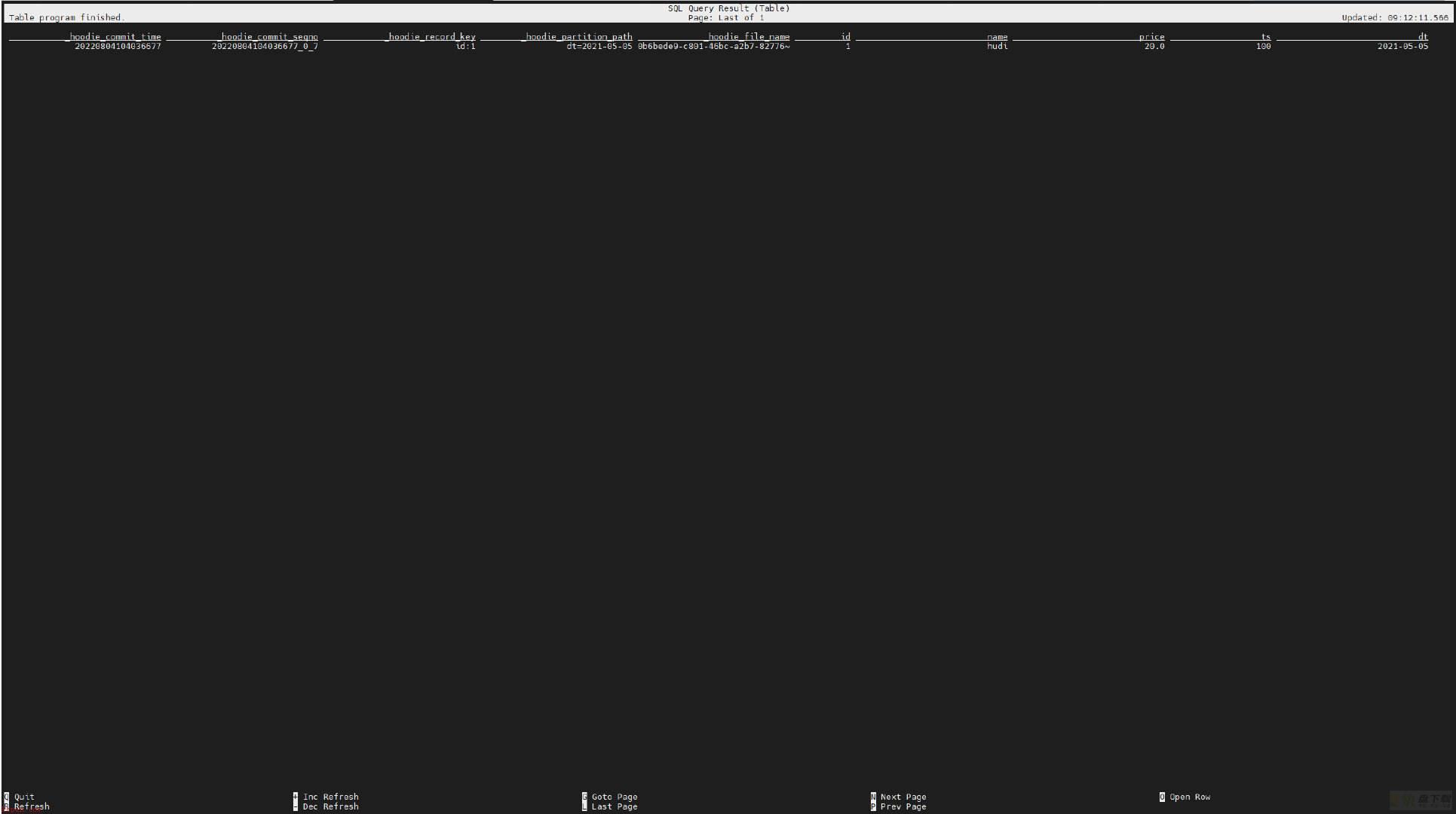

select * from test_hive_table;

2022-08-26 14:52:15,656 WARN org.apache.hadoop.hdfs.shortcircuit.DomainSocketFactory [] - The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.

2022-08-26 14:52:16,021 INFO org.apache.hadoop.hdfs.DFSClient [] - Created token for spark: HDFS_DELEGATION_TOKEN owner=spark/indata-10-110-105-164.indata.com@INDATA.COM, renewer=yarn, realUser=, issueDate=1661496735963, maxDate=1662101535963, sequenceNumber=8068, masterKeyId=85 on ha-hdfs:cluster1

2022-08-26 14:52:16,558 INFO org.apache.hadoop.crypto.key.kms.KMSClientProvider [] - New token created: (Kind: kms-dt, Service: kms://http@indata-10-110-105-163.indata.com:9292/kms, Ident: (kms-dt owner=spark, renewer=yarn, realUser=, issueDate=1661496736233, maxDate=1662101536233, sequenceNumber=1275, masterKeyId=12))

2022-08-26 14:52:16,665 INFO org.apache.hadoop.mapreduce.security.TokenCache [] - Got dt for hdfs://cluster1; Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:cluster1, Ident: (token for spark: HDFS_DELEGATION_TOKEN owner=spark/indata-10-110-105-164.indata.com@INDATA.COM, renewer=yarn, realUser=, issueDate=1661496735963, maxDate=1662101535963, sequenceNumber=8068, masterKeyId=85)

2022-08-26 14:52:16,666 INFO org.apache.hadoop.mapreduce.security.TokenCache [] - Got dt for hdfs://cluster1; Kind: kms-dt, Service: kms://http@indata-10-110-105-162.indata.com;indata-10-110-105-163.indata.com:9292/kms, Ident: (kms-dt owner=spark, renewer=yarn, realUser=, issueDate=1661496736233, maxDate=1662101536233, sequenceNumber=1275, masterKeyId=12)

2022-08-26 14:52:16,704 INFO org.apache.hadoop.mapred.FileInputFormat [] - Total input files to process : 1

2022-08-26 14:52:17,593 WARN org.apache.flink.yarn.configuration.YarnLogConfigUtil [] - The configuration directory ('/opt/hdp/kyuubi-1.5.2/externals/flink-1.14.3/conf') already contains a LOG4J config file.If you want to use logback, then please delete or rename the log configuration file.

2022-08-26 14:52:17,806 INFO org.apache.flink.yarn.YarnClusterDescriptor [] - No path for the flink jar passed. Using the location of class org.apache.flink.yarn.YarnClusterDescriptor to locate the jar

2022-08-26 14:52:17,808 WARN org.apache.flink.yarn.YarnClusterDescriptor [] - Neither the HADOOP_CONF_DIR nor the YARN_CONF_DIR environment variable is set.The Flink YARN Client needs one of these to be set to properly load the Hadoop configuration for accessing YARN.

2022-08-26 14:52:17,818 INFO org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider [] - Failing over to rm2

2022-08-26 14:52:17,883 INFO org.apache.flink.yarn.YarnClusterDescriptor [] - Found Web Interface indata-10-110-105-162.indata.com:41651 of application 'application_1661394335976_0016'.

[INFO] Result retrieval cancelled.

In yarn-session mode, the corresponding job information is visible in the Flink web UI:

Create a Hive table

Switch to the Hive dialect first:

set table.sql-dialect = hive;

CREATE TABLE test_hive_table_flink(id int,name string);

insert

insert into test_hive_table_flink values(1,'flink');

select * from test_hive_table_flink;

id name

1 flink

View the table structure in Hive

show create table test_hive_table_flink;
+----------------------------------------------------+
|                   createtab_stmt                   |
+----------------------------------------------------+
| CREATE TABLE `test_hive_table_flink`(              |
| `id` int,                                          |
| `name` string)                                     |
| ROW FORMAT SERDE                                   |
| 'org.apache.hadoop.hive.ql.io.orc.OrcSerde'        |
| STORED AS INPUTFORMAT                              |
| 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'  |
| OUTPUTFORMAT                                       |
| 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat' |
| LOCATION                                           |
| 'hdfs://cluster1/warehouse/tablespace/managed/hive/test_hive_table_flink' |
| TBLPROPERTIES (                                    |
| 'bucketing_version'='2',                           |
| 'transient_lastDdlTime'='1661499551')              |
+----------------------------------------------------+

Drop the table

drop table test_hive_table_flink;

Troubleshooting

A record of the problems I ran into and how I resolved them.

Missing Hadoop JARs

When creating the Hive catalog:

[ERROR] Could not execute SQL statement. Reason: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FSDataInputStream

Solution: export HADOOP_CLASSPATH=$(hadoop classpath)

Missing Hive JARs

[ERROR] Could not execute SQL statement. Reason: java.lang.ClassNotFoundException: org.apache.hive.common.util.HiveVersionInfo

Solution: hive-exec: copy it from $HIVE_HOME/lib: hive-exec-3.1.0.3.1.0.0-78.jar

[ERROR] Could not execute SQL statement. Reason: org.apache.flink.table.catalog.exceptions.CatalogException: Failed to create Hive Metastore client

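The SQL client does not show the details of this exception, so it is not clear which JAR is missing.

Solution: check the log at log/flink-root-sql-client-*.log and find the corresponding exception: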

Caused by: org.apache.flink.table.catalog.exceptions.CatalogException: Failed to create Hive Metastore client

at org.apache.flink.table.catalog.hive.client.HiveShimV310.getHiveMetastoreClient(HiveShimV310.java:114) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.createMetastoreClient(HiveMetastoreClientWrapper.java:277) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.<init>(HiveMetastoreClientWrapper.java:78) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.<init>(HiveMetastoreClientWrapper.java:68) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientFactory.create(HiveMetastoreClientFactory.java:32) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.HiveCatalog.open(HiveCatalog.java:296) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:195) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1297) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1122) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeOperation$3(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.context.ExecutionContext.wrapClassLoader(ExecutionContext.java:88) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

... 11 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_181]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_181]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_181]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_181]

at org.apache.flink.table.catalog.hive.client.HiveShimV310.getHiveMetastoreClient(HiveShimV310.java:112) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.createMetastoreClient(HiveMetastoreClientWrapper.java:277) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.<init>(HiveMetastoreClientWrapper.java:78) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.<init>(HiveMetastoreClientWrapper.java:68) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientFactory.create(HiveMetastoreClientFactory.java:32) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.hive.HiveCatalog.open(HiveCatalog.java:296) ~[flink-connector-hive_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:195) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1297) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1122) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeOperation$3(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.context.ExecutionContext.wrapClassLoader(ExecutionContext.java:88) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

... 11 more

Caused by: java.lang.NoClassDefFoundError: com/facebook/fb303/FacebookService$Iface

Search for the JAR that contains com/facebook/fb303/FacebookService$Iface:

libfb303: copy it from $HIVE_HOME/lib: libfb303-0.9.3.jar. Reference: https://blog.csdn.net/skdtr/article/details/123513628
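The two manual steps in this section, finding the root cause in the client log and then locating the JAR that contains the missing class, can be sketched as shell helpers. Both function names are hypothetical, and find_class_in_jars assumes the unzip command is installed.

```shell
#!/bin/sh
# Print the last "Caused by:" line in a log file. In a Java stack trace the
# last cause in the chain is the innermost one.
last_caused_by() {
    grep 'Caused by:' "$1" | tail -n 1
}

# Print every JAR in a directory whose file listing contains the given class
# path, e.g. 'com/facebook/fb303/FacebookService$Iface.class'.
# Returns non-zero when no JAR matches. Assumes unzip is available.
find_class_in_jars() {
    class_path=$1
    jar_dir=$2
    found_status=1
    for jar in "$jar_dir"/*.jar; do
        # Skip the unexpanded pattern when the directory has no JARs.
        [ -f "$jar" ] || continue
        if unzip -l "$jar" 2>/dev/null | grep -qF "$class_path"; then
            echo "$jar"
            found_status=0
        fi
    done
    return "$found_status"
}

# Usage:
#   last_caused_by log/flink-root-sql-client-*.log
#   find_class_in_jars 'com/facebook/fb303/FacebookService$Iface.class' "$HIVE_HOME/lib"
```

Note the single quotes around the class path: they keep the shell from treating $Iface as a variable.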

yarn-session without an applicationId configured

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.table.api.TableException: Failed to execute sql

org.apache.flink.table.client.gateway.SqlExecutionException: Could not execute SQL statement.

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:211) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeQuery(LocalExecutor.java:231) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.cli.CliClient.callSelect(CliClient.java:532) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.cli.CliClient.callOperation(CliClient.java:423) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.cli.CliClient.lambda$executeStatement$1(CliClient.java:332) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

at java.util.Optional.ifPresent(Optional.java:159) ~[?:1.8.0_181]

at org.apache.flink.table.client.cli.CliClient.executeStatement(CliClient.java:325) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.cli.CliClient.executeInteractive(CliClient.java:297) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.cli.CliClient.executeInInteractiveMode(CliClient.java:221) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.SqlClient.openCli(SqlClient.java:151) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:95) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.SqlClient.startClient(SqlClient.java:187) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:161) [flink-sql-client_2.12-1.14.3.jar:1.14.3]

Caused by: org.apache.flink.table.api.TableException: Failed to execute sql

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeQueryOperation(TableEnvironmentImpl.java:828) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1274) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeOperation$3(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.context.ExecutionContext.wrapClassLoader(ExecutionContext.java:88) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

... 12 more

Caused by: java.lang.IllegalStateException

at org.apache.flink.util.Preconditions.checkState(Preconditions.java:177) ~[flink-dist_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.client.deployment.executors.AbstractSessionClusterExecutor.execute(AbstractSessionClusterExecutor.java:72) ~[flink-dist_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.executeAsync(StreamExecutionEnvironment.java:2042) ~[flink-dist_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.planner.delegation.DefaultExecutor.executeAsync(DefaultExecutor.java:95) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeQueryOperation(TableEnvironmentImpl.java:811) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1274) ~[flink-table_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeOperation$3(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.context.ExecutionContext.wrapClassLoader(ExecutionContext.java:88) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:209) ~[flink-sql-client_2.12-1.14.3.jar:1.14.3]

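... 12 more

The exception message does not reveal the cause. After looking up material on yarn-session, I found that this setting was missing:

yarn.application.id: application_1661394335976_0013

yarn-session missing the MapReduce JAR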

[ERROR] Could not execute SQL statement. Reason: java.lang.ClassNotFoundException: org.apache.hadoop.mapred.JobConf

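The symptom is that the yarn-session starts successfully, but executing SQL fails with the error above. At first I did not suspect the yarn-session service, because in the other modes queries ran fine once HADOOP_CLASSPATH was set; only later did I wonder whether the yarn-session was involved.

Solution:

Copy hadoop-mapreduce-client-core-3.1.1.3.1.0.0-78.jar into the flink/lib directory and restart the yarn-session. Note that after restarting the yarn-session, the value of yarn.application.id must be updated to match. After that, the SQL ran without problems.

Missing antlr-runtime JAR

This occurs when creating a table with the Hive dialect: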
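Since the application ID changes on every restart, it can help to look it up by name instead of copying it by hand. The sketch below filters the output of yarn application -list for the session name flink-sql used when starting the yarn-session earlier. It assumes the listing is tab-separated with the ID in the first column and the name in the second, which should be checked against your Hadoop version; app_id_by_name is a hypothetical helper name.

```shell
#!/bin/sh
# Read `yarn application -list` output on stdin and print the ID of the first
# application whose name equals $1.
# Assumption: columns are tab-separated, ID first and name second, and may be
# padded with spaces. app_id_by_name is a hypothetical helper name.
app_id_by_name() {
    awk -F '\t' -v name="$1" '
        {
            id = $1
            app = $2
            # Strip the space padding around both fields.
            gsub(/^[ ]+|[ ]+$/, "", id)
            gsub(/^[ ]+|[ ]+$/, "", app)
            if (id ~ /^application_/ && app == name) {
                print id
                exit
            }
        }'
}

# Usage:
#   app_id=$(yarn application -list 2>/dev/null | app_id_by_name flink-sql)
#   echo "yarn.application.id: $app_id"
```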

Exception in thread "main" org.apache.flink.table.client.SqlClientException: Unexpected exception. This is a bug. Please consider filing an issue.

at org.apache.flink.table.client.SqlClient.startClient(SqlClient.java:201)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:161)

Caused by: java.lang.NoClassDefFoundError: org/antlr/runtime/tree/CommonTree

at org.apache.flink.table.planner.delegation.hive.HiveParser.processCmd(HiveParser.java:219)

at org.apache.flink.table.planner.delegation.hive.HiveParser.parse(HiveParser.java:208)

at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$parseStatement$1(LocalExecutor.java:172)

at org.apache.flink.table.client.gateway.context.ExecutionContext.wrapClassLoader(ExecutionContext.java:88)

at org.apache.flink.table.client.gateway.local.LocalExecutor.parseStatement(LocalExecutor.java:172)

at org.apache.flink.table.client.cli.CliClient.parseCommand(CliClient.java:396)

at org.apache.flink.table.client.cli.CliClient.executeStatement(CliClient.java:324)

at org.apache.flink.table.client.cli.CliClient.executeInteractive(CliClient.java:297)

at org.apache.flink.table.client.cli.CliClient.executeInInteractiveMode(CliClient.java:221)

at org.apache.flink.table.client.SqlClient.openCli(SqlClient.java:151)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:95)

at org.apache.flink.table.client.SqlClient.startClient(SqlClient.java:187)

... 1 more

Caused by: java.lang.ClassNotFoundException: org.antlr.runtime.tree.CommonTree

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 13 more

Solution: copy antlr-runtime-3.5.2.jar from $HIVE_HOME/lib to flink/lib
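Most of the errors in this section came down to a JAR missing from Flink's classpath, so a quick check before starting the SQL client can save some time. This is a minimal sketch that matches JARs by file-name prefix; check_lib_jars is a hypothetical helper name, and FLINK_HOME is assumed to point at the Flink installation.

```shell
#!/bin/sh
# Report which JAR name prefixes have no matching file in a lib directory.
# Returns non-zero if any prefix is missing.
# check_lib_jars is a hypothetical helper name.
check_lib_jars() {
    lib_dir=$1
    shift
    missing=0
    for prefix in "$@"; do
        found=0
        # Look for at least one regular file named <prefix>*.jar.
        for f in "$lib_dir/$prefix"*.jar; do
            if [ -f "$f" ]; then
                found=1
                break
            fi
        done
        if [ "$found" -eq 0 ]; then
            echo "missing: $prefix*.jar" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage with the JARs mentioned in this post:
#   check_lib_jars "$FLINK_HOME/lib" flink-connector-hive hive-exec libfb303 \
#       hadoop-mapreduce-client-core antlr-runtime
```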
