Installing OpenStack Rocky on CentOS 7, with basic OpenStack usage

Author: 快盘下载

Environment

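Hostnames and IP addresses of the one controller node and two compute nodes:

OS version: CentOS 7.9

| Hostname | Role | IP address |
| --- | --- | --- |
| manager | controller | 192.168.80.143 |
| master | compute | 192.168.80.144 |
| worker | compute | 192.168.80.145 |

Set the hostname

```shell
# Controller node
hostnamectl set-hostname manager.node
# Compute node 1
hostnamectl set-hostname master.node
# Compute node 2
hostnamectl set-hostname worker.node
```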

Verify

hostnamectl

Configure hostname-to-IP mapping

Edit the /etc/hosts file:

```shell
cat >> /etc/hosts <<EOF
192.168.80.143 manager.node
192.168.80.144 master.node
192.168.80.145 worker.node
EOF
```
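Re-running the `cat >> /etc/hosts` heredoc appends the same three lines again. As a hedged alternative, here is a minimal bash sketch that only adds a mapping when the hostname is not already present on a non-comment line; `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, and the file path is a parameter so you can try it on a scratch copy first.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the
# hostname is already mapped on a non-comment line.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    if [ -f "$file" ] && awk -v n="$name" '
        /^[[:space:]]*#/ { next }                              # skip comments
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }   # alias fields
        END { exit !found }' "$file"; then
        return 0                                               # already mapped
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Hypothetical wrapper for the three nodes used in this guide.
add_cluster_hosts() {
    local file=${1:-/etc/hosts}
    add_host_entry "$file" 192.168.80.143 manager.node
    add_host_entry "$file" 192.168.80.144 master.node
    add_host_entry "$file" 192.168.80.145 worker.node
}
```

For example, `add_cluster_hosts /etc/hosts` can be run on each of the three nodes; running it twice leaves the file unchanged the second time.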

DNS resolution

```shell
cat >> /etc/resolv.conf <<EOF
nameserver 8.8.8.8
nameserver 8.8.4.4
EOF
```

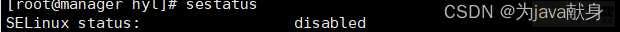

Disable the firewall and SELinux

```shell
systemctl stop firewalld
systemctl disable firewalld.service
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
```
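The `sed` command above only rewrites a line that literally reads `SELINUX=enforcing`, so a file already set to `permissive` stays as it is. A minimal bash sketch that forces the mode regardless of its current value, anchored at the start of the line so `SELINUXTYPE=` and comments are untouched; `set_selinux_mode` is a hypothetical helper. Keep in mind that `setenforce 0` switches the running system to permissive mode, whereas the config file change only takes effect at the next boot.

```shell
# Hypothetical helper: force SELINUX=<mode> in an SELinux config file,
# whatever the current value is.
set_selinux_mode() {
    local file=$1 mode=$2
    case $mode in
        enforcing|permissive|disabled) ;;
        *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
    esac
    # "^SELINUX=" does not match "SELINUXTYPE=" or commented-out lines.
    sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}
```

Usage on the real host: `set_selinux_mode /etc/selinux/config disabled`.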

Check the SELinux status with sestatus.

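Time synchronization

Passwordless SSH login from manager to all nodes

Run on the manager node only:

```shell
# Just press Enter at every prompt
ssh-keygen -t rsa
```

Copy the public key to each node:

```shell
ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.80.143
ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.80.144
ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.80.145
```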

Configure the OpenStack Rocky yum repository file

The official guide installs centos-release-openstack-rocky with yum, which pulls from overseas mirrors and can be slow, so the Aliyun mirror is configured manually here.

```shell
cat <<EOF > /etc/yum.repos.d/openstack.repo
[openstack-rocky]
name=openstack-rocky
baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-rocky/
enabled=1
gpgcheck=0

[qemu-kvm]
name=qemu-kvm
baseurl=https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/
enabled=1
gpgcheck=0
EOF
```

You can create this file on the manager node and then copy it to all other nodes:

```shell
# Send to compute node 1
scp /etc/yum.repos.d/openstack.repo master.node:/etc/yum.repos.d/
# Send to compute node 2
scp /etc/yum.repos.d/openstack.repo worker.node:/etc/yum.repos.d/
```

Install centos-release-openstack-rocky

This step requires the CentOS-Base.repo repository that ships with CentOS.

yum install centos-release-openstack-rocky

Install the OpenStack client

```shell
yum install -y python-openstackclient
yum install -y openstack-selinux
```

Install the MariaDB database

Only needed on the manager node.

```shell
yum install -y mariadb mariadb-server python2-PyMySQL
```

```shell
cat <<EOF > /etc/my.cnf.d/openstack.cnf
[mysqld]
bind-address = 192.168.80.143
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF
```

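Start the database

```shell
systemctl start mariadb
systemctl status mariadb
systemctl enable mariadb
```

Set the database password

```shell
# I set the password to 123456; answer the other prompts as appropriate
mysql_secure_installation
```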

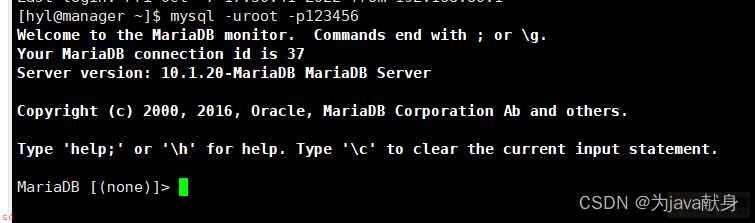

Verify

Install the RabbitMQ message queue

Run on the manager node.

yum -y install rabbitmq-server

Start the message queue service

```shell
systemctl start rabbitmq-server
systemctl status rabbitmq-server
systemctl enable rabbitmq-server
```

Add the openstack user and grant it full permissions

```shell
rabbitmqctl add_user openstack 123456
# Set permissions (configure, write, read)
rabbitmqctl set_permissions openstack ".*" ".*" ".*"
rabbitmqctl set_permissions -p "/" openstack ".*" ".*" ".*"
```

Enable the web management plugin

```
[root@manager hyl]# rabbitmq-plugins list
 Configured: E = explicitly enabled; e = implicitly enabled
 | Status:   * = running on rabbit@manager
 |/
[e*] amqp_client                       3.6.16
[e*] cowboy                            1.0.4
[e*] cowlib                            1.0.2
[  ] rabbitmq_amqp1_0                  3.6.16
[  ] rabbitmq_auth_backend_ldap        3.6.16
[  ] rabbitmq_auth_mechanism_ssl       3.6.16
[  ] rabbitmq_consistent_hash_exchange 3.6.16
[  ] rabbitmq_event_exchange           3.6.16
[  ] rabbitmq_federation               3.6.16
[  ] rabbitmq_federation_management    3.6.16
[  ] rabbitmq_jms_topic_exchange       3.6.16
[E*] rabbitmq_management               3.6.16
[e*] rabbitmq_management_agent         3.6.16
[  ] rabbitmq_management_visualiser    3.6.16
[  ] rabbitmq_mqtt                     3.6.16
[  ] rabbitmq_random_exchange          3.6.16
[  ] rabbitmq_recent_history_exchange  3.6.16
[  ] rabbitmq_sharding                 3.6.16
[  ] rabbitmq_shovel                   3.6.16
[  ] rabbitmq_shovel_management        3.6.16
[  ] rabbitmq_stomp                    3.6.16
[  ] rabbitmq_top                      3.6.16
[  ] rabbitmq_tracing                  3.6.16
[  ] rabbitmq_trust_store              3.6.16
[e*] rabbitmq_web_dispatch             3.6.16
[  ] rabbitmq_web_mqtt                 3.6.16
[  ] rabbitmq_web_mqtt_examples        3.6.16
[  ] rabbitmq_web_stomp                3.6.16
[  ] rabbitmq_web_stomp_examples       3.6.16
[  ] sockjs                            0.3.4
```

rabbitmq-plugins enable rabbitmq_management

systemctl restart rabbitmq-server.service

rabbitmq-plugins list

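Open http://192.168.80.143:15672/ in a browser.

Install the memcached caching service

Run on the manager node.

```shell
yum -y install memcached
yum -y install python-memcached
```

Edit the configuration file and add the management node's hostname to the OPTIONS line at the end:

```
[root@manager sysconfig]# vim /etc/sysconfig/memcached
PORT="11211"
USER="memcached"
MAXCONN="1024"
CACHESIZE="64"
OPTIONS="-l 127.0.0.1,::1,manager.node"
```

Start the service and enable it at boot

```shell
systemctl start memcached
systemctl status memcached
systemctl enable memcached
```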

Install the etcd service

Run on the manager node.

```shell
yum -y install etcd
vim /etc/etcd/etcd.conf
```

```
#[Member]
#ETCD_CORS=""
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
ETCD_LISTEN_PEER_URLS="http://192.168.80.143:2380"
ETCD_LISTEN_CLIENT_URLS="http://192.168.80.143:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
ETCD_NAME="manager.node"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.80.143:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.80.143:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
ETCD_INITIAL_CLUSTER="manager.node=http://192.168.80.143:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_STRICT_RECONFIG_CHECK="true"
ETCD_ENABLE_V2="true"
```

Start the service and enable it at boot

```shell
systemctl start etcd
systemctl status etcd
systemctl enable etcd
```

Install the Keystone identity service

All of the following steps run on the manager node only.

```shell
mysql -u root -p123456
```

```sql
-- Create the database
CREATE DATABASE keystone;
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '123456';
flush privileges;
```
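The same CREATE/GRANT pattern repeats below for glance, nova_api, nova, nova_cell0, placement and neutron. A minimal bash sketch that prints those statements for any database/user/password triple, assuming the MariaDB setup above; `service_db_sql` is a hypothetical helper, and `IF NOT EXISTS` is an addition so that rerunning it is harmless.

```shell
# Hypothetical helper: print the CREATE/GRANT statements used for each
# service database in this guide. Pipe the output into the mysql client.
service_db_sql() {
    local db=$1 user=$2 pass=$3
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
FLUSH PRIVILEGES;
EOF
}

# Usage sketch:
#   service_db_sql keystone keystone 123456 | mysql -u root -p123456
```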

Install the packages

```shell
yum install openstack-keystone httpd mod_wsgi
yum install openstack-utils   # provides openstack-config for quick config-file edits
```

Edit the Keystone configuration file

Go to /etc/keystone and edit keystone.conf.

Two settings need to change:

```shell
# Quick edit
openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:123456@manager.node/keystone
openstack-config --set /etc/keystone/keystone.conf token provider fernet
# Review the changes
egrep -v '^#|^$' /etc/keystone/keystone.conf
```
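`egrep -v` shows every active line but not which section a key ended up in. A dependency-free bash/awk sketch for reading one key back from a given section; `ini_get` is a hypothetical name, and it assumes plain `key = value` lines without multi-line values.

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI file.
# Returns non-zero if the key is not set in that section.
ini_get() {
    local file=$1 section=$2 key=$3
    awk -v s="[$section]" -v k="$key" '
        /^[[:space:]]*\[/ { in_sec = ($0 == s); next }   # track current section
        in_sec && /^[[:space:]]*[^#;]/ {                 # skip comment lines
            split($0, kv, "=")
            name = kv[1]; gsub(/[[:space:]]/, "", name)
            if (name == k) {
                val = substr($0, index($0, "=") + 1)
                sub(/^[[:space:]]+/, "", val); sub(/[[:space:]]+$/, "", val)
                print val; found = 1; exit
            }
        }
        END { exit !found }' "$file"
}
```

For example, `ini_get /etc/keystone/keystone.conf token provider` should print `fernet` after the edit above.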

Synchronize the database

```shell
su -s /bin/sh -c "keystone-manage db_sync" keystone
```

Check the result in the database: 44 tables in total.

```
MariaDB [keystone]> use keystone;
Database changed
MariaDB [keystone]> show tables;
+-----------------------------+
| Tables_in_keystone          |
+-----------------------------+
| access_token                |
| application_credential      |
| application_credential_role |
.....
| user                        |
| user_group_membership       |
| user_option                 |
| whitelisted_config          |
+-----------------------------+
44 rows in set (0.00 sec)
```
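The table counts in this guide (44 for keystone, 15 for glance, 32 for nova_api and placement, 110 for nova and nova_cell0) are what the author observed with `show tables`; other package builds may differ. A hedged bash sketch for checking a count without an interactive session; `count_tables` and `check_tables` are hypothetical, and `MYSQL` and `DB_ROOT_PASS` are assumed override variables (the default password is the one used in this guide).

```shell
# Hypothetical helper: count the tables in DB using the mysql client in
# batch mode (-N: no column header, -B: tab-separated output).
count_tables() {
    local db=$1
    ${MYSQL:-mysql} -u root -p"${DB_ROOT_PASS:-123456}" -N -B -e 'SHOW TABLES' "$db" | wc -l
}

# Hypothetical check: fail with a message when the count differs.
check_tables() {
    local db=$1 want=$2 got
    got=$(( $(count_tables "$db") ))
    if [ "$got" -ne "$want" ]; then
        echo "$db: expected $want tables, found $got" >&2
        return 1
    fi
}

# Usage sketch: check_tables keystone 44
```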

Initialize the Fernet key repositories

```shell
keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
```

Bootstrap the Identity service

```shell
# --bootstrap-password ks123456: choose your own admin password
# --bootstrap-admin-url http://manager.node:5000/v3/: replace with your host's domain name
# --bootstrap-internal-url http://manager.node:5000/v3/
# --bootstrap-public-url http://manager.node:5000/v3/
# --bootstrap-region-id RegionOne
keystone-manage bootstrap --bootstrap-password ks123456 \
  --bootstrap-admin-url http://manager.node:5000/v3/ \
  --bootstrap-internal-url http://manager.node:5000/v3/ \
  --bootstrap-public-url http://manager.node:5000/v3/ \
  --bootstrap-region-id RegionOne
```

Configure the httpd service

Go to /etc/httpd/conf and edit httpd.conf.

```shell
# Open the file at line 95
vim /etc/httpd/conf/httpd.conf +95
```

Change that line to:

```
ServerName manager.node
```

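Check

```shell
cat /etc/httpd/conf/httpd.conf |grep ServerName
```

After saving and exiting, create the file link:

```shell
ll /usr/share/keystone/
ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/
ll /etc/httpd/conf.d/
```

Start the httpd service

```shell
systemctl start httpd
systemctl enable httpd
```

Write the environment-variable script admin-openrc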

touch admin-openrc.sh

vim admin-openrc.sh

```shell
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=ks123456
export OS_AUTH_URL=http://manager.node:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
```

```shell
source admin-openrc.sh
openstack token issue
```
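When `openstack token issue` fails, a common cause is that the script was run instead of sourced, or a variable is misspelled. A minimal sketch that lists which of the variables from admin-openrc.sh are unset or empty in the current shell; `check_openrc_env` is a hypothetical helper, and it uses `eval` for indirect lookup so it stays close to POSIX sh.

```shell
# Hypothetical helper: report OS_* variables from admin-openrc.sh that are
# unset or empty in the current shell; returns non-zero if any are missing.
check_openrc_env() {
    local var val missing=0
    for var in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
               OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        eval "val=\${$var:-}"          # indirect lookup of the variable named by $var
        if [ -z "$val" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Typical use: `source admin-openrc.sh && check_openrc_env && openstack token issue`.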

Create the service project

```shell
openstack project create --domain default --description "Service Project" service
```

Verify

```shell
openstack user list
openstack token issue
```

Install Glance

Create the database

```shell
mysql -uroot -p
```

```sql
CREATE DATABASE glance;
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '123456';
flush privileges;
```

Register Glance in Keystone

```shell
# Adjust the path to your own
source /home/hyl/admin-openrc.sh
openstack user create --domain default --password-prompt glance
# Password of my choice: gl123456
```

In Keystone, add the glance user to the service project with the admin role:

```shell
openstack role add --project service --user glance admin
```

Create the glance Image service entity

```
[root@manager hyl]# openstack service create --name glance --description "OpenStack Image" image
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | OpenStack Image                  |
| enabled     | True                             |
| id          | cfe0909086ae40ddb851033df69ae111 |
| name        | glance                           |
| type        | image                            |
+-------------+----------------------------------+
```

```shell
openstack service list
```

Create the Image service API endpoints

```shell
openstack endpoint create --region RegionOne image public http://manager.node:9292
openstack endpoint create --region RegionOne image internal http://manager.node:9292
openstack endpoint create --region RegionOne image admin http://manager.node:9292
openstack endpoint list
```
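Every service in this guide gets the same three endpoints (public, internal, admin) with one URL: image on 9292, compute on 8774/v2.1, placement on 8778 and network on 9696. A hedged bash sketch of that loop; `create_endpoints` is a hypothetical helper, and `OPENSTACK` is an assumed override variable so you can preview the commands with `OPENSTACK=echo` before running them for real.

```shell
# Hypothetical helper: create the public, internal and admin endpoints for
# one service type. Set OPENSTACK=echo to print the commands instead.
create_endpoints() {
    local service=$1 url=$2 region=${3:-RegionOne} iface
    for iface in public internal admin; do
        ${OPENSTACK:-openstack} endpoint create --region "$region" \
            "$service" "$iface" "$url" || return 1
    done
}

# Usage sketch:
#   create_endpoints image http://manager.node:9292
#   OPENSTACK=echo create_endpoints compute http://manager.node:8774/v2.1
```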

Registration in Keystone is complete.

Install the package

```shell
yum install openstack-glance
```

Edit the configuration files

Go to /etc/glance.

Edit glance-api.conf

Four sections of this file need changes: [database], [keystone_authtoken], [paste_deploy], and [glance_store].

```shell
# Quick edit
openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:123456@manager.node/glance
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken www_authenticate_uri http://manager.node:5000
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://manager.node:5000
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers manager.node:11211
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name Default
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name Default
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance
openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password gl123456
openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone
openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http
openstack-config --set /etc/glance/glance-api.conf glance_store default_store file
openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/
```
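glance-api.conf and glance-registry.conf receive an identical [keystone_authtoken] block in this guide. A hedged bash sketch that applies those nine keys in one loop; `set_authtoken` is a hypothetical helper, and `OPENSTACK_CONFIG` is an assumed override variable for a dry run with `OPENSTACK_CONFIG=echo`.

```shell
# Hypothetical helper: write the [keystone_authtoken] keys used for Glance
# in this guide into FILE, with the given service user and password.
set_authtoken() {
    local file=$1 user=$2 pass=$3 cfg=${OPENSTACK_CONFIG:-openstack-config}
    local kv
    for kv in \
        "www_authenticate_uri http://manager.node:5000" \
        "auth_url http://manager.node:5000" \
        "memcached_servers manager.node:11211" \
        "auth_type password" \
        "project_domain_name Default" \
        "user_domain_name Default" \
        "project_name service" \
        "username $user" \
        "password $pass"; do
        # $kv is left unquoted on purpose so it splits into KEY and VALUE
        $cfg --set "$file" keystone_authtoken $kv || return 1
    done
}

# Usage sketch:
#   set_authtoken /etc/glance/glance-api.conf glance gl123456
#   set_authtoken /etc/glance/glance-registry.conf glance gl123456
```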

Edit glance-registry.conf

```shell
openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:123456@manager.node/glance
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken www_authenticate_uri http://manager.node:5000
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://manager.node:5000
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers manager.node:11211
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name Default
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name Default
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance
openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password gl123456
openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone
```

```shell
grep '^[a-z]' /etc/glance/glance-registry.conf
```

Synchronize the glance database

```shell
su -s /bin/sh -c "glance-manage db_sync" glance
```

Check the database: 15 tables are added in total.

```sql
use glance;
show tables;
```

Start the services

```shell
systemctl start openstack-glance-api
systemctl enable openstack-glance-api
systemctl start openstack-glance-registry
systemctl enable openstack-glance-registry
```

Test the service

First list the images; the list is empty at this point.

```shell
openstack image list
```

Download the cirros-0.3.4-x86_64-disk.img image from the Internet.

Upload it to the /opt directory on the manager node, then create the image:

```
[root@manager opt]# openstack image create "cirros-abc" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public
+------------------+------------------------------------------------------+
| Field            | Value                                                |
+------------------+------------------------------------------------------+
| checksum         | ee1eca47dc88f4879d8a229cc70a07c6                     |
| container_format | bare                                                 |
| created_at       | 2022-10-07T11:13:14Z                                 |
| disk_format      | qcow2                                                |
| file             | /v2/images/ee999785-2538-4c0c-882b-6ed5e9d2218e/file |
| id               | ee999785-2538-4c0c-882b-6ed5e9d2218e                 |
| min_disk         | 0                                                    |
| min_ram          | 0                                                    |
| name             | cirros-abc                                           |
| owner            | ad2491b6c8b14dd3af6f82a2bb1897ff                     |
| properties       | os_hash_algo='sha512', os_hash_value='1b03ca1bc3fafe448b90583c12f367949f8b0e665685979d95b004e48574b953316799e23240f4f739d1b5eb4c4ca24d38fdc6f4f9d8247a2bc64db25d6bbdb2', os_hidden='False' |
| protected        | False                                                |
| schema           | /v2/schemas/image                                    |
| size             | 13287936                                             |
| status           | active                                               |
| tags             |                                                      |
| updated_at       | 2022-10-07T11:13:15Z                                 |
| virtual_size     | None                                                 |
| visibility       | public                                               |
+------------------+------------------------------------------------------+
```

List again

```
[root@manager opt]# openstack image list
+--------------------------------------+------------+--------+
| ID                                   | Name       | Status |
+--------------------------------------+------------+--------+
| ee999785-2538-4c0c-882b-6ed5e9d2218e | cirros-abc | active |
| 7ea931dd-d23e-4967-b637-ffe6b921869e | cirros-abc | active |
+--------------------------------------+------------+--------+
```

Glance installation and testing is complete.

Install the Nova compute service

The following runs on the manager node (controller).

Four databases need to be created:

```sql
-- Log in to the database first
CREATE DATABASE nova_api;
CREATE DATABASE nova;
CREATE DATABASE nova_cell0;
CREATE DATABASE placement;
```

Grant privileges

```sql
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY '123456';
flush privileges;
```

Register the Nova service in Keystone

The nova user

```shell
# Adjust to your environment
source /home/hyl/admin-openrc.sh
openstack user create --domain default --password-prompt nova
# Password of my choice: nv123456
```

Add the nova user to the service project with the admin role:

```shell
openstack role add --project service --user nova admin
```

Create the nova Compute service entity

```shell
openstack service create --name nova --description "OpenStack Compute" compute
```

Create the Compute service API endpoints

```shell
openstack endpoint create --region RegionOne compute public http://manager.node:8774/v2.1
openstack endpoint create --region RegionOne compute internal http://manager.node:8774/v2.1
openstack endpoint create --region RegionOne compute admin http://manager.node:8774/v2.1
openstack endpoint list
```

The placement user

```shell
openstack user create --domain default --password-prompt placement
# Password of my choice: pm123456
```

Add the placement user to the service project with the admin role:

```shell
openstack role add --project service --user placement admin
```

Create the Placement API service entity

```shell
openstack service create --name placement --description "Placement API" placement
```

Create the Placement API endpoints

```shell
openstack endpoint create --region RegionOne placement public http://manager.node:8778
openstack endpoint create --region RegionOne placement internal http://manager.node:8778
openstack endpoint create --region RegionOne placement admin http://manager.node:8778
openstack endpoint list
```

Install the packages

```shell
yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api
```

Edit the configuration file

```shell
openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata
# Replace with your own IP
openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.80.143
openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true
openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver
openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:123456@manager.node
openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:123456@manager.node/nova_api
openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:123456@manager.node/nova
openstack-config --set /etc/nova/nova.conf placement_database connection mysql+pymysql://placement:123456@manager.node/placement
openstack-config --set /etc/nova/nova.conf api auth_strategy keystone
openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://manager.node:5000/v3
openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers manager.node:11211
openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password
openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default
openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default
openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service
openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova
openstack-config --set /etc/nova/nova.conf keystone_authtoken password nv123456
openstack-config --set /etc/nova/nova.conf vnc enabled true
openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip'
openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'
openstack-config --set /etc/nova/nova.conf glance api_servers http://manager.node:9292
openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp
openstack-config --set /etc/nova/nova.conf placement region_name RegionOne
openstack-config --set /etc/nova/nova.conf placement project_domain_name Default
openstack-config --set /etc/nova/nova.conf placement project_name service
openstack-config --set /etc/nova/nova.conf placement auth_type password
openstack-config --set /etc/nova/nova.conf placement user_domain_name Default
openstack-config --set /etc/nova/nova.conf placement auth_url http://manager.node:5000/v3
openstack-config --set /etc/nova/nova.conf placement username placement
openstack-config --set /etc/nova/nova.conf placement password pm123456
openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 300
```

Verify

```shell
grep '^[a-z]' /etc/nova/nova.conf
```

nova.conf is now configured, except for the networking section.

Go to /etc/httpd/conf.d/ and edit 00-nova-placement-api.conf:

```
# Add the following
<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
```

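Restart the httpd service

```shell
systemctl restart httpd
```

Synchronize the nova databases

Initialize the nova-api and placement databases:

```shell
su -s /bin/sh -c "nova-manage api_db sync" nova
```

Check the databases

```sql
show databases;
```

The nova_api and placement databases each have 32 tables.

```sql
use nova_api;
show tables;
use placement;
show tables;
```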

Register the cell0 database

```shell
su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
```

Create the cell1 cell

```shell
su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
```

Initialize the nova database

```shell
su -s /bin/sh -c "nova-manage db sync" nova
```

Check the databases: nova_cell0 and nova each have 110 tables.

```sql
use nova_cell0;
show tables;
use nova;
show tables;
```

Verify that cell0 and cell1 are registered

```
[root@manager opt]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova
+-------+--------------------------------------+--------------------------------------+---------------------------------------------------+----------+
| Name  | UUID                                 | Transport URL                        | Database Connection                               | Disabled |
+-------+--------------------------------------+--------------------------------------+---------------------------------------------------+----------+
| cell0 | 00000000-0000-0000-0000-000000000000 | none:/                               | mysql+pymysql://nova:****@manager.node/nova_cell0 | False    |
| cell1 | 9ca619eb-b5bc-4359-baf7-0ba1f7d3bdf1 | rabbit://openstack:****@manager.node | mysql+pymysql://nova:****@manager.node/nova       | False    |
+-------+--------------------------------------+--------------------------------------+---------------------------------------------------+----------+
```

Start the services and enable them at boot (5 services)

```shell
systemctl start openstack-nova-api
systemctl enable openstack-nova-api
systemctl start openstack-nova-consoleauth
systemctl enable openstack-nova-consoleauth
systemctl start openstack-nova-scheduler
systemctl enable openstack-nova-scheduler
systemctl start openstack-nova-conductor
systemctl enable openstack-nova-conductor
systemctl start openstack-nova-novncproxy
systemctl enable openstack-nova-novncproxy
```
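The start/enable pairs above follow one pattern. A hedged bash sketch that loops over unit names and stops at the first unit that fails to start, keeping start and enable as two separate calls like the commands above; `start_enable` is a hypothetical helper, and `SYSTEMCTL` is an assumed override variable that allows a dry run with `SYSTEMCTL=echo`.

```shell
# Hypothetical helper: start each unit, enable it at boot, and stop at the
# first failure.
start_enable() {
    local unit
    for unit in "$@"; do
        ${SYSTEMCTL:-systemctl} start "$unit" || { echo "failed to start $unit" >&2; return 1; }
        ${SYSTEMCTL:-systemctl} enable "$unit" || return 1
    done
}

# Usage sketch for the five controller services:
#   start_enable openstack-nova-api openstack-nova-consoleauth \
#       openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy
```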

The following runs on the compute nodes.

master.node is used as the example here.

Install the package on the compute node

```shell
yum install openstack-nova-compute
```

Edit the configuration file on the compute node

Go to /etc/nova/ and edit nova.conf:

```shell
# Replace with your own IP
openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.80.144
openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true
openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver
openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata
openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:123456@manager.node
openstack-config --set /etc/nova/nova.conf api auth_strategy keystone
openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://manager.node:5000/v3
openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers manager.node:11211
openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password
openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default
openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default
openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service
openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova
openstack-config --set /etc/nova/nova.conf keystone_authtoken password nv123456
openstack-config --set /etc/nova/nova.conf vnc enabled true
openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0
openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'
openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://manager.node:6080/vnc_auto.html
openstack-config --set /etc/nova/nova.conf glance api_servers http://manager.node:9292
openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp
openstack-config --set /etc/nova/nova.conf placement region_name RegionOne
openstack-config --set /etc/nova/nova.conf placement project_domain_name Default
openstack-config --set /etc/nova/nova.conf placement project_name service
openstack-config --set /etc/nova/nova.conf placement auth_type password
openstack-config --set /etc/nova/nova.conf placement user_domain_name Default
openstack-config --set /etc/nova/nova.conf placement auth_url http://manager.node:5000/v3
openstack-config --set /etc/nova/nova.conf placement username placement
openstack-config --set /etc/nova/nova.conf placement password pm123456
```

Verify:

```shell
grep '^[a-z]' /etc/nova/nova.conf
```

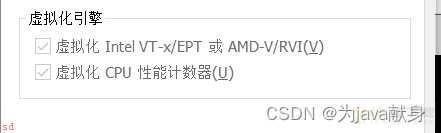

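Check whether the compute node supports hardware virtualization

```shell
egrep -c '(vmx|svm)' /proc/cpuinfo
```

A result of 0 means hardware acceleration is not supported and extra configuration is needed.

A result of 1 or more means no further action is needed.

Here the result is 0, so edit nova.conf again:

```shell
openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu
```

If the VM was created on ESXi, power it off, edit its settings, and enable hardware virtualization in the CPU options.

Then start the VM again and check:

```
[root@manager opt]# egrep -c '(vmx|svm)' /proc/cpuinfo
1
```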
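The check and the `virt_type` decision above can be combined. A minimal bash sketch, assuming that `kvm` is wanted whenever the vmx (Intel VT-x) or svm (AMD-V) flag is present and `qemu` otherwise; `pick_virt_type` is a hypothetical helper, and the cpuinfo path is a parameter so it can be tested against a sample file.

```shell
# Hypothetical helper: print "kvm" if the CPU flags include vmx or svm,
# otherwise "qemu" (software emulation).
pick_virt_type() {
    local cpuinfo=${1:-/proc/cpuinfo}
    if grep -Eqw 'vmx|svm' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
}

# Usage sketch on a compute node:
#   openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(pick_virt_type)"
```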

Start the nova service and its dependencies, and enable them at boot

```shell
systemctl start libvirtd
systemctl enable libvirtd
systemctl status libvirtd
systemctl start openstack-nova-compute
systemctl enable openstack-nova-compute
```

Add the compute nodes to the cell database

Run the following on the manager node.

Check whether the new compute nodes appear in the database:

```shell
# Adjust to your environment
source /home/hyl/admin-openrc.sh
openstack compute service list --service nova-compute
```

```
[root@manager opt]# openstack compute service list --service nova-compute
+----+--------------+--------+------+---------+-------+----------------------------+
| ID | Binary       | Host   | Zone | Status  | State | Updated At                 |
+----+--------------+--------+------+---------+-------+----------------------------+
|  6 | nova-compute | master | nova | enabled | up    | 2022-10-07T11:34:30.000000 |
|  7 | nova-compute | worker | nova | enabled | up    | 2022-10-07T11:34:32.000000 |
+----+--------------+--------+------+---------+-------+----------------------------+
```
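Reading the table by eye works for two nodes; for a scripted check, the client's value formatter can print just the Host and State columns. A hedged sketch, assuming the standard `-f value -c Host -c State` output options of python-openstackclient; `down_compute_hosts` is a hypothetical helper that reads those "HOST STATE" lines on stdin.

```shell
# Hypothetical helper: read "HOST STATE" lines on stdin, print every host
# whose nova-compute service is not "up", and return non-zero if any exist.
# Intended input (assumption):
#   openstack compute service list --service nova-compute -f value -c Host -c State
down_compute_hosts() {
    awk '$2 != "up" { print $1; bad = 1 } END { exit bad }'
}
```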

A built-in command discovers compute hosts:

```shell
su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
```

You can also configure how often automatic discovery runs.

Go to /etc/nova/ and edit nova.conf:

```
[scheduler]
discover_hosts_in_cells_interval = 300
```

Install the second compute node the same way.

Do a quick verification:

```shell
openstack compute service list --service nova-compute
openstack catalog list
openstack image list
nova-status upgrade check
```

Compute service installation is complete.

Install the Neutron networking service

The following runs on the manager node (controller).

Create the neutron database and grant privileges:

```sql
CREATE DATABASE neutron;
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123456';
flush privileges;
```

Create the neutron identity credentials

Create the neutron user in Keystone:

```shell
openstack user create --domain default --password-prompt neutron
# Password of my choice: nt123456
```

Add neutron to the service project and grant it the admin role:

```shell
openstack role add --project service --user neutron admin
```

Create the neutron service entity

```shell
openstack service create --name neutron --description "OpenStack Networking" network
```

Create the Networking service API endpoints

```shell
openstack endpoint create --region RegionOne network public http://manager.node:9696
openstack endpoint create --region RegionOne network internal http://manager.node:9696
openstack endpoint create --region RegionOne network admin http://manager.node:9696
openstack endpoint list
```

Install the Neutron networking components

The official guide describes two options: Provider networks and Self-service networks.

Self-service networks are used here.

Install the packages and dependencies

```shell
yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables
```

Edit the configuration files (part 1)

These are mainly the network-related files.

```shell
openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:123456@manager.node/neutron
openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2
openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router
openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips true
openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:123456@manager.node
openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://manager.node:5000
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://manager.node:5000
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers manager.node:11211
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password nt123456
openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true
openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true
openstack-config --set /etc/neutron/neutron.conf nova auth_url http://manager.node:5000
openstack-config --set /etc/neutron/neutron.conf nova auth_type password
openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default
openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default
openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne
openstack-config --set /etc/neutron/neutron.conf nova project_name service
openstack-config --set /etc/neutron/neutron.conf nova username nova
openstack-config --set /etc/neutron/neutron.conf nova password nv123456
openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp
```

Verify

```shell
grep '^[a-z]' /etc/neutron/neutron.conf
```

Go to /etc/neutron/plugins/ml2/ and edit ml2_conf.ini:

```shell
openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan,vxlan
openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan
openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge,l2population
openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security
openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider
openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000
openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true
```

Verify

```shell
grep '^[a-z]' /etc/neutron/plugins/ml2/ml2_conf.ini
```

Go to /etc/neutron/plugins/ml2/ and edit linuxbridge_agent.ini:

```shell
# Replace with your own NIC name
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:ens33
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true
# Replace with your own IP
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.80.143
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
```
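The NIC name (`ens33` here) and `local_ip` differ per node. A minimal sketch for finding the interface that carries a given IPv4 address by parsing the one-line-per-address output of `ip -o -4 addr show`, where the interface name is field 2 and the address/prefix is field 4; `iface_for_ip` is a hypothetical helper.

```shell
# Hypothetical helper: read "ip -o -4 addr show" output on stdin and print
# the interface that carries the given IPv4 address.
iface_for_ip() {
    local want=$1
    awk -v ip="$want" '
        { split($4, a, "/"); if (a[1] == ip) { print $2; found = 1; exit } }
        END { exit !found }'
}

# Usage sketch on the controller:
#   nic=$(ip -o -4 addr show | iface_for_ip 192.168.80.143)
#   openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini \
#       linux_bridge physical_interface_mappings "provider:$nic"
```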

Edit /etc/sysctl.conf and add the following two lines:

```
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
```
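These two keys only exist once the `br_netfilter` kernel module is loaded, so `sysctl -p` reports them as unknown otherwise. A hedged bash sketch that adds the keys without duplicating them on reruns; `enable_bridge_nf` is a hypothetical helper, and the module-loading lines in the comments are the assumed companion steps on the real host.

```shell
# Hypothetical helper: add the two bridge-netfilter keys to a sysctl file
# unless they are already set there.
enable_bridge_nf() {
    local conf=${1:-/etc/sysctl.conf} key
    for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
        grep -q "^${key}[[:space:]]*=" "$conf" 2>/dev/null || echo "${key} = 1" >> "$conf"
    done
}

# On the real host (as root), load the module first and keep it loaded at boot:
#   modprobe br_netfilter
#   echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
#   enable_bridge_nf /etc/sysctl.conf && sysctl -p
```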

Go to /etc/neutron/ and edit l3_agent.ini:

```shell
openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver linuxbridge
```

Verify

```shell
grep '^[a-z]' /etc/neutron/l3_agent.ini
```

Go to /etc/neutron/ and edit dhcp_agent.ini:

```shell
openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge
openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq
openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true
```

Verify

```shell
grep '^[a-z]' /etc/neutron/dhcp_agent.ini
```

Edit the configuration files (part 2)

These are mainly the service-related files; they must be edited whichever networking option you choose.

Go to /etc/neutron/ and edit metadata_agent.ini:

```shell
openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host manager.node
openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret 123456
```
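`metadata_proxy_shared_secret` is set to `123456` here and must hold the same value in nova.conf. If you prefer a random value, a minimal sketch that reads bytes from /dev/urandom and prints them as lowercase hex; `gen_secret` is a hypothetical helper.

```shell
# Hypothetical helper: print BYTES random bytes (default 16) as lowercase hex.
gen_secret() {
    local bytes=${1:-16}
    head -c "$bytes" /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Usage sketch: generate once and write the same value to both files.
#   secret=$(gen_secret)
#   openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret "$secret"
#   openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret "$secret"
```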

grep ;^[a-z]; /etc/neutron/metadata_agent.ini

进入 /etc/nova/目录;修改nova.conf文件

这是在安装nova服务时遗留的一个配置

openstack-config --set /etc/nova/nova.conf neutron url http://manager.node:9696openstack-config --set /etc/nova/nova.conf neutron auth_url http://manager.node:5000 openstack-config --set /etc/nova/nova.conf neutron auth_type password openstack-config --set /etc/nova/nova.conf neutron project_domain_name default openstack-config --set /etc/nova/nova.conf neutron user_domain_name default openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne openstack-config --set /etc/nova/nova.conf neutron project_name service openstack-config --set /etc/nova/nova.conf neutron username neutron openstack-config --set /etc/nova/nova.conf neutron password nt123456 openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy true openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret 123456

Create the network plugin symlink

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
ls -l /etc/neutron/
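`ln -s` fails with "File exists" if this step is re-run. A hedged, idempotent variant; `link_ml2_plugin` is a hypothetical helper whose argument is the Neutron config directory, defaulting to /etc/neutron:

```shell
# link_ml2_plugin [NEUTRON_ETC]
# Hypothetical helper: (re)creates plugin.ini -> plugins/ml2/ml2_conf.ini.
# ln -sfn replaces an existing link, so running it twice does not fail.
link_ml2_plugin() {
  local etc="${1:-/etc/neutron}"
  ln -sfn "$etc/plugins/ml2/ml2_conf.ini" "$etc/plugin.ini"
  # Succeed only if the link now points where we expect
  [ "$(readlink "$etc/plugin.ini")" = "$etc/plugins/ml2/ml2_conf.ini" ]
}
```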

Synchronize the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Start the services and enable them at boot

First restart the nova-api service:

systemctl restart openstack-nova-api

Then start the networking services and enable them at boot:

systemctl start neutron-server
systemctl enable neutron-server
systemctl start neutron-linuxbridge-agent
systemctl enable neutron-linuxbridge-agent
systemctl start neutron-dhcp-agent
systemctl enable neutron-dhcp-agent
systemctl start neutron-metadata-agent
systemctl enable neutron-metadata-agent
# If you chose Self-service networks, also start the following service
systemctl start neutron-l3-agent
systemctl enable neutron-l3-agent
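The start/enable pairs above follow a single pattern, so they can also be written as a loop. A sketch with hypothetical helper names; pass `self-service` only if you chose Self-service networks:

```shell
# controller_neutron_services [self-service]
# Hypothetical helper: lists the controller's Neutron units; the L3 agent is
# included only when the argument is "self-service".
controller_neutron_services() {
  echo neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent
  if [ "${1:-}" = "self-service" ]; then
    echo neutron-l3-agent
  fi
}

# start_and_enable UNIT...
# Hypothetical helper: starts each unit, then enables it at boot;
# reports on stderr any unit for which either step failed.
start_and_enable() {
  local unit
  for unit in "$@"; do
    systemctl start "$unit" && systemctl enable "$unit" || echo "failed: $unit" >&2
  done
}
```

Usage: `start_and_enable $(controller_neutron_services self-service)`.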

The Neutron networking service on the controller is now installed.

Compute nodes

Perform the following steps on the compute nodes (compute).


master.node is used as the example.

Install the Neutron components

yum install openstack-neutron-linuxbridge ebtables ipset

Modify configuration files (1)

These are the service-related files.

In the /etc/neutron/ directory, edit neutron.conf:

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:123456@manager.node
openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://manager.node:5000
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://manager.node:5000
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers manager.node:11211
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password nt123456
openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp
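This neutron.conf points the compute node at RabbitMQ, Keystone and memcached on manager.node, so a firewall or binding problem there later shows up as an agent that never comes alive. A rough pre-check, assuming bash and coreutils `timeout` are installed; `port_open` and `check_controller_ports` are hypothetical names, and 5672 is RabbitMQ's default AMQP port (the transport_url above does not set one):

```shell
# port_open HOST PORT
# Hypothetical helper: succeeds if a TCP connection to HOST:PORT opens within
# 3 seconds. Relies on bash's /dev/tcp pseudo-device.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# check_controller_ports [HOST]
# Hypothetical helper: probes RabbitMQ (5672), Keystone (5000),
# memcached (11211) and neutron-server (9696) on the controller.
check_controller_ports() {
  local host="${1:-manager.node}" port
  for port in 5672 5000 11211 9696; do
    if port_open "$host" "$port"; then
      echo "$host:$port open"
    else
      echo "$host:$port CLOSED"
    fi
  done
}
```

Run `check_controller_ports` on each compute node; every line should report open.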

In the /etc/nova/ directory, edit nova.conf:

This completes the item left over from the Nova installation.

openstack-config --set /etc/nova/nova.conf neutron url http://manager.node:9696
openstack-config --set /etc/nova/nova.conf neutron auth_url http://manager.node:5000
openstack-config --set /etc/nova/nova.conf neutron auth_type password
openstack-config --set /etc/nova/nova.conf neutron project_domain_name default
openstack-config --set /etc/nova/nova.conf neutron user_domain_name default
openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne
openstack-config --set /etc/nova/nova.conf neutron project_name service
openstack-config --set /etc/nova/nova.conf neutron username neutron
openstack-config --set /etc/nova/nova.conf neutron password nt123456

Modify configuration files (2)

These are the network-related files.

In the /etc/neutron/plugins/ml2/ directory, edit linuxbridge_agent.ini:

# (Self-service networks)
# NIC name
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:ens33
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true
# IP address of this node
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.80.144
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Verify:

grep '^[a-z]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini
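The local_ip above (192.168.80.144) is master.node's address; on worker.node it must be 192.168.80.145. A sketch that keeps the node-to-IP mapping in one place and pushes the setting from the manager over the passwordless SSH configured earlier (helper names are hypothetical; the first connection by hostname may still ask you to confirm the host key):

```shell
# node_ip NODE
# Hypothetical helper: maps this article's compute node names to their
# VXLAN local_ip; returns non-zero for an unknown node.
node_ip() {
  case "$1" in
    master.node) echo 192.168.80.144 ;;
    worker.node) echo 192.168.80.145 ;;
    *) return 1 ;;
  esac
}

# push_local_ip NODE
# Hypothetical helper: sets vxlan local_ip on NODE via ssh.
push_local_ip() {
  local ip
  ip=$(node_ip "$1") || return 1
  ssh "$1" "openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip $ip"
}
```

Usage from the manager: `push_local_ip master.node` and `push_local_ip worker.node`.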

Set the following two parameters in /etc/sysctl.conf, as on the management node:

# Set both to 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1

Start the services and enable them at boot

Restart the Compute service:

systemctl restart openstack-nova-compute

Start the networking agent and enable it at boot:

systemctl start neutron-linuxbridge-agent
systemctl enable neutron-linuxbridge-agent

Networking on the compute node is now configured.

Check and verify

Run the following checks on the management node (controller).

neutron ext-list

[root@manager opt]# openstack network agent list
+--------------------------------------+--------------------+---------+-------------------+-------+-------+---------------------------+
| ID                                   | Agent Type         | Host    | Availability Zone | Alive | State | Binary                    |
+--------------------------------------+--------------------+---------+-------------------+-------+-------+---------------------------+
| 5c46ac09-4514-4dcd-88a9-d87d58b46013 | Linux bridge agent | manager | None              | :-)   | UP    | neutron-linuxbridge-agent |
| b190fbb0-5229-40d7-8896-71eb3e69409c | DHCP agent         | manager | nova              | :-)   | UP    | neutron-dhcp-agent        |
| b22a1940-8b3f-48fe-92e3-127de1cf795f | Linux bridge agent | master  | None              | :-)   | UP    | neutron-linuxbridge-agent |
| bf948ce5-e035-41d7-a802-eeb4c3127024 | Metadata agent     | manager | None              | :-)   | UP    | neutron-metadata-agent    |
| e3a76c5d-ef11-46d7-a642-f6096fa7a389 | Linux bridge agent | worker  | None              | :-)   | UP    | neutron-linuxbridge-agent |
+--------------------------------------+--------------------+---------+-------------------+-------+-------+---------------------------+

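Normally the controller node runs 3 agents and each compute node runs 1 (if you also started neutron-l3-agent for Self-service networks, an L3 agent appears on the controller as well).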
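With more nodes the table gets long, so a per-host summary can make the check quicker. A sketch, assuming the default table output shown above (a `|`-separated table with ":-)" marking live agents); `summarize_agents` is a hypothetical helper:

```shell
# summarize_agents
# Hypothetical helper: reads the table printed by `openstack network agent list`
# on stdin and prints "HOST ALIVE/TOTAL" per host, sorted by host name.
summarize_agents() {
  awk -F'|' '
    NF >= 8 {
      id = $2; host = $4; alive = $6
      gsub(/^[ \t]+|[ \t]+$/, "", id)
      gsub(/^[ \t]+|[ \t]+$/, "", host)
      gsub(/^[ \t]+|[ \t]+$/, "", alive)
      if (id == "ID") next                 # skip the header row
      total[host]++
      if (alive == ":-)") up[host]++       # anything else counts as not alive
    }
    END { for (h in total) printf "%s %d/%d\n", h, up[h] + 0, total[h] }
  ' | sort
}
```

Usage: `openstack network agent list | summarize_agents`; for the output above this should print manager 3/3, master 1/1 and worker 1/1.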

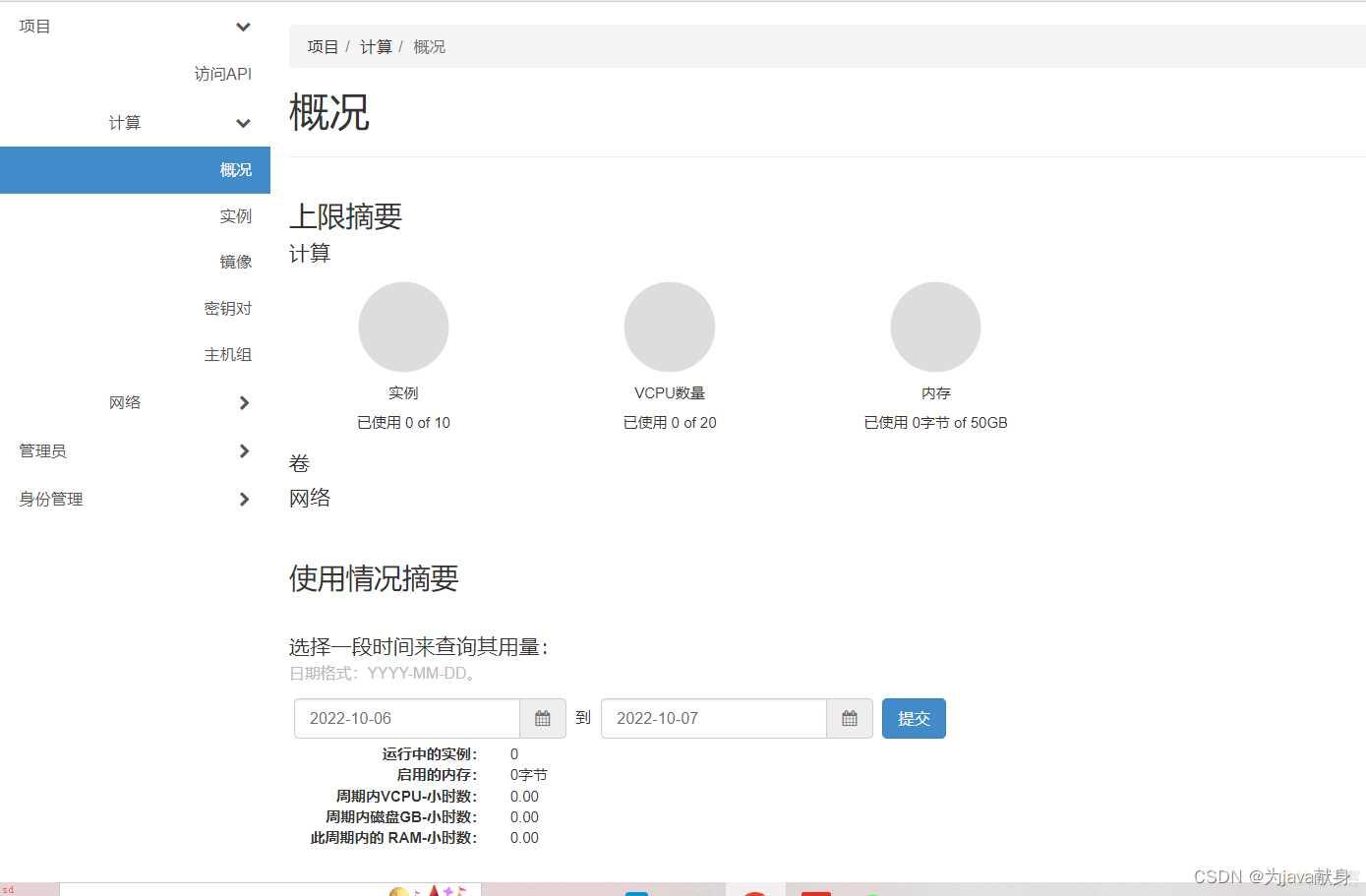

Install the dashboard

Perform these steps on the manager node (controller).

Install the package

yum install openstack-dashboard

Modify the configuration file

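In the /etc/openstack-dashboard/ directory, edit local_settings. Do not simply paste the block below into the file; change the existing entries in place and add only the ones that are missing.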

# vim /etc/openstack-dashboard/local_settings
OPENSTACK_HOST = "manager.node"
OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST
# Default role for users created through the dashboard: user
OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"
ALLOWED_HOSTS = ['*', 'localhost']
SESSION_ENGINE = 'django.contrib.sessions.backends.cache'  # needs to be added
# Use memcached as the session storage service
CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'manager.node:11211',
    },
}
OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True
# API versions
OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 2,
}
# Default domain for users created through the dashboard: Default
OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"
# If you chose networking option 1, disable support for layer-3 networking services
OPENSTACK_NEUTRON_NETWORK = {
    ...
    'enable_router': False,
    'enable_quotas': False,
    'enable_distributed_router': False,
    'enable_ha_router': False,
    'enable_lb': False,
    'enable_firewall': False,
    'enable_vpn': False,
    'enable_fip_topology_check': False,
}
# Optionally set the time zone; do not use CST, or the httpd service will fail to start
TIME_ZONE = "Asia/Shanghai"
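local_settings is Python, so a stray quote or bracket here stops the dashboard from loading. A hedged syntax check, assuming a `python` or `python3` interpreter on the node; `check_py_syntax` is a hypothetical helper that only parses the file and does not execute it:

```shell
# check_py_syntax FILE
# Hypothetical helper: parses FILE with Python's ast module (no execution)
# and exits non-zero on a syntax error. Prefers `python`, falls back to python3.
check_py_syntax() {
  local py
  py=$(command -v python || command -v python3) || return 1
  "$py" -c 'import ast, sys; ast.parse(open(sys.argv[1], "rb").read())' "$1"
}
```

Usage: `check_py_syntax /etc/openstack-dashboard/local_settings && echo OK` before restarting httpd.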

In the /etc/httpd/conf.d/ directory, edit openstack-dashboard.conf.

If the following line is not present, add it:

WSGIApplicationGroup %{GLOBAL}
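The "add it only if missing" check can be scripted so that repeating the step does not duplicate the directive. A sketch; `ensure_wsgi_global` is a hypothetical helper that appends the line at the end of the file when no existing (possibly indented) copy is found:

```shell
# ensure_wsgi_global [CONF]
# Hypothetical helper: appends "WSGIApplicationGroup %{GLOBAL}" to CONF
# (default /etc/httpd/conf.d/openstack-dashboard.conf) only if it is absent.
ensure_wsgi_global() {
  local conf="${1:-/etc/httpd/conf.d/openstack-dashboard.conf}"
  if ! grep -qE '^[[:space:]]*WSGIApplicationGroup[[:space:]]+%\{GLOBAL\}' "$conf" 2>/dev/null; then
    echo 'WSGIApplicationGroup %{GLOBAL}' >> "$conf"
  fi
}
```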

Restart the services

Restart the web server and the session storage service:

systemctl restart httpd
systemctl restart memcached

Verify that the dashboard is available

Open http://192.168.80.143:80/dashboard in a browser; the login page should appear.
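The same check can be run from a shell, for example on a headless node. A sketch, assuming curl is installed; `dashboard_status` is a hypothetical helper that follows redirects, since the dashboard typically redirects to its login page:

```shell
# dashboard_status URL
# Hypothetical helper: prints the final HTTP status code for URL after
# following redirects; prints 000 if no HTTP response was received.
dashboard_status() {
  curl -sL -o /dev/null --max-time 10 -w '%{http_code}' "$1"
}
```

Usage: `dashboard_status http://192.168.80.143/dashboard` should print 200 once httpd is serving the login page.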

Domain: default

Username: admin

Password: ks123456

The password is the one specified in the keystone-manage bootstrap command; it is also in the openrc file.

References

https://docs.openstack.org/install-guide/openstack-services.html

https://blog.csdn.net/QQ_38773184/article/details/82391073

https://blog.csdn.net/xinfeiyang060502118/article/details/102514114

https://blog.csdn.net/zz_aiytag/article/details/104390440?spm=1001.2014.3001.5506
