Pacemaker + Corosync + HAProxy: A Highly Available OpenStack Cluster in Practice

Author: 快盘下载

I. Introduction to DRBD

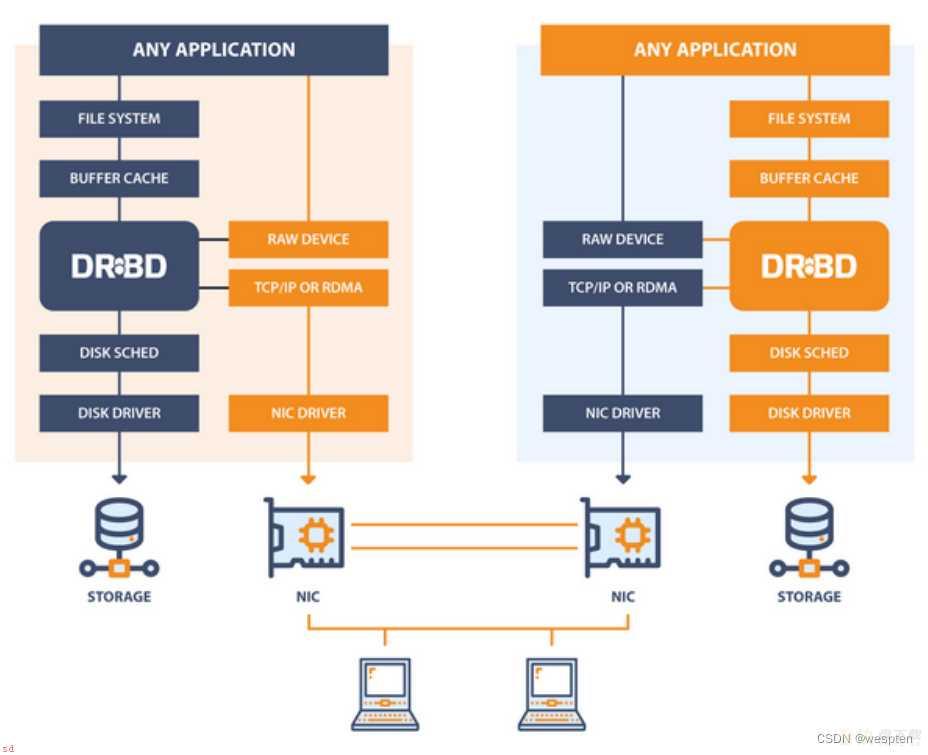

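DRBD (Distributed Replicated Block Device) consists of a kernel module and a set of supporting scripts and is used to build high-availability clusters. It works by mirroring an entire block device over the network, so that a real-time mirror of a local block device can be kept on a remote machine. Combined with a heartbeat link, it can be thought of as a form of network RAID.

DRBD replication and synchronization: DRBD receives the data, writes it to the local disk, and sends it to the other host, which then stores it on its own disk. In the default single-primary mode DRBD allows read/write access on only one node at a time, which is sufficient for a typical failover high-availability cluster. Read/write access from two nodes at once requires dual-primary mode, available in DRBD 8.0 and later.

A DRBD system consists of two or more nodes. As in an HA cluster, nodes take a primary or a secondary role; applications and the operating system can run on, and access, the DRBD device only on the node that holds the primary role.

Data written on the primary node passes through the DRBD device to the primary's disk and is sent at the same time to the corresponding DRBD device on the secondary node, which writes it to its own disk. On the secondary node DRBD does nothing more than write the received data from the DRBD device to the local disk.

Most current high-availability clusters rely on shared storage, and DRBD can fill that role without any extra hardware investment. Because it runs over an ordinary IP network (much like an IP SAN), using DRBD as shared storage saves considerable cost: an IP network is far cheaper than a dedicated storage network.

A DRBD device must be set up before a file system is created on it. User data must be accessed only through the /dev/drbd device, never through the underlying raw device, because with internal metadata DRBD stores its metadata at the end of the raw device (a fixed 128 MB in early DRBD releases; current releases size this area according to the device size and the number of peers). Create file systems only on the /dev/drbd device, not on the raw device. On the primary node the DRBD device is mounted on a directory and used normally. On the secondary node it cannot be mounted, because it exists to receive data from the primary and is operated by DRBD itself.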
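To get a feel for how much space internal metadata takes on a given device, the size can be estimated with a little shell arithmetic. This is a rough sketch that assumes the estimate formula given in the DRBD User's Guide (Ms = ceil(Cs / 2^18) * 8 * N + 72, in 512-byte sectors); the function name `drbd_meta_sectors` is made up for this illustration.

```shell
#!/usr/bin/env bash
# Estimate DRBD internal metadata size in 512-byte sectors.
# Assumed formula (DRBD User's Guide): Ms = ceil(Cs / 2^18) * 8 * N + 72
#   Cs = size of the backing device in sectors, N = number of peers.
drbd_meta_sectors() {
    local sectors=$1 peers=${2:-1}
    local chunks=$(( (sectors + 262143) / 262144 ))   # ceil(Cs / 2^18)
    echo $(( chunks * 8 * peers + 72 ))
}

# Example: a 2 GiB backing device replicated to one peer.
lv_sectors=$(( 2 * 1024 * 1024 * 1024 / 512 ))
echo "metadata sectors: $(drbd_meta_sectors "$lv_sectors" 1)"
# prints "metadata sectors: 200" (about 100 KiB)
```

For small volumes the reservation is tiny, which is why the usable size of /dev/drbdX is only slightly smaller than the backing device.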

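DRBD is a distributed storage system that sits in the storage layer of the Linux kernel. It lets two Linux servers share block devices, file systems, and data, and works much like a network RAID 1, as illustrated in the figure.

Official website: DRBD - LINBIT

Documentation: User Guides and Product Documentation - LINBIT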

II. DRBD Installation and Configuration

10.163.1.0/24: management network.

172.16.10.0/24: storage network (VLAN 10).

1) Basic configuration

    # Firewall and SELinux (all nodes)
    sed -i 's/SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config
    setenforce 0
    systemctl disable firewalld --now

    # drbd1
    hostnamectl set-hostname drbd1
    nmcli con add con-name bond1 ifname bond1 type bond mode active-backup ipv4.method disabled ipv6.method ignore
    nmcli con add con-name bond1-port1 ifname ens4 type bond-slave master bond1
    nmcli con add con-name bond1-port2 ifname ens5 type bond-slave master bond1
    nmcli con add con-name bond1-vlan10 ifname bond1-vlan10 type vlan id 10 dev bond1 ipv4.address 172.16.10.1/24 ipv4.method manual

    # drbd2
    hostnamectl set-hostname drbd2
    nmcli con add con-name bond1 ifname bond1 type bond mode active-backup ipv4.method disabled ipv6.method ignore
    nmcli con add con-name bond1-port1 ifname ens4 type bond-slave master bond1
    nmcli con add con-name bond1-port2 ifname ens5 type bond-slave master bond1
    nmcli con add con-name bond1-vlan10 ifname bond1-vlan10 type vlan id 10 dev bond1 ipv4.address 172.16.10.2/24 ipv4.method manual

    # drbd3
    hostnamectl set-hostname drbd3
    nmcli con add con-name bond1 ifname bond1 type bond mode active-backup ipv4.method disabled ipv6.method ignore
    nmcli con add con-name bond1-port1 ifname ens4 type bond-slave master bond1
    nmcli con add con-name bond1-port2 ifname ens5 type bond-slave master bond1
    nmcli con add con-name bond1-vlan10 ifname bond1-vlan10 type vlan id 10 dev bond1 ipv4.address 172.16.10.3/24 ipv4.method manual

    # h3c-switch1 (abbreviated commands: port link-mode bridge, port link-type trunk, port trunk permit vlan all)
    sys
    int range ge1/0 ge2/0 ge3/0 ge4/0
    p l b
    p l t
    p t p v a
    vlan 10
    exit
    save

    # h3c-switch2
    sys
    int range ge1/0 ge2/0 ge3/0 ge4/0
    p l b
    p l t
    p t p v a
    vlan 10
    exit
    save

    # Repository configuration on drbd1 to drbd3
    # As of 17 February 2022 the CentOS 8 repositories have been taken offline,
    # so switch to the CentOS Stream 8 repositories
    rm -rf /etc/yum.repos.d/*
    cat > /etc/yum.repos.d/iso.repo <<END
    [AppStream]
    name=AppStream
    baseurl=http://mirrors.163.com/centos/8-stream/AppStream/x86_64/os/
    enabled=1
    gpgcheck=0
    [BaseOS]
    name=BaseOS
    baseurl=http://mirrors.163.com/centos/8-stream/BaseOS/x86_64/os/
    enabled=1
    gpgcheck=0
    [epel]
    name=epel
    baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/8/Everything/x86_64/
    gpgcheck=0
    END
    yum clean all
    yum makecache

    # Name resolution
    cat > /etc/hosts <<END
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.16.10.1 drbd1
    172.16.10.2 drbd2
    172.16.10.3 drbd3
    END

2) Two-node DRBD deployment

    # On drbd1 and drbd2
    yum -y group install "Development Tools" && yum -y install drbd wget vim net-tools lvm2
    wget https://pkg.linbit.com/downloads/drbd/9.0/drbd-9.0.32-1.tar.gz
    tar xf drbd-9.0.32-1.tar.gz
    yum update kernel -y && reboot -f

    # After the reboot: build, install, and load the kernel module
    cd drbd-9.0.32-1 && make && make install
    modprobe drbd
    echo drbd > /etc/modules-load.d/drbd.conf
    sed -i '/options/a auto-promote yes;' /etc/drbd.d/global_common.conf
    sed -i '/net/a protocol C;' /etc/drbd.d/global_common.conf
    pvcreate /dev/vdb
    vgcreate nfs /dev/vdb
    lvcreate -n nfs -L 2G nfs

    # Resource configuration file
    cat > /etc/drbd.d/nfs.res <<END
    resource nfs {
        meta-disk internal;
        device /dev/drbd1;
        net {
            verify-alg sha256;
        }
        on drbd1 {
            address 172.16.10.1:7788;
            disk /dev/nfs/nfs;
        }
        on drbd2 {
            address 172.16.10.2:7788;
            disk /dev/nfs/nfs;
        }
    }
    END

    # Create the DRBD resource
    drbdadm create-md nfs
    drbdadm up nfs
    drbdadm primary nfs --force

    # Format the DRBD device /dev/drbd1
    mkfs.xfs /dev/drbd1

3) Three-node DRBD deployment

    cat > /etc/drbd.d/nfs.res <<END
    resource nfs {
        meta-disk internal;
        device /dev/drbd1;
        net {
            verify-alg sha256;
        }
        on drbd1 {
            node-id 1;
            address 172.16.10.1:7788;
            disk /dev/nfs/nfs;
        }
        on drbd2 {
            node-id 2;
            address 172.16.10.2:7788;
            disk /dev/nfs/nfs;
        }
        on drbd3 {
            node-id 3;
            address 172.16.10.3:7788;
            disk /dev/nfs/nfs;
        }
        connection {
            host drbd1 port 7001;
            host drbd2 port 7010;
        }
        connection {
            host drbd1 port 7012;
            host drbd3 port 7021;
        }
        connection {
            host drbd2 port 7002;
            host drbd3 port 7020;
        }
    }
    END
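Listing one connection block per node pair grows quickly as nodes are added. DRBD 9 also accepts a `connection-mesh` section that connects all listed hosts to each other. The following is a sketch of a small generator for such a file; the helper name `gen_mesh_res` and its argument order are invented for this example, and it only prints the configuration instead of writing it under /etc/drbd.d.

```shell
#!/usr/bin/env bash
# Print a DRBD 9 resource definition that uses connection-mesh instead of
# one connection{} block per node pair.
# Usage: gen_mesh_res <resource> <minor> <backing-disk> <port> <host=ip>...
gen_mesh_res() {
    local res=$1 minor=$2 disk=$3 port=$4
    shift 4
    local id=1 hosts="" pair host ip
    echo "resource ${res} {"
    echo "    meta-disk internal;"
    echo "    device /dev/drbd${minor};"
    echo "    disk ${disk};"
    for pair in "$@"; do
        host=${pair%%=*}          # text before the first '='
        ip=${pair#*=}             # text after the first '='
        echo "    on ${host} { node-id ${id}; address ${ip}:${port}; }"
        hosts="${hosts} ${host}"
        id=$(( id + 1 ))
    done
    echo "    connection-mesh { hosts${hosts}; }"
    echo "}"
}

gen_mesh_res nfs 1 /dev/nfs/nfs 7788 \
    drbd1=172.16.10.1 drbd2=172.16.10.2 drbd3=172.16.10.3
```

Redirecting the output to a file such as /etc/drbd.d/nfs.res on every node would give an equivalent full-mesh setup, with all nodes sharing one port.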

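III. Pacemaker Overview

1) Cluster management software

Cluster resource management software comes in many varieties, both commercial and open source. In the high-availability architectures of traditional business systems, commercial cluster managers are very common, for example IBM's cluster manager PowerHA SystemMirror (also known as HACMP) and the pureScale database clustering software for DB2.

Other examples are Oracle's Solaris Cluster family and the ASM and RAC cluster software for the Oracle database; commercial HA products like these hold a large share of the market. As the open-source community and ecosystem have grown, many commercial cluster products have also moved toward open source, such as xCAT, the cluster software open-sourced by IBM. In the Linux open-source world, combinations such as Pacemaker/Corosync and HAProxy/Keepalived are also very widely used.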

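2) About Pacemaker

Pacemaker is the most widely used open-source cluster resource manager on Linux. It uses the messaging and membership services of the cluster infrastructure (Corosync or Heartbeat) to provide failure detection and resource recovery at both the node and the resource level, maximizing the availability of cluster services. Logically, Pacemaker manages the full life cycle of the software services in the cluster, driven by resource rules that the cluster administrator defines; this management can extend to entire software systems and the interactions between them.

In practice Pacemaker can manage clusters of any size. Its powerful resource dependency model lets administrators describe the relationships between cluster resources precisely, including their ordering and placement.

Almost any kind of software can be managed by Pacemaker as a resource, provided it has a custom start and management script (a resource agent). Note also that Pacemaker is only a resource manager and does not itself provide cluster heartbeat messaging. Because every HA cluster needs heartbeat monitoring, many beginners assume that Pacemaker performs it, when in fact Pacemaker relies on Corosync or Heartbeat for it.

Historically, Pacemaker is the continuation of the CRM project developed for Heartbeat. CRM first appeared in 2003 as a cluster resource manager written specifically for Heartbeat, and its first version, the predecessor of Pacemaker, was officially released with Heartbeat 2.0 in 2005. At the end of 2007 CRM was split out of Heartbeat 2.1.3, and the stable Pacemaker 0.6 release followed in 2008. The CRM project was terminated in March 2010, and Pacemaker has been developed and maintained as its successor ever since. Today it is the de facto standard among open-source cluster resource managers.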

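3) Heartbeat subprojects

From version 3.0 on, Heartbeat was split into several subprojects: Pacemaker, Heartbeat 3.0, Cluster Glue, and Resource Agents.

1. Heartbeat

The messaging layer of the original Heartbeat project became the new Heartbeat project, which is responsible only for maintaining information about the cluster nodes and the heartbeat communication between them. Pacemaker is normally combined with either Heartbeat or Corosync to form the cluster management stack, and it uses the node and heartbeat information they provide to determine node state.

2. Cluster Glue

Cluster Glue is an intermediate layer that ties Heartbeat and Pacemaker together. It has two main parts: the Local Resource Manager (LRM) and the fencing facility STONITH (Shoot The Other Node In The Head).

3. Resource Agent

A resource agent (RA) is a collection of scripts that start and stop a service and monitor its state. The LRM on the local node calls these scripts to start, stop, and monitor resources.

4. Pacemaker

Pacemaker is the control center of the HA cluster and manages the state and behavior of all cluster resources; clients configure, manage, and monitor the whole cluster through it. It is a powerful open-source cluster resource manager that runs on the mainstream Linux distributions, including Red Hat's RHEL, Fedora, openSUSE, Debian, Ubuntu, and CentOS.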

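4) Pacemaker features

The main features of Pacemaker are:

1. Detects and recovers from node-level and service-level failures.

2. Storage agnostic: no shared storage is required.

3. Resource agnostic: anything that can be controlled by a script can be run as a cluster service.

4. Supports node STONITH to protect data integrity and prevent split-brain.

5. Supports both large and small clusters.

6. Supports both quorum-based and resource-driven clusters.

7. Supports practically any redundancy configuration.

8. Automatically synchronizes the configuration across all nodes.

9. Supports cluster-wide constraints such as ordering, colocation, and anti-colocation.

10. Supports advanced service types, for example:

(1) Clones: services that must run on several nodes can be cloned, in which case the same service is started on each of those nodes.

(2) Multi-state: services that run in more than one state can be configured as multi-state resources. Many HA services run in different modes, such as Active/Active or Active/Passive, and may switch between the active and the standby (passive) state.

11. Provides unified, scriptable cluster management tools.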
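The quorum support in the list above comes down to simple majority arithmetic. The sketch below assumes one vote per node (the usual default); the function names are made up for this illustration.

```shell
#!/usr/bin/env bash
# Votes needed for quorum in an n-node cluster with one vote per node:
# a strict majority, floor(n / 2) + 1.
quorum_needed() { echo $(( $1 / 2 + 1 )); }

# Number of node failures the cluster survives while staying quorate.
failures_tolerated() { echo $(( $1 - $(quorum_needed "$1") )); }

for n in 2 3 5; do
    echo "nodes=$n quorum=$(quorum_needed "$n") tolerated=$(failures_tolerated "$n")"
done
# nodes=2 quorum=2 tolerated=0
# nodes=3 quorum=2 tolerated=1
# nodes=5 quorum=3 tolerated=2
```

The first output line shows why a plain two-node cluster cannot lose a node and keep quorum, which is the reason two-node setups need special quorum handling or fencing.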

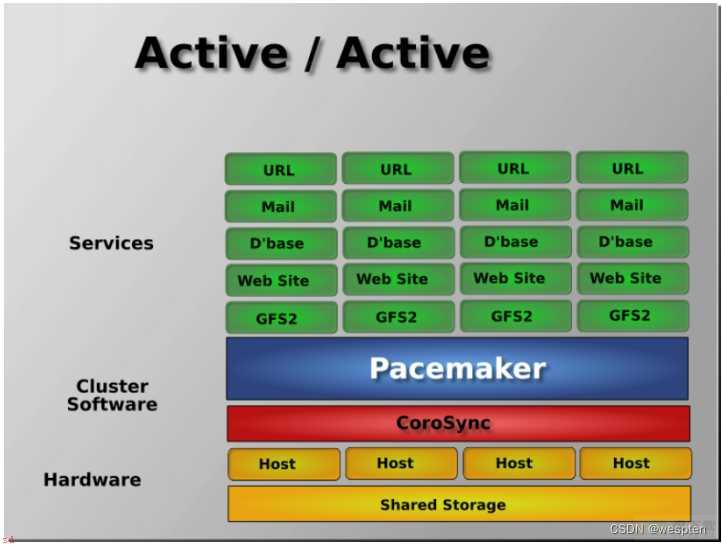

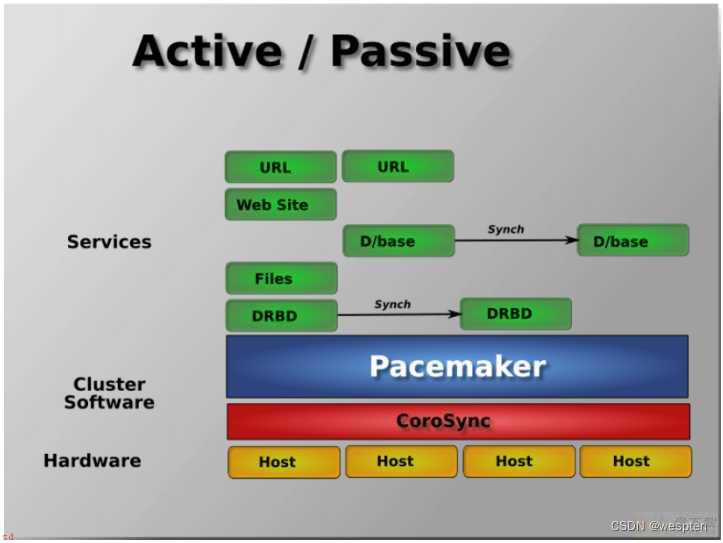

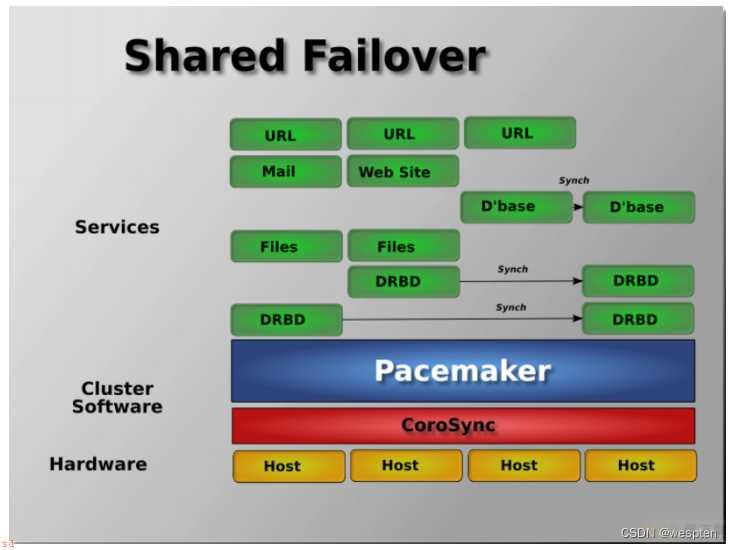

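5) Pacemaker service types

Pacemaker makes no assumptions about the user's environment, so it supports any node-redundancy configuration, including Active/Active, Active/Passive, N+1, N+M, N-to-1, and N-to-N clusters. Users can deploy whichever matches their availability requirements and budget.

1. Active/Active

In this mode, requests for a failed node are either redirected automatically to another healthy node or balanced across the remaining healthy nodes by a load balancer. The nodes usually run the same software with the same configuration, and the services run on all of them in parallel.

2. Active/Passive

Every node has an instance of the same service deployed, but normally only the instance on one node is active; the service on a standby node is activated only after the active node fails. This mode usually means deploying extra hardware that carries no load in normal operation.

3. N+1

N+1 means provisioning one extra standby node, which is activated to take over the services of whichever node fails. When the nodes run different software, that is, when the cluster runs several services, the standby node must be able to take over any of them. If the whole cluster runs a single service, N+1 degenerates to Active/Passive.

4. N+M

When one cluster runs several services, the single failover node of N+1 may not provide enough redundancy. The cluster then needs M (M > 1) standby nodes so that it stays highly available when several services fail at once. The value of M is a trade-off between availability requirements and budget.

5. N-to-1

In N-to-1 mode, the standby node that takes over a service becomes the active node only temporarily (during which time the cluster has no standby). Once the failed primary node recovers and rejoins the cluster, the services move back to it (failback) and the standby node returns to the standby state, preserving high availability.

6. N-to-N

N-to-N combines Active/Active and N+M: the services and requests of a failed node are spread across the remaining healthy nodes. No standby nodes are needed, but every active node must have spare capacity.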

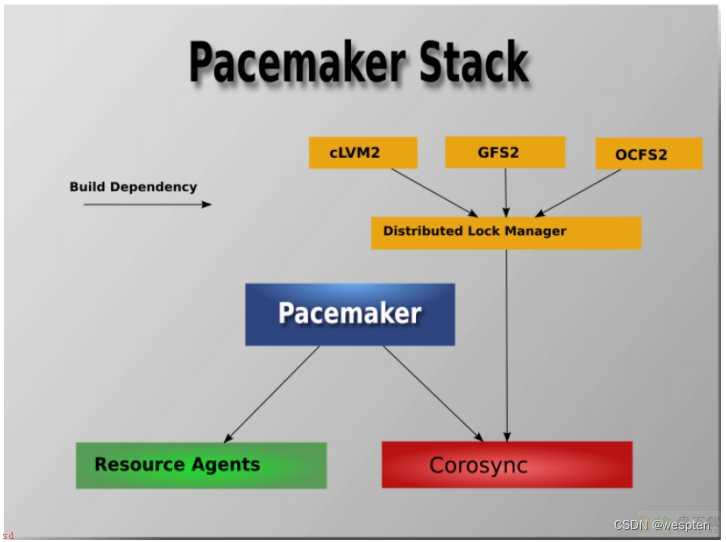

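6) Pacemaker architecture

At a high level of abstraction, the Pacemaker architecture consists of three parts: cluster-independent components, cluster resource management components, and the low-level cluster infrastructure.

1. Low-level infrastructure (Corosync)

The low-level infrastructure provides the cluster with reliable messaging, cluster membership, and related services. It is implemented by projects such as Corosync, CMAN, and Heartbeat.

2. Cluster-independent components (RA)

In the Pacemaker architecture these components comprise the resources themselves, the scripts that start, stop, and monitor them, and a local daemon that masks the differences between the standards those scripts implement. Although resources running as multiple instances interact much like a distributed cluster system, the instances still lack proper HA mechanisms and resource-independent cluster governance, which is what the remaining cluster components provide.

3. Resource management

Pacemaker acts as the brain of the cluster: it reacts to and processes cluster events. These include nodes joining or leaving the cluster; resource events caused by failures, maintenance, or scheduled operations; and administrative actions such as configuration changes and service restarts. In response to each event, Pacemaker computes the ideal state of the cluster and plans the actions needed to reach it, which may include moving resources, stopping nodes, or even forcing a node offline through a remote power management module.

When integrated with Corosync, Pacemaker also supports the common open-source cluster file systems. As a result of standardization work in the cluster file system community, these file systems use a common distributed lock manager for concurrent read/write access. The lock manager uses Corosync's messaging and membership services (which nodes are online or offline) to implement the file system cluster, and uses Pacemaker for fencing.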

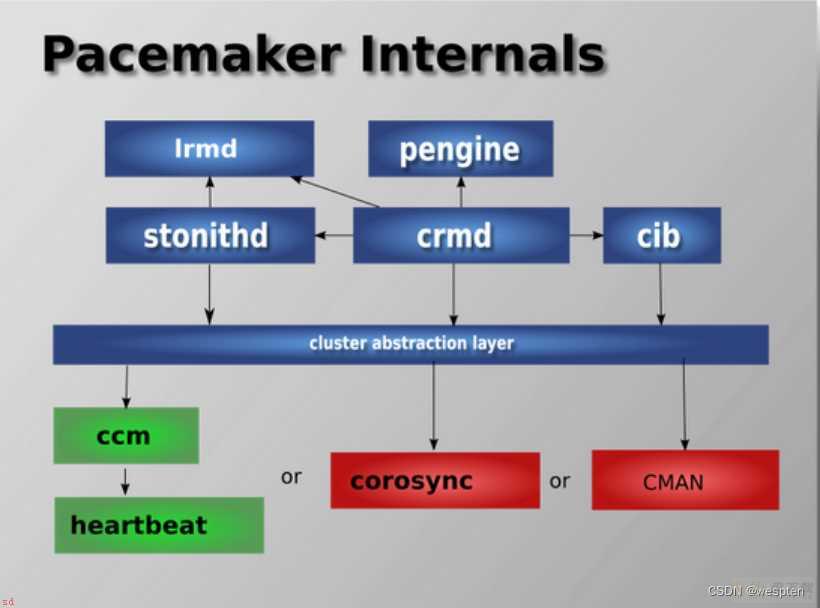

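7) Pacemaker components

As a standalone cluster resource manager, Pacemaker is itself made up of several internal components that communicate and cooperate to manage cluster resources. There are five of them; their relationships are shown in the figure.

CIB: Cluster Information Base.

CRMd: Cluster Resource Manager daemon.

LRMd: Local Resource Manager daemon.

PEngine (PE): Policy Engine.

STONITHd: the cluster fencing daemon (Shoot The Other Node In The Head daemon).

The CIB holds the basic configuration and state of the cluster. It uses XML to represent both the cluster configuration and the current state of all resources, and its contents are synchronized automatically across the cluster nodes. The PE uses the information in the CIB to compute the ideal state of the cluster and to work out how resources must be controlled to reach it. Once the PE has made its decisions it issues a list of resource operations, which is passed to the Designated Controller (DC), the controller node elected by the cluster; the DC is normally the node running the master CRMd.

When the cluster starts, Pacemaker elects the CRMd instance on one node as the master CRMd, which then centrally processes all the instructions that the PE derives from the CIB. If the master CRMd process, or the node it runs on, fails, the cluster promptly elects a new master CRMd on another node.

The DC processes the PE's instruction list in the order requested. Put simply, it either hands an instruction to the LRMd on its own node (the local CRMd is already acting as master for the whole cluster and does not process cluster instructions in parallel) or sends it over the cluster messaging layer to the CRMd on another node, which forwards it to the LRMd on that node.

After the nodes have executed their instructions, the CRMd on each of the other nodes returns all results and logs to the DC, so the DC ends up with the result and state of every resource operation. It compares the actual results with the expected ones and decides whether to wait for the operations already started to finish before taking the next step, or to cancel the current operations and ask the PE to recompute the ideal state and issue new instructions based on what actually happened.

In some situations the cluster may need to power off a node to protect shared data or to complete resource recovery. For this purpose Pacemaker provides node fencing, implemented mainly by the STONITH daemon. STONITH is a forcible isolation measure that usually works by driving a remote power switch to turn a node off or on. In Pacemaker, STONITH devices are modeled as resources and configured in the CIB, so that their own failures are easy to monitor.

The STONITH daemon (STONITHd) understands the topology of the STONITH devices. When the cluster manager needs to fence a node, a STONITHd client simply requests that the node be fenced and STONITHd does the rest: the STONITH device configured as a cluster resource responds to the request and fences the node. In practice, the appropriate STONITH device depends on the server vendor and on whether the node is a physical or a virtual machine.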

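IV. Pacemaker Cluster Resource Management

1) The pcs cluster management tool

The cibadmin command-line tool can be used to view and manage the Pacemaker cluster configuration. The configuration in the CIB is very large and is expressed in XML, so cibadmin may be workable only for clusters with a handful of nodes and resources.

For a large cluster with many nodes and resources, viewing and changing the configuration by editing XML is difficult and impractical: the file has so many entries that manual edits are highly error-prone. In the open-source world simplicity and practicality count, and a simple, unified command-line tool is the natural way to change configuration parameters.

As Pacemaker evolved, the community produced two widely used command-line management tools, pcs and crmsh. This article uses pcs; for crmsh usage and documentation, see the official Pacemaker website.

pcs can perform nearly every cluster management task in a Pacemaker cluster, including managing the resources under Pacemaker's control and changing configuration properties. Note that pcs places requirements on the installed software versions: it needs Pacemaker 1.1.8 or later and Corosync 2.0 or later.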
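The minimum versions can be checked with a small helper before pcs is used. This is only a sketch: it relies on the `-V` (version sort) option of GNU sort, the function names are invented here, and the installed versions in the example calls are sample values rather than output from a real node.

```shell
#!/usr/bin/env bash
# Succeed if version $1 is greater than or equal to version $2.
# Relies on GNU sort's -V (version sort) option.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_min <name> <installed-version> <required-version>
check_min() {
    if version_ge "$2" "$3"; then
        echo "$1 $2: OK (>= $3)"
    else
        echo "$1 $2: too old (need >= $3)"
    fi
}

# Sample values; on an RPM-based node the installed version could be read
# with something like: rpm -q --qf '%{VERSION}' pacemaker
check_min pacemaker 2.1.2 1.1.8
check_min corosync 1.4.7 2.0
```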

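2) Pacemaker cluster resource management

1. Cluster resource agents

In a Pacemaker HA cluster, a resource is a service that the cluster keeps highly available. Depending on the configuration there are several kinds of resource: the simplest is the primitive resource, and there are more advanced and complex kinds such as resource groups and clone resources.

Every primitive resource has a resource agent (RA), an external script or program associated with the resource. It abstracts the service that the resource provides and presents a uniform interface through which the cluster operates on the resource. With an RA, almost any application can become a Pacemaker resource and be managed and controlled by the cluster resource manager.

Thanks to the RA, the cluster resource manager does not need to understand the resources it manages: the working logic of the resource is encapsulated in the RA, and the resource manager only has to issue commands such as start, stop, and monitor for the RA to carry out. Resource management therefore depends entirely on the RA provided for the resource; what the RA script can do determines what control the resource manager has. A fully featured RA must be tested thoroughly before release.

Most RAs are shell scripts, but they can equally be written in other popular languages such as C, Python, or Perl.

Pacemaker supports several classes of resource agent: OCF, LSB, Upstart, systemd, service, fencing, and Nagios plugins. On Linux the most common are OCF (Open Cluster Framework), LSB (Linux Standard Base), systemd, and service agents.

(1) OCF

OCF stands for Open Cluster Framework. In essence the OCF standard is an extension of the LSB conventions for init scripts. It supports parameter passing, self-description, and extensibility, and it strictly defines the exit codes that operations must return; the cluster resource manager judges the result of an operation by the exit code the resource agent returns.

If an OCF script returns an exit code that does not match the actual outcome, the behavior of the resource after the operation can become baffling. This is especially confusing for users unfamiliar with OCF scripts, because the cluster relies on the exit code to distinguish between a cleanly stopped resource, a failed one, and one in an unknown state. OCF scripts must therefore be tested thoroughly before they are put to use; a faulty one can disrupt resource management for the whole cluster. As a self-describing and highly flexible industry standard, OCF has become the most widely used resource class in Pacemaker.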
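The role of exit codes is easiest to see in a tiny agent. The following sketch is not a complete OCF agent (a real one also implements meta-data and validate-all and normally sources the ocf-shellfuncs library); it manages a dummy "service" that exists only as a state file. The `dummy_*` function names and the state file path are hypothetical, while the numeric exit codes are the standard OCF values.

```shell
#!/bin/sh
# Minimal OCF-style agent sketch for a dummy service represented by a file.

# Standard OCF exit codes used below.
OCF_SUCCESS=0
OCF_ERR_GENERIC=1
OCF_ERR_UNIMPLEMENTED=3
OCF_NOT_RUNNING=7

# Hypothetical state file; set DUMMY_STATE to use a different path.
STATE_FILE="${DUMMY_STATE:-/tmp/dummy-ra.state}"

dummy_monitor() {
    [ -f "$STATE_FILE" ] && return $OCF_SUCCESS
    return $OCF_NOT_RUNNING            # cleanly stopped, not an error
}

dummy_start() {
    dummy_monitor && return $OCF_SUCCESS   # already running: start is idempotent
    touch "$STATE_FILE" || return $OCF_ERR_GENERIC
    return $OCF_SUCCESS
}

dummy_stop() {
    rm -f "$STATE_FILE" || return $OCF_ERR_GENERIC
    return $OCF_SUCCESS                # stopping a stopped resource succeeds
}

dummy_main() {
    case "$1" in
        start)   dummy_start ;;
        stop)    dummy_stop ;;
        monitor) dummy_monitor ;;
        *)       return $OCF_ERR_UNIMPLEMENTED ;;
    esac
}

# Demonstration of the codes a resource manager would see.
dummy_main start
rc=0; dummy_main monitor || rc=$?; echo "monitor after start: $rc"
dummy_main stop
rc=0; dummy_main monitor || rc=$?; echo "monitor after stop: $rc"
# monitor after start: 0
# monitor after stop: 7
```

If `dummy_monitor` returned 1 instead of 7 for a stopped service, the cluster would treat a clean stop as a failure, which is the kind of mismatch the paragraph above warns about.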

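(2) LSB

LSB is one of the most traditional Linux resource standards. On Red Hat's RHEL 6 and earlier (and the corresponding CentOS releases), the start scripts found in /etc/init.d are LSB-style resource control scripts.

LSB scripts are usually supplied by the distribution, and they must comply with the LSB specification before the cluster can use them. An LSB resource can be configured to start at boot, but if it is to be controlled by the cluster resource manager it must not be; the cluster starts it according to policy.

(3) systemd

In recent Linux distributions systemd replaces the traditional SysV-style init process and scripts; in RHEL 7 and CentOS 7, for example, systemd has completely replaced sysvinit. systemd is compatible with sysvinit and LSB-style scripts, so services from older systems work under it without modification. Under systemd a service is no longer a shell script in /etc/init.d but a unit file, which systemd uses to start, stop, and control the service. Pacemaker can manage services of the systemd class.

(4) service

service is a special alias supported by Pacemaker. Because a system may contain several kinds of service (LSB, systemd, and OCF), Pacemaker uses the alias to work out automatically which kind to use on a given cluster node. This is very useful in a cluster that mixes systemd, LSB, and OCF resources: when several classes are present, Pacemaker looks for the script that starts the resource in the order LSB, systemd, Upstart.

Every Pacemaker resource has attributes that determine where its RA script is found and which resource standard it belongs to. Sometimes the same service is installed on one system in different versions or from different sources (a RabbitMQ cluster installer might come from the RabbitMQ community or from a Red Hat package, for example), so that one service corresponds to several resource agents. To tell them apart, the resource attributes specify exactly which one to use when the cluster resource is defined.

A resource's attributes consist of the following:

Resource_id: the user-defined name of the resource.

Standard: the standard the script conforms to; allowed values are OCF, Service, Upstart, Systemd, LSB, and Stonith.

Type: the name of the resource agent; the familiar IPaddr, for example, is a resource Type.

Provider: the OCF specification allows several vendors to supply the same resource agent, and Provider identifies who supplied the script. Most OCF resource agents use heartbeat as their Provider.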
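These attributes are what the colon-separated agent names in pcs commands encode, for example ocf:heartbeat:IPaddr2 or systemd:nfs-server. A small sketch of how such a name splits into its parts (the `parse_agent` helper is made up for this example and expects at least a standard:type pair):

```shell
#!/usr/bin/env bash
# Split a resource agent name into Standard, Provider, and Type.
# OCF agents use standard:provider:type; other standards use standard:type.
parse_agent() {
    local spec=$1 standard rest provider="" type
    standard=${spec%%:*}      # text before the first colon
    rest=${spec#*:}           # text after the first colon
    if [ "$standard" = "ocf" ]; then
        provider=${rest%%:*}
        type=${rest#*:}
    else
        type=$rest
    fi
    echo "standard=${standard} provider=${provider:-none} type=${type}"
}

parse_agent ocf:heartbeat:IPaddr2
parse_agent systemd:nfs-server
# standard=ocf provider=heartbeat type=IPaddr2
# standard=systemd provider=none type=nfs-server
```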

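2. Cluster resource constraints

A cluster is a collection of resources with specific functions. Each resource can provide an independent service, but resources also depend on one another.

If resource B depends on resource A, A must start before B. If A must run on the same node as B to share some service, then A and B must migrate to another node together, as one logical unit, during failover. Pacemaker expresses such relationships as resource constraints, which fall into the following categories:

1. Location constraints: restrict which cluster nodes a resource may run on.

2. Order constraints: define the order in which resources start.

3. Colocation constraints: bind resources together as a logical unit, so that if resource A is on node c then resource B must also be on node c, and A and B fail over together to the same node.

Location example:

Location constraints are useful for restricting the nodes on which a resource runs. In an OpenStack HA cluster, for example, the nova-compute resource should run only on compute nodes, while resources such as nova-api and neutron-server should run only on controller nodes.

First give every node an osprole (OpenStack role) attribute (the attribute name is arbitrary), set to compute on compute nodes and to controller on controller nodes:

    pcs property set --node compute1 osprole=compute
    pcs property set --node compute2 osprole=compute
    pcs property set --node controller1 osprole=controller
    pcs property set --node controller2 osprole=controller
    pcs property set --node controller3 osprole=controller

A Location constraint then restricts nova-compute to the compute nodes:

    pcs constraint location nova-compute-clone rule resource-discovery=exclusive score=0 osprole eq compute

That is, the resource nova-compute-clone runs only on nodes whose osprole equals compute, in other words on the compute nodes.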

Order example:

Order constraints resolve start-up dependencies between resources, which are very common on Linux. In an OpenStack HA cluster, base services such as RabbitMQ and MySQL must start before the core OpenStack services, because those services all use the message queue and the database.

Likewise, in the Neutron networking service, neutron-server must start before the other Neutron agents, because each agent registers with neutron-server when it starts. Order constraints are how Pacemaker handles such dependencies.

For Neutron, the related resources should start in this order: keystone -> neutron-server -> neutron-ovs-cleanup -> neutron-netns-cleanup -> neutron-openvswitch-agent -> neutron-dhcp-agent -> neutron-l3-agent.

These dependencies can be expressed with the following Order constraints:

    pcs constraint order start keystone-clone then neutron-server-api-clone
    pcs constraint order start neutron-server-api-clone then neutron-ovs-cleanup-clone
    pcs constraint order start neutron-ovs-cleanup-clone then neutron-netns-cleanup-clone
    pcs constraint order start neutron-netns-cleanup-clone then neutron-openvswitch-agent-clone
    pcs constraint order start neutron-openvswitch-agent-clone then neutron-dhcp-agent-clone
    pcs constraint order start neutron-dhcp-agent-clone then neutron-l3-agent-clone
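Writing such a chain by hand invites typos in the repeated resource names. As a sketch, a helper can print the pairwise commands from an ordered list; `order_chain` is a name invented for this example, and it only prints the commands (a dry run) rather than executing pcs.

```shell
#!/usr/bin/env bash
# Print the pcs commands that chain a list of resources into start-order
# constraints, one constraint per adjacent pair. Nothing is executed.
order_chain() {
    local prev=$1 next
    shift
    for next in "$@"; do
        echo "pcs constraint order start ${prev} then ${next}"
        prev=$next
    done
}

order_chain keystone-clone neutron-server-api-clone neutron-ovs-cleanup-clone
# pcs constraint order start keystone-clone then neutron-server-api-clone
# pcs constraint order start neutron-server-api-clone then neutron-ovs-cleanup-clone
```

On a cluster node the printed lines could be reviewed first and then piped to sh.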

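Colocation example:

A Colocation constraint places resource B according to the node on which resource A runs. If A and B are colocated and A is already running on node1, B is started on node1 as well; if node1 fails, A and B both move to node2 rather than one of them moving to node3.

In an OpenStack HA cluster, libvirtd-compute is usually colocated with neutron-openvswitch-agent, and nova-compute with libvirtd-compute. The Colocation constraints are configured as follows:

    pcs constraint colocation add nova-compute-clone with libvirtd-compute-clone
    pcs constraint colocation add libvirtd-compute-clone with neutron-openvswitch-agent-compute-clone

Location, Order, and Colocation are the three most important constraints in a Pacemaker cluster. With them, resources that appear independent of one another run in the order and placement that has been configured.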

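3. Cluster resource types

In a Pacemaker cluster, services are configured as cluster resources so that the resource manager can schedule and control them; the resource is the smallest unit of cluster management. Different HA requirements call for different operating modes, such as Active/Active, Active/Passive, Master/Master, and Master/Slave, and configuring them requires Pacemaker's advanced resource types: resource groups, clones, and multi-state resources.

(1) Resource groups

It is often necessary to operate on several resources as one group, for example to keep related resources on one node or move them to another node together, starting them in a given order and stopping them in the reverse order. To simplify configuring several resources at once, Pacemaker provides an advanced resource type, the resource group, which lets users configure multiple resources together.

(2) Clones

Clones are another advanced resource type. An administrator can clone a resource onto several nodes so that it runs on them in parallel; for example, redundant IP resource instances can run on several nodes, with load balanced across the active instances. In general any resource whose agent supports cloning can be cloned, but only resources designed to run in Active/Active mode should be configured as clones.

Configuring a clone is simple and is usually done when the resource is created. The cloned resource exists on every node of the cluster, and the suffix -clone is appended to form its new resource ID. The syntax for creating and cloning a resource is:

    pcs resource create resource_id standard:provider:type|type [resource options] --clone [meta clone_options]

After cloning, the resource ID is no longer the resource_id given in the command but resource_id-clone, and the resource exists on all cluster nodes.

A resource group can also be cloned, but not in a single command: the group must be created first and then cloned. The syntax is:

    pcs resource clone resource_id | group_name [clone_options]...

The cloned resource is named resource_id-clone or group_name-clone. Clone options (clone_options) can be given in the command; the common ones are:

priority / target-role / is-managed: these three attributes are inherited from the resource being cloned.

clone-max: how many copies of the resource to start; defaults to the number of nodes in the cluster.

clone-node-max: how many copies of the resource can be started on a single node; the default is 1.

notify: whether to notify all other copies before and after a copy is started or stopped; allowed values are false and true, and the default is false.

globally-unique: whether each copy of the clone performs a different function; allowed values are false and true. If false, the copies behave identically wherever they run, so at most one copy can be active per node. If true, copies running on the same node or on different nodes are distinct from one another. The default is true if clone-node-max is greater than 1 (several copies per node), otherwise false.

ordered: whether copies on different nodes are started in series; true starts them one after another, false starts them in parallel. The default is false.

interleave: changes how ordering constraints between clones (or master resources) behave. If false, a copy of the dependent clone must wait until every copy of the clone it depends on has started or stopped; if true, it only has to wait for the copy on its own node. The default is false.

Normally a clone has one copy on every online node, so the number of copies equals the number of nodes. An administrator can use clone-max to set a smaller number, in which case Location constraints are needed to assign the copies to particular nodes. Location constraints for clones are set in the same way as for ordinary resources.

For example, to restrict the clone web-clone to node1:

    pcs constraint location web-clone prefers node1

(3) Multi-state resources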

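Multi-state resources are Pacemaker's mechanism for the Master/Master and Master/Slave HA modes. They are a special kind of clone in which each instance is, at any moment, in either the Master or the Slave state.

A multi-state resource is created like an ordinary resource, with the addition of the master option. The syntax for a Master/Slave resource is:

    pcs resource create resource_id standard:provider:type|type [resource options] --master [meta master_options]

Being a special clone, a multi-state resource by default exists on all cluster nodes once created, and its name takes the form resource_id-master.

Note that in Master/Slave mode, although only one node ends up with the resource in the Master state and all others are Slave, the resource starts in the Slave state on every node; the resource manager then promotes the instance on one node to Master.

An existing resource or resource group can also be turned into a multi-state resource:

    pcs resource master master/slave_name resource_id|group_name [master_options]

Master options (master_options) set the properties of the multi-state resource; the two main ones are:

master-max: how many copies can be promoted from Slave to Master; the default is 1, that is, a single Master.

master-node-max: how many copies can be promoted to Master on a single node; the default is 1.

By default a multi-state resource gets one copy on each online node. To run fewer copies than there are nodes, use Location constraints to choose the nodes; the syntax is the same as for ordinary resources. In Ordering constraints for multi-state resources, the action can be specified as promote or demote:

    pcs constraint order [action] resource_id then [action] resource_id [options]

promote raises the resource (resource_id) to the Master state and demote lowers it to the Slave state; Ordering constraints thus control the order in which resources are promoted or demoted.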

V. Pacemaker Cluster Deployment

Reference: ClusterLabs > Pacemaker Documentation

1) Basic installation and configuration of the Pacemaker cluster

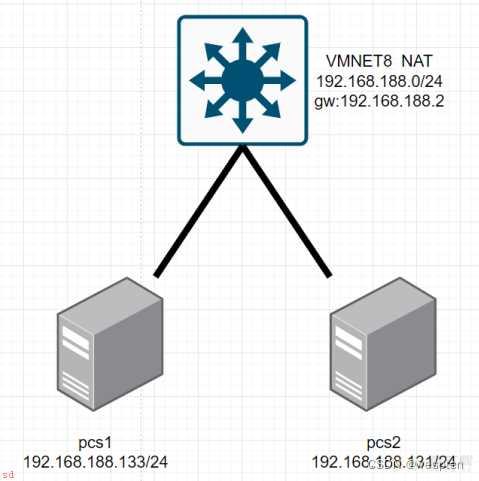

    # CentOS Stream 8
    hostnamectl set-hostname pcs1    # on the first node
    hostnamectl set-hostname pcs2    # on the second node

    # Firewall and SELinux
    sed -i 's/SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config
    setenforce 0
    systemctl disable firewalld --now

    rm -rf /etc/yum.repos.d/*
    cat > /etc/yum.repos.d/iso.repo <<END
    [AppStream]
    name=AppStream
    baseurl=http://mirrors.163.com/centos/8-stream/AppStream/x86_64/os/
    enabled=1
    gpgcheck=0
    [BaseOS]
    name=BaseOS
    baseurl=http://mirrors.163.com/centos/8-stream/BaseOS/x86_64/os/
    enabled=1
    gpgcheck=0
    [epel]
    name=epel
    baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/8/Everything/x86_64/
    gpgcheck=0
    [HA]
    name=HA
    baseurl=http://mirrors.163.com/centos/8-stream/HighAvailability/x86_64/os/
    gpgcheck=0
    END
    yum clean all
    yum makecache
    # yum update
    yum install -y pacemaker pcs psmisc policycoreutils-python3

    # Start the pcsd service
    systemctl enable pcsd.service --now

    # Set a password for the hacluster user
    echo "yydsmm" | passwd hacluster --stdin

    # Name resolution
    cat > /etc/hosts <<END
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.188.133 pcs1
    192.168.188.131 pcs2
    END

    # Authenticate the nodes and set up the cluster, which puts the corosync
    # configuration in place (run on one node only)
    pcs host auth pcs1 pcs2 -u hacluster -p yydsmm
    pcs cluster setup mycluster pcs1 pcs2

    # Start the cluster (run on one node only). This starts the pacemaker and
    # corosync services but does not enable them at boot.
    pcs cluster start --all
    # Starting automatically at boot can lead to split-brain, so it is not enabled

    # Check the cluster status
    pcs status

    # Disable fencing
    pcs property set stonith-enabled=false

2) Deploying Active/Passive mode with Pacemaker

    pcs resource create liuzong ocf:heartbeat:IPaddr2 ip=192.168.188.200 cidr_netmask=24 op monitor interval=30s

    # Set resource stickiness
    pcs resource defaults update resource-stickiness=100

3) Deploying httpd

    # Install httpd on both nodes
    yum install -y httpd

    # Create the web page
    cat <<-END >/var/www/html/index.html
    <html>
    <body>My Test Site - $(hostname)</body>
    </html>
    END

    # Enable the Apache status URL
    cat <<-END >/etc/httpd/conf.d/status.conf
    <Location /server-status>
    SetHandler server-status
    Require local
    </Location>
    END

    # Let Pacemaker manage Apache
    pcs resource create WebSite ocf:heartbeat:apache configfile=/etc/httpd/conf/httpd.conf statusurl="http://localhost/server-status" op monitor interval=1min
    pcs constraint colocation add WebSite with liuzong INFINITY
    pcs constraint order liuzong then WebSite
    pcs constraint location WebSite prefers pcs2=50
    pcs resource move WebSite pcs2

4) Pacemaker + DRBD + NFS deployment

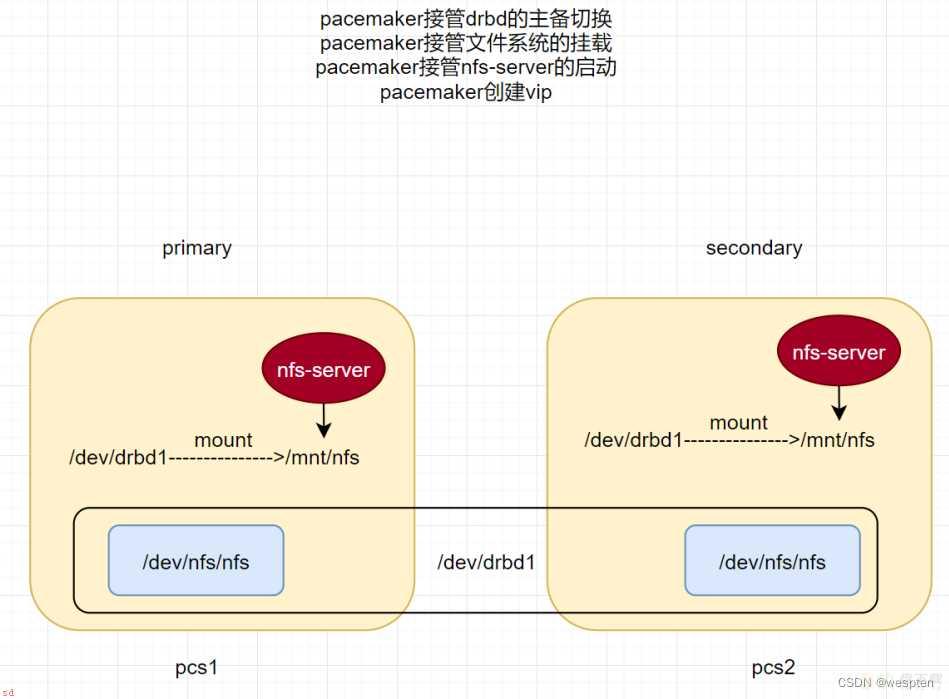

    pcs property set stonith-enabled=false
    pcs resource defaults update resource-stickiness=100
    yum -y install drbd-pacemaker

    # Save the current cluster configuration (CIB) to a file
    pcs cluster cib drbd_cfg

    # DRBD as a promotable resource
    pcs -f drbd_cfg resource create nfsdrbd ocf:linbit:drbd drbd_resource=nfs \
        op monitor interval="29s" role="Master" \
        op monitor interval="31s" role="Slave" \
        op start interval="0s" timeout="240s" \
        op stop interval="0s" timeout="100s"
    pcs -f drbd_cfg resource promotable nfsdrbd promoted-max=1 promoted-node-max=1 clone-max=2 clone-node-max=1 notify=true

    # File system on top of the DRBD device
    pcs -f drbd_cfg resource create drbdfsnfs Filesystem device="/dev/drbd1" directory="/mnt/nfs/" fstype="xfs" \
        op start interval="0s" timeout="60s" \
        op stop interval="0s" timeout="60s" \
        op monitor interval="20s" timeout="40s"
    pcs -f drbd_cfg constraint colocation add drbdfsnfs with nfsdrbd-clone INFINITY with-rsc-role=Master
    pcs -f drbd_cfg constraint order promote nfsdrbd-clone then start drbdfsnfs

    # NFS server
    pcs -f drbd_cfg resource create NFS-Server systemd:nfs-server \
        op monitor interval="20s" \
        op start interval="0s" timeout="40s" \
        op stop interval="0s" timeout="20s"
    pcs -f drbd_cfg constraint colocation add NFS-Server with drbdfsnfs
    pcs -f drbd_cfg constraint order drbdfsnfs then NFS-Server

    # NFS export
    pcs -f drbd_cfg resource create exportfsdir ocf:heartbeat:exportfs directory="/mnt/nfs" fsid="1" clientspec="192.168.188.0/24" unlock_on_stop="1" options="rw,sync,no_root_squash" \
        op start interval="0s" timeout="40s" \
        op stop interval="0s" timeout="120s" \
        op monitor interval="10s" timeout="20s"
    pcs -f drbd_cfg constraint colocation add NFS-Server with exportfsdir
    pcs -f drbd_cfg constraint order exportfsdir then NFS-Server

    # Cluster IP
    pcs -f drbd_cfg resource create nfsClusterIP ocf:heartbeat:IPaddr2 ip=192.168.188.200 cidr_netmask=24 op monitor interval=30s
    pcs -f drbd_cfg constraint order exportfsdir then nfsClusterIP
    pcs -f drbd_cfg constraint colocation add nfsClusterIP with exportfsdir
    pcs -f drbd_cfg constraint location nfsdrbd-clone prefers pcs1=INFINITY pcs2=50

    # Push the edited configuration to the cluster
    pcs cluster cib-push drbd_cfg --config

VII. KVM HA with Pacemaker

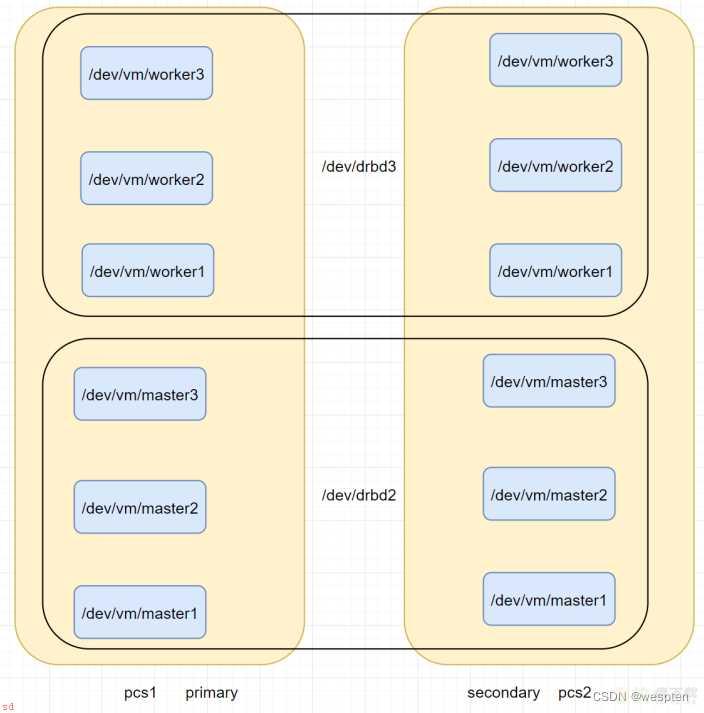

Network topology:

1) Disk allocation and DRBD creation

    pvcreate /dev/sdc
    vgcreate vm /dev/sdc
    lvcreate -n master -l 50%FREE vm
    lvcreate -n worker -l 100%FREE vm

    # Resource configuration files
    cat > /etc/drbd.d/master.res <<END
    resource master {
        meta-disk internal;
        device /dev/drbd2;
        net {
            verify-alg sha256;
        }
        on pcs1 {
            address 192.168.188.133:7791;
            disk /dev/vm/master;
        }
        on pcs2 {
            address 192.168.188.131:7791;
            disk /dev/vm/master;
        }
    }
    END
    cat > /etc/drbd.d/worker.res <<END
    resource worker {
        meta-disk internal;
        device /dev/drbd3;
        net {
            verify-alg sha256;
        }
        on pcs1 {
            address 192.168.188.133:7792;
            disk /dev/vm/worker;
        }
        on pcs2 {
            address 192.168.188.131:7792;
            disk /dev/vm/worker;
        }
    }
    END
    drbdadm create-md master
    drbdadm create-md worker
    drbdadm up master
    drbdadm up worker

    # On pcs1
    drbdadm primary master --force
    drbdadm primary worker --force

    # Format /dev/drbd2 and /dev/drbd3
    mkfs.xfs /dev/drbd2
    mkfs.xfs /dev/drbd3

2) Managing DRBD with Pacemaker

    # The mount points must be created on both nodes
    mkdir /mnt/master /mnt/worker

    # Same steps for both DRBD resources: master uses /dev/drbd2, worker uses /dev/drbd3
    for res in master worker; do
        case $res in
            master) dev=/dev/drbd2 ;;
            worker) dev=/dev/drbd3 ;;
        esac
        pcs cluster cib drbd_cfg
        pcs -f drbd_cfg resource create ${res}drbd ocf:linbit:drbd drbd_resource=${res} \
            op monitor interval="29s" role="Master" \
            op monitor interval="31s" role="Slave" \
            op start interval="0s" timeout="240s" \
            op stop interval="0s" timeout="100s"
        pcs -f drbd_cfg resource promotable ${res}drbd promoted-max=1 promoted-node-max=1 clone-max=2 clone-node-max=1 notify=true
        pcs -f drbd_cfg resource create drbdfs${res} Filesystem device="$dev" directory="/mnt/${res}/" fstype="xfs" \
            op start interval="0s" timeout="60s" \
            op stop interval="0s" timeout="60s" \
            op monitor interval="20s" timeout="40s"
        pcs -f drbd_cfg constraint colocation add drbdfs${res} with ${res}drbd-clone INFINITY with-rsc-role=Master
        pcs -f drbd_cfg constraint order promote ${res}drbd-clone then start drbdfs${res}
        pcs cluster cib-push drbd_cfg --config
    done

    pcs constraint location masterdrbd-clone prefers pcs1=INFINITY pcs2=50
    pcs constraint location workerdrbd-clone prefers pcs1=INFINITY pcs2=50

3) Deploying VMs on pcs1

    # Install KVM (both nodes; CPU virtualization must be enabled)
    yum -y install qemu-kvm libvirt libvirt-client libguestfs-tools virt-install
    systemctl enable libvirtd --now

    # On the primary node
    wget https://cloud.centos.org/centos/8-stream/x86_64/images/CentOS-Stream-GenericCloud-8-20220125.1.x86_64.qcow2
    [root@pcs1 ~]# mv CentOS-Stream-GenericCloud-8-20220125.1.x86_64.qcow2 /mnt/master/
    [root@pcs1 ~]# cd /mnt/master/
    virt-customize -a ./CentOS-Stream-GenericCloud-8-20220125.1.x86_64.qcow2 --root-password password:redhat123 --selinux-relabel
    [root@pcs1 master]# mv CentOS-Stream-GenericCloud-8-20220125.1.x86_64.qcow2 os.qcow2
    for i in {1..3}
    do
        cp /mnt/master/os.qcow2 /mnt/master/master${i}.qcow2
        cp /mnt/master/os.qcow2 /mnt/worker/worker${i}.qcow2
    done

    # Create the bridge
    nmcli con add con-name vm-bridge ifname vm-bridge type bridge ipv4.method disabled ipv6.method ignore
    nmcli con add con-name ens33-slave ifname ens33 type ethernet slave-type bridge master vm-bridge
    nmcli con up ens33-slave
    [root@pcs1 ~]# bridge link
    4: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master vm-bridge state learning priority 32 cost 100

    # Same steps for interface ens38
    nmcli con add con-name vm-bridge ifname vm-bridge type bridge ipv4.method disabled ipv6.method ignore
    nmcli con add con-name ens38-slave ifname ens38 type ethernet slave-type bridge master vm-bridge
    nmcli con up ens38-slave

    # master VMs get 1 vCPU and 2 GB of memory; worker VMs get 1 vCPU and 1 GB
    for i in {1..3}
    do
        virt-install --name master${i} --memory 2048 --vcpus 1 --import --os-variant centos-stream8 --disk path=/mnt/master/master${i}.qcow2,bus=virtio --network bridge=vm-bridge,model=virtio --noautoconsole
    done
    for i in {1..3}
    do
        virt-install --name worker${i} --memory 1024 --vcpus 1 --import --os-variant centos-stream8 --disk path=/mnt/worker/worker${i}.qcow2,bus=virtio --network bridge=vm-bridge,model=virtio --noautoconsole
    done

    # Deploying a VM generates an XML domain description for QEMU, which needs to be saved
    [root@pcs1 ~]# ls /etc/libvirt/qemu/
    master1.xml master2.xml master3.xml networks worker1.xml worker2.xml worker3.xml

    # If the XML files are not saved, they are deleted when the VM is destroyed and then undefined
    for i in {1..3}
    do
        cp /etc/libvirt/qemu/master${i}.xml /mnt/master/
        cp /etc/libvirt/qemu/worker${i}.xml /mnt/worker/
    done
    for i in $(virsh list | grep -i running | awk '{print $2}')
    do
        virsh destroy $i
        virsh undefine $i
    done
    [root@pcs1 ~]# ls /etc/libvirt/qemu/
    networks

4) Managing the KVM virtual machines with Pacemaker

    pcs resource describe ocf:heartbeat:VirtualDomain
    pcs cluster cib drbd_cfg

    # One VirtualDomain resource per VM, tied to the file system that holds its image
    for role in master worker; do
        for i in 1 2 3; do
            pcs -f drbd_cfg resource create k8s-${role}${i} ocf:heartbeat:VirtualDomain \
                hypervisor="qemu:///system" config="/mnt/${role}/${role}${i}.xml" \
                op start timeout="240s" on-fail=restart \
                op stop timeout="120s" \
                op monitor timeout="30s" interval="30"
            pcs -f drbd_cfg constraint colocation add k8s-${role}${i} with drbdfs${role}
            pcs -f drbd_cfg constraint order drbdfs${role} then start k8s-${role}${i}
        done
    done
    pcs cluster cib-push drbd_cfg --config

    # To remove the resources again (for example when redoing the setup)
    pcs resource delete k8s-master1 --force
    pcs resource delete k8s-master2 --force
    pcs resource delete k8s-master3 --force
    pcs resource delete k8s-worker1 --force
    pcs resource delete k8s-worker2 --force
    pcs resource delete k8s-worker3 --force

    # Start a resource by hand for troubleshooting
    [root@pcs1 ~]# pcs resource debug-start k8s-master1

VIII. OpenStack HA with Pacemaker + Corosync + HAProxy

1) Preparing the HA cluster environment

- pacemaker && corosync && haproxy

- mariadb && rabbitmq

- openstack controller && compute && cinder

Machine preparation:

Physical host cluster a (172.27.10.11, 172.27.10.12, 172.27.10.13)

pacemaker && corosync && haproxy HA cluster

mariadb && rabbitmq HA cluster

Physical host cluster b (172.27.10.21, 172.27.10.22, 172.27.10.23)

openstack controller HA cluster

Physical host cluster c (172.27.10.31, 172.27.10.32)

openstack compute HA cluster

Physical host cluster d (172.27.10.41, 172.27.10.42)

openstack cinder HA cluster

VIP (172.27.10.51)
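The address plan above can be written down once and reused when filling in /etc/hosts on each machine. The following is a minimal sketch, assuming the hostnames ha1 to ha3 for cluster a (the names this guide uses later); the function name `hosts_entries` is hypothetical and not part of any tool.

```shell
#!/usr/bin/env bash
# Render /etc/hosts lines for one host group.
# Usage: hosts_entries <name-prefix> <ip> [<ip> ...]
# The prefix plus a 1-based index becomes the hostname (ha1, ha2, ...).
hosts_entries() {
    local prefix=$1; shift
    local i=1 ip
    for ip in "$@"; do
        printf '%s %s%d\n' "$ip" "$prefix" "$i"
        i=$((i + 1))
    done
}

# Cluster a from the plan; review the output before appending it to /etc/hosts.
hosts_entries ha 172.27.10.11 172.27.10.12 172.27.10.13
```

The same call with a different prefix and address list would cover the other clusters; the output is only printed, so nothing is changed until it is appended to /etc/hosts by hand.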

2) Building the non-OpenStack clusters

Environment (172.27.10.11, 172.27.10.12, 172.27.10.13)

System: CentOS 7

1. Disable SELinux and the firewall

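    systemctl stop firewalld.service
    systemctl disable firewalld.service
    firewall-cmd --state
    sed -i '/^SELINUX=.*/c SELINUX=disabled' /etc/selinux/config
    # SELINUXTYPE normally stays "targeted"; changing it only goes unnoticed because SELinux is disabled above
    sed -i 's/^SELINUXTYPE=.*/SELINUXTYPE=disabled/g' /etc/selinux/config
    grep --color=auto '^SELINUX' /etc/selinux/config
    setenforce 0

2. Set the hostname on each node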

    # ha1 on the first node; use ha2 and ha3 on the other two
    hostnamectl set-hostname ha1

3. Time synchronization

    /usr/sbin/ntpdate ntp6.aliyun.com
    # Re-sync every 3 minutes from cron
    echo '*/3 * * * * /usr/sbin/ntpdate ntp6.aliyun.com > /dev/null 2>&1' > /tmp/crontab
    crontab /tmp/crontab
    # Passwordless SSH
    #############################
    # Add these entries to /etc/hosts:
    #   172.27.10.11 ha1
    #   172.27.10.12 ha2
    #   172.27.10.13 ha3
    # On ha1:
    ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
    ssh-copy-id -i ~/.ssh/id_rsa.pub root@ha2
    ssh-copy-id -i ~/.ssh/id_rsa.pub root@ha3

4. Upgrade the system to the latest version

    yum update -y

5. Corosync && Pacemaker cluster setup

    # Download the repo file for the pacemaker components (wget is installed first because it is needed here)
    yum install -y wget
    cd /etc/yum.repos.d
    wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-7/network:ha-clustering:Stable.repo
    # Install the required packages
    yum install -y yum-plugin-priorities
    yum install -y openstack-selinux
    yum install -y ntp
    yum install -y pacemaker corosync resource-agents
    yum install -y crmsh cluster-glue

Configure corosync.

Back up corosync.conf before editing it.
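A timestamped copy makes it easy to roll back a bad edit. This is a minimal sketch rather than part of the original procedure; the function name `backup_conf` and the `.bak.<timestamp>` suffix are assumptions chosen for illustration.

```shell
# Copy a config file to <file>.bak.<timestamp>, preserving mode and ownership.
# Prints the path of the backup on success; returns 1 if the file is missing.
backup_conf() {
    file=$1
    [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
    stamp=$(date +%Y%m%d%H%M%S)
    cp -p "$file" "$file.bak.$stamp" && echo "$file.bak.$stamp"
}

# Example (run as root on each node):
# backup_conf /etc/corosync/corosync.conf
```

The same helper works for haproxy.cfg and the other configuration files edited later in this guide.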

    # vi /etc/corosync/corosync.conf
    totem {
        version: 2
        token: 10000
        token_retransmits_before_loss_const: 10
        secauth: off
        rrp_mode: active
        interface {
            ringnumber: 0
            bindnetaddr: 172.27.10.0
            broadcast: yes
            mcastport: 5405
            ttl: 1
        }
        transport: udpu
    }
    nodelist {
        node {
            ring0_addr: 172.27.10.11
        }
        node {
            ring0_addr: 172.27.10.12
        }
        node {
            ring0_addr: 172.27.10.13
        }
    }
    logging {
        fileline: off
        to_stderr: no
        to_logfile: yes
        logfile: /var/log/cluster/corosync.log
        to_syslog: yes
        debug: off
        timestamp: on
        logger_subsys {
            subsys: QUORUM
            debug: off
        }
    }
    quorum {
        provider: corosync_votequorum
        # two_node is intended for two-node clusters; review it for this three-node setup
        two_node: 1
        wait_for_all: 1
        last_man_standing: 1
        last_man_standing_window: 10000
    }

Edit corosync.conf on every node, then start the corosync service on every node:

    systemctl enable corosync.service
    systemctl start corosync.service

Check the corosync status on any node:

    corosync-cmapctl runtime.totem.pg.mrp.srp.members
    runtime.totem.pg.mrp.srp.members.167772172.config_version (u64) = 0
    runtime.totem.pg.mrp.srp.members.167772172.ip (str) = r(0) ip(172.27.10.11)
    runtime.totem.pg.mrp.srp.members.167772172.join_count (u32) = 1
    runtime.totem.pg.mrp.srp.members.167772172.status (str) = joined
    runtime.totem.pg.mrp.srp.members.167772173.config_version (u64) = 0
    runtime.totem.pg.mrp.srp.members.167772173.ip (str) = r(0) ip(172.27.10.12)
    runtime.totem.pg.mrp.srp.members.167772173.join_count (u32) = 2
    runtime.totem.pg.mrp.srp.members.167772173.status (str) = joined
    runtime.totem.pg.mrp.srp.members.167772174.config_version (u64) = 0
    runtime.totem.pg.mrp.srp.members.167772174.ip (str) = r(0) ip(172.27.10.13)
    runtime.totem.pg.mrp.srp.members.167772174.join_count (u32) = 2
    runtime.totem.pg.mrp.srp.members.167772174.status (str) = joined
    # 167772172 is a member id; its IP is 172.27.10.11 and its status is joined
    # 167772173 is a member id; its IP is 172.27.10.12 and its status is joined
    # 167772174 is a member id; its IP is 172.27.10.13 and its status is joined
    # The corosync service status is correct

Start the pacemaker service on every node:

    systemctl enable pacemaker.service
    systemctl start pacemaker.service

Check that the service started:

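    crm_mon
    Stack: corosync
    Current DC: ha1 (167772172) - partition with quorum
    Version: 1.1.12-a14efad
    3 Nodes configured
    0 Resources configured
    Online: [ ha1 ha2 ha3 ]
    # The pacemaker status is healthy. If it is not, split-brain can occur:
    # running crm_mon on ha1, ha2 and ha3 then shows each node as its own Current DC instead of one shared DC.
    # One possible cause is that the firewall is still enabled.
    # Configure corosync and pacemaker
    # Run the "crm configure" commands on any one node:
    crm configure property no-quorum-policy="ignore" pe-warn-series-max="1000" pe-input-series-max="1000" pe-error-series-max="1000" cluster-recheck-interval="5min"
    # The default quorum rule expects an odd number of nodes, at least 3. In a 2-node cluster, if one node fails,
    # quorum is lost, resources are not moved, and the cluster as a whole stays unavailable.
    # no-quorum-policy="ignore" works around this two-node problem, but do not use it in production; production needs at least 3 nodes.
    # pe-warn-series-max, pe-input-series-max and pe-error-series-max set how many policy-engine input files (warnings, normal inputs, errors) are kept.
    # cluster-recheck-interval is how often the cluster re-checks its state.
    # Disable STONITH:
    crm configure property stonith-enabled=false
    # STONITH relies on a fencing device that can power off a node on command. The test environment has none,
    # and unless this option is disabled every crm command prints STONITH errors.
    # Show the configuration:
    crm configure show
    # Once corosync and pacemaker are healthy, create the VIP resource. The VIP here is 172.27.10.51:
    crm configure primitive myvip ocf:heartbeat:IPaddr2 params ip="172.27.10.51" cidr_netmask="24" op monitor interval="30s"

6. HAProxy cluster setup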

    yum install -y haproxy

Back up haproxy.cfg before editing it:

    # vi /etc/haproxy/haproxy.cfg
    global
        chroot /var/lib/haproxy
        daemon
        group haproxy
        maxconn 4000
        pidfile /var/run/haproxy.pid
        user haproxy
    defaults
        log global
        maxconn 4000
        option redispatch
        retries 3
        timeout http-request 10s
        timeout queue 1m
        timeout connect 10s
        timeout client 1m
        timeout server 1m
        timeout check 10s
    listen haproxy_status
        bind 172.27.10.51:6677
        mode http
        log global
        stats enable
        stats refresh 5s
        stats realm Haproxy\ Statistics
        stats uri /haproxy_stats
        stats hide-version
        stats auth admin:admin

Every node needs the same edits to haproxy.cfg.
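Because the same haproxy.cfg has to exist on every node, it can help to generate repeated `listen` blocks instead of typing them by hand. The following is a minimal sketch: `render_listen` is a hypothetical helper, and the block name, ports and check timings in the usage line are placeholder values, not settings from this guide's final configuration.

```shell
# Print an HAProxy "listen" block in TCP mode.
# Usage: render_listen <name> <bind-addr> <front-port> <back-port> <host> [<host> ...]
render_listen() {
    name=$1; addr=$2; fport=$3; bport=$4
    shift 4
    printf 'listen %s\n' "$name"
    printf '    bind %s:%s\n' "$addr" "$fport"
    printf '    mode tcp\n'
    printf '    balance roundrobin\n'
    # One backend line per host; the host name doubles as the server label.
    for host in "$@"; do
        printf '    server %s %s:%s check inter 2000 rise 2 fall 5\n' "$host" "$host" "$bport"
    done
}

# Example: preview a block before appending it to /etc/haproxy/haproxy.cfg
# render_listen demo_cluster 172.27.10.51 8080 8080 ha1 ha2 ha3
```

After changing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax without starting the service.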

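Because the cluster resources are bound to the VIP, the kernel parameters on every node must allow binding to a non-local address:

    echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf
    sysctl -p

If haproxy cannot bind the VIP port at startup, run: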

    setsebool -P haproxy_connect_any=1

Add the haproxy service resource to the cluster:

    crm configure primitive haproxy lsb:haproxy op monitor interval="30s"
    # "lsb:haproxy" refers to the haproxy service.
    ERROR:lsb:haproxy:got no meta-data, does this RA exist?
    ERROR:lsb:haproxy:got no meta-data, does this RA exist?
    ERROR:lsb:haproxy:no such resource agent
    Do you still want to commit (y/n)? n
    # crm does not seem to recognize the "haproxy" service. List the LSB services crm currently knows:
    crm ra list lsb
    netconsole network
    # netconsole and network live in /etc/rc.d/init.d and are the only service scripts CentOS 7 ships by default,
    # so creating a haproxy service script in that directory (on every node) should be enough:
    vi /etc/rc.d/init.d/haproxy
    # Contents:
    #!/bin/bash
    case "$1" in
    start)
        systemctl start haproxy.service
        ;;
    stop)
        systemctl stop haproxy.service
        ;;
    status)
        systemctl status -l haproxy.service
        ;;
    restart)
        systemctl restart haproxy.service
        ;;
    *)
        echo "$1 = start|stop|status|restart"
        ;;
    esac
    # Remember to make it executable:
    chmod 755 /etc/rc.d/init.d/haproxy
    # Check again whether crm recognizes "haproxy":
    crm ra list lsb
    haproxy netconsole network
    # haproxy is now listed and "service haproxy status" works as well; create the haproxy resource again.
    # Check the resource status:
    crm_mon
    Stack: corosync
    Current DC: ha1 (167772172) - partition with quorum
    Version: 1.1.12-a14efad
    3 Nodes configured
    2 Resources configured
    Online: [ ha1 ha2 ha3 ]
    myvip (ocf::heartbeat:IPaddr2): Started ha1
    haproxy (lsb:haproxy): Started ha2
    # The haproxy resource currently runs on ha2; on ha2 the service status is active:
    systemctl status -l haproxy.service
    # Require HAProxy and the VIP to run on the same node:
    crm configure colocation haproxy-with-vip INFINITY: haproxy myvip
    # Require the VIP to be taken over before HAProxy starts:
    crm configure order haproxy-after-vip mandatory: myvip haproxy
    # Checking again shows the VIP and haproxy on the same node:
    Stack: corosync
    Current DC: ha1 (167772172) - partition with quorum
    Version: 1.1.12-a14efad
    3 Nodes configured
    2 Resources configured
    Online: [ ha1 ha2 ha3 ]
    myvip (ocf::heartbeat:IPaddr2): Started ha1
    haproxy (lsb:haproxy): Started ha1
    # Test failover of haproxy and the VIP when a node goes down
    crm node standby   # put the node into standby
    crm node online    # bring the node back online

7. MariaDB Galera cluster setup

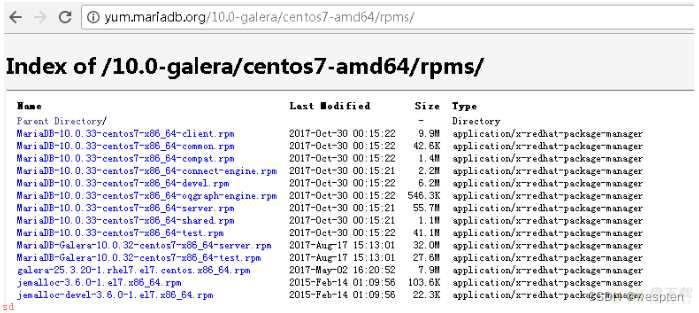

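I did not configure a yum repository for MariaDB on CentOS. Instead, I downloaded the required RPM packages directly from the MariaDB repository (Index of /10.0-galera/centos7-amd64/rpms/). The version chosen is the 10.0 series.

The main packages needed are: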

MariaDB-10.0.33-centos7-x86_64-client.rpm

MariaDB-10.0.33-centos7-x86_64-common.rpm

MariaDB-10.0.33-centos7-x86_64-devel.rpm

galera-25.3.20-1.rhel7.el7.centos.x86_64.rpm

MariaDB-Galera-10.0.32-centos7-x86_64-server.rpm
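Since the packages are downloaded by hand rather than pulled from a repository, it is worth confirming that every file is present before installing. A minimal sketch follows; `missing_rpms` is a hypothetical helper, and the download directory in the usage line is an assumption.

```shell
# Report which of the given files are missing from a directory.
# Usage: missing_rpms <dir> <file> [<file> ...]
# Prints one "missing: <file>" line per absent file and returns 1 if any is absent.
missing_rpms() {
    dir=$1; shift
    rc=0
    for f in "$@"; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $f"
            rc=1
        fi
    done
    return $rc
}

# Example, assuming the RPMs were downloaded to the current directory:
# missing_rpms . \
#     MariaDB-10.0.33-centos7-x86_64-client.rpm \
#     MariaDB-10.0.33-centos7-x86_64-common.rpm \
#     MariaDB-10.0.33-centos7-x86_64-devel.rpm \
#     galera-25.3.20-1.rhel7.el7.centos.x86_64.rpm \
#     MariaDB-Galera-10.0.32-centos7-x86_64-server.rpm
```

Running the check on each of the three nodes before the install step avoids a half-installed node when one download was skipped.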

Update the local yum packages before installing, because the mariadb-libs package that ships with CentOS is too old for the common package:

    yum install -y xinetd rsync expect
    yum update -y || yum remove mariadb-libs
    yum install -y MariaDB-10.0.33-centos7-x86_64-common.rpm
    yum install -y MariaDB-10.0.33-centos7-x86_64-client.rpm
    yum install -y MariaDB-10.0.33-centos7-x86_64-devel.rpm
    yum install -y galera-25.3.20-1.rhel7.el7.centos.x86_64.rpm
    yum install -y MariaDB-Galera-10.0.32-centos7-x86_64-server.rpm
    # Start MariaDB and initialize it on all 3 nodes
    service mysql start
    # Answer mysql_secure_installation automatically through expect (sets the root password to "root")
    [[ -f /usr/bin/expect ]] || { yum install expect -y; }
    /usr/bin/expect << EOF
    set timeout 30
    spawn mysql_secure_installation
    expect {
        "enter for none" { send "\r"; exp_continue}
        "Y/n" { send "Y\r" ; exp_continue}
        "password:" { send "root\r"; exp_continue}
        "new password:" { send "root\r"; exp_continue}
        "Y/n" { send "Y\r" ; exp_continue}
        eof { exit }
    }
    EOF
    # Create a haproxy user in MySQL with an empty password
    # Edit the MariaDB configuration to enable Galera: append the settings below to the [mysqld] section of server.cnf
    vi /etc/my.cnf.d/server.cnf
    ################################
    ......
    [mysqld]
    ......
    binlog_format=ROW
    default-storage-engine=innodb
    innodb_autoinc_lock_mode=2
    innodb_locks_unsafe_for_binlog=1
    query_cache_size=0
    query_cache_type=0
    bind-address=ha1
    wsrep_provider=/usr/lib64/galera/libgalera_smm.so
    wsrep_cluster_name="my_wsrep_cluster"
    wsrep_cluster_address="gcomm://ha1,ha2,ha3"
    wsrep_slave_threads=1
    wsrep_certify_nonPK=1
    wsrep_max_ws_rows=131072
    wsrep_max_ws_size=1073741824
    wsrep_debug=0
    wsrep_convert_LOCK_to_trx=0
    wsrep_retry_autocommit=1
    wsrep_auto_increment_control=1
    wsrep_drupal_282555_workaround=0
    wsrep_causal_reads=0
    wsrep_notify_cmd=
    wsrep_sst_method=rsync
    wsrep_sst_auth=root:root
    ......
    ################################
    # On one node, start MariaDB with --wsrep-new-cluster
    mysqld_safe --defaults-file=/etc/my.cnf.d/server.cnf --user=mysql --wsrep-new-cluster &
    # On the other nodes, start MariaDB normally
    mysqld_safe --defaults-file=/etc/my.cnf.d/server.cnf --user=mysql &
    # On any node, check the cluster status; wsrep_cluster_size = 3 means the cluster is healthy
    MariaDB [(none)]> SHOW STATUS LIKE 'wsrep_cluster_size';
    +--------------------+-------+
    | Variable_name      | Value |
    +--------------------+-------+
    | wsrep_cluster_size | 3     |
    +--------------------+-------+
    # Create the OpenStack databases and users
    create database keystone;
    grant all privileges on keystone.* to 'keystone'@'localhost' identified by 'keystone';
    grant all privileges on keystone.* to 'keystone'@'%' identified by 'keystone';
    create database glance;
    grant all privileges on glance.* to 'glance'@'localhost' identified by 'glance';
    grant all privileges on glance.* to 'glance'@'%' identified by 'glance';
    create database nova;
    grant all privileges on nova.* to 'nova'@'localhost' identified by 'nova';
    grant all privileges on nova.* to 'nova'@'%' identified by 'nova';
    create database nova_api;
    grant all privileges on nova_api.* to 'nova'@'localhost' identified by 'nova';
    grant all privileges on nova_api.* to 'nova'@'%' identified by 'nova';
    create database nova_cell0;
    grant all privileges on nova_cell0.* to 'nova'@'localhost' identified by 'nova';
    grant all privileges on nova_cell0.* to 'nova'@'%' identified by 'nova';
    create database neutron;
    grant all privileges on neutron.* to 'neutron'@'localhost' identified by 'neutron';
    grant all privileges on neutron.* to 'neutron'@'%' identified by 'neutron';
    create database cinder;
    grant all privileges on cinder.* to 'cinder'@'localhost' identified by 'cinder';
    grant all privileges on cinder.* to 'cinder'@'%' identified by 'cinder';
    flush privileges;
    # Configure haproxy
    # Append MySQL support to the haproxy configuration on every node
    echo '
    # mariadb galera
    listen mariadb_cluster
        mode tcp
        bind myvip:3306
        balance leastconn
        option mysql-check user haproxy
        server ha1 ha1:3306 weight 1 check inter 2000 rise 2 fall 5
        server ha2 ha2:3306 weight 1 check inter 2000 rise 2 fall 5
        server ha3 ha3:3306 weight 1 check inter 2000 rise 2 fall 5
    ' >> /etc/haproxy/haproxy.cfg
    # If split-brain occurs, with:
    #   ERROR 1047 (08S01): WSREP has not yet prepared node for application use
    # run:
    set global wsrep_provider_options="pc.bootstrap=true";

8. RabbitMQ cluster setup

    # Install and configure the RabbitMQ cluster
    yum install rabbitmq-server erlang socat -y
    # systemctl enable rabbitmq-server.service
    # echo '
    # NODE_PORT=5672
    # NODE_IP_ADDRESS=0.0.0.0' > /etc/rabbitmq/rabbitmq-env.conf   # set the port
    systemctl start rabbitmq-server.service
    rabbitmq-plugins enable rabbitmq_management   # enable the web plugin on port 15672
    netstat -antp | egrep '567'
    # On ha1:
    # Copy the Erlang cookie to the other nodes so that all nodes authenticate with the same cookie
    scp /var/lib/rabbitmq/.erlang.cookie ha2:/var/lib/rabbitmq/
    scp /var/lib/rabbitmq/.erlang.cookie ha3:/var/lib/rabbitmq/
    # rabbitmqctl status
    # Use disk mode
    systemctl stop rabbitmq-server.service
    pkill beam.smp
    rabbitmqctl stop
    rabbitmq-server -detached
    rabbitmqctl cluster_status   # check the status
    # On ha2 and ha3, join the cluster on ha1
    systemctl stop rabbitmq-server.service
    pkill beam.smp
    rabbitmq-server -detached
    rabbitmqctl stop_app
    rabbitmqctl join_cluster rabbit@ha1
    rabbitmqctl start_app
    rabbitmqctl cluster_status
    # On any one node:
    rabbitmqctl set_policy ha-all '^' '{"ha-mode":"all"}'   # mirror all queues
    rabbitmqctl set_cluster_name RabbitMQ-Cluster           # rename the cluster
    rabbitmqctl cluster_status                              # check the cluster status
    Cluster status of node rabbit@ha1...
    [{nodes,[{disc,[rabbit@ha1,rabbit@ha2,rabbit@ha3]}]},
     {running_nodes,[rabbit@ha3,rabbit@ha2,rabbit@ha1]},
     {cluster_name,<<"RabbitMQ-Cluster">>},
     {partitions,[]},
     {alarms,[{rabbit@ha3,[]},
              {rabbit@ha2,[]},
              {rabbit@ha1,[]}]}]
    # To reset a node: rabbitmqctl stop_app ; rabbitmqctl reset
    # rabbitmq-plugins list   # list the available plugins
    # systemctl restart rabbitmq-server.service
    # Configure haproxy
    # Append RabbitMQ support to the haproxy configuration on every node
    echo '
    #RabbitMQ
    listen RabbitMQ-Server
        bind myvip:5673
        mode tcp
        balance roundrobin
        option tcpka
        timeout client 3h
        timeout server 3h
        option clitcpka
        server ha1 ha1:5672 check inter 5s rise 2 fall 3
        server ha2 ha2:5672 check inter 5s rise 2 fall 3
        server ha3 ha3:5672 check inter 5s rise 2 fall 3
    listen RabbitMQ-Web
        bind myvip:15673
        mode tcp
        balance roundrobin
        option tcpka
        server ha1 ha1:15672 check inter 5s rise 2 fall 3
        server ha2 ha2:15672 check inter 5s rise 2 fall 3
        server ha3 ha3:15672 check inter 5s rise 2 fall 3
    ' >> /etc/haproxy/haproxy.cfg

9. Keystone Controller Cluster

    # The databases and grants were already created in SQL
    ################################################
    ## Run the following on all controller nodes (172.27.10.21, 172.27.10.22, 172.27.10.23)
    # Passwordless SSH
    #############################
    # Add controller1, controller2 and controller3 to /etc/hosts
    # On controller1:
    ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
    ssh-copy-id -i ~/.ssh/id_rsa.pub root@controller2
    ssh-copy-id -i ~/.ssh/id_rsa.pub root@controller3
    # Install Keystone
    yum install -y openstack-keystone httpd mod_wsgi memcached python-memcached
    yum install apr apr-util -y
    # Start memcached
    cp /etc/sysconfig/memcached{,.bak}
    sed -i 's/127.0.0.1/0.0.0.0/' /etc/sysconfig/memcached
    systemctl enable memcached.service
    systemctl start memcached.service
    netstat -antp | grep 11211
    # Apache configuration
    #cp /etc/httpd/conf/httpd.conf{,.bak}
    #echo "ServerName controller1" >> /etc/httpd/conf/httpd.conf
    ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/
    # Cluster setting: move Keystone off the default ports 5000 and 35357 (the defaults are used by the cluster VIP)
    cp /usr/share/keystone/wsgi-keystone.conf{,.bak}
    sed -i 's/5000/4999/' /usr/share/keystone/wsgi-keystone.conf
    sed -i 's/35357/35356/' /usr/share/keystone/wsgi-keystone.conf
    # Start Apache HTTP and enable it at boot
    systemctl enable httpd.service
    systemctl restart httpd.service
    netstat -antp | egrep 'httpd'
    # systemctl disable
    # haproxy high availability
    echo '
    #keystone
    listen keystone_admin_cluster
        bind myvip:35357
        #balance source
        option tcpka
        option httpchk
        option tcplog
        server controller1 controller1:35356 check inter 2000 rise 2 fall 5
        server controller2 controller2:35356 check inter 2000 rise 2 fall 5
        server controller3 controller3:35356 check inter 2000 rise 2 fall 5
    listen keystone_public_cluster
        bind myvip:5000
        #balance source
        option tcpka
        option httpchk
        option tcplog
        server controller1 controller1:4999 check inter 2000 rise 2 fall 5
        server controller2 controller2:4999 check inter 2000 rise 2 fall 5
        server controller3 controller3:4999 check inter 2000 rise 2 fall 5
    ' >> /etc/haproxy/haproxy.cfg
    systemctl restart haproxy.service
    netstat -antp | egrep 'haproxy|httpd'
    # In the haproxy web UI all Keystone backends show DOWN at this point; they come UP after the configuration below
    ################################################
    ## Run the following on controller1
    # Keystone configuration
    cp /etc/keystone/keystone.conf{,.bak}   # back up the default configuration
    Keys=$(openssl rand -hex 10)            # generate a random token
    echo $Keys
    echo "keystone $Keys" > ~/openstack.log
    echo "
    [DEFAULT]
    admin_token = $Keys
    verbose = true
    [database]
    connection = mysql+pymysql://keystone:keystone@myvip/keystone
    [token]
    provider = fernet
    driver = memcache
    [cache]
    enabled = true
    backend = oslo_cache.memcache_pool
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    " > /etc/keystone/keystone.conf
    # Initialize the keystone database
    su -s /bin/sh -c "keystone-manage db_sync" keystone
    # Check that the tables were created
    mysql -h myvip -ukeystone -pkeystone -e 'use keystone;show tables;'
    # Initialize the Fernet keys
    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
    # Sync the configuration to the other nodes (scp would change the file attributes)
    rsync -avzP -e 'ssh -p 22' /etc/keystone/* controller2:/etc/keystone/
    rsync -avzP -e 'ssh -p 22' /etc/keystone/* controller3:/etc/keystone/
    # Restart httpd
    systemctl restart httpd.service
    ssh controller2 'systemctl restart httpd.service'
    ssh controller3 'systemctl restart httpd.service'
    # Check that the service responds
    curl http://controller3:35356/v3   # a single node
    curl http://myvip:35357/v3         # the cluster proxy
    # Set up the admin user (and password), the service entity and the API endpoints
    keystone-manage bootstrap --bootstrap-password admin \
        --bootstrap-admin-url http://myvip:35357/v3/ \
        --bootstrap-internal-url http://myvip:5000/v3/ \
        --bootstrap-public-url http://myvip:5000/v3/ \
        --bootstrap-region-id RegionOne
    # Configure haproxy
    # The keystone listen blocks were already appended to haproxy.cfg above; make sure every haproxy node has them (appending them twice creates duplicate sections)
    # Create the OpenStack client environment scripts
    # admin environment script
    echo '
    export OS_PROJECT_DOMAIN_NAME=default
    export OS_USER_DOMAIN_NAME=default
    export OS_PROJECT_NAME=admin
    export OS_USERNAME=admin
    export OS_PASSWORD=admin
    export OS_AUTH_URL=http://myvip:35357/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    ' > ./admin-openstack.sh
    # Test that the script works
    source ./admin-openstack.sh
    openstack token issue
    # Create the service project, then the glance, nova and neutron users, and grant them the admin role
    openstack project create --domain default --description "Service Project" service
    openstack user create --domain default --password=glance glance
    openstack role add --project service --user glance admin
    openstack user create --domain default --password=nova nova
    openstack role add --project service --user nova admin
    openstack user create --domain default --password=neutron neutron
    openstack role add --project service --user neutron admin
    # Create the demo project (regular user, password and role)
    openstack project create --domain default --description "Demo Project" demo
    openstack user create --domain default --password=demo demo
    openstack role create user
    openstack role add --project demo --user demo user
    # demo environment script
    echo '
    export OS_PROJECT_DOMAIN_NAME=default
    export OS_USER_DOMAIN_NAME=default
    export OS_PROJECT_NAME=demo
    export OS_USERNAME=demo
    export OS_PASSWORD=demo
    export OS_AUTH_URL=http://myvip:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    ' > ./demo-openstack.sh
    # Test that the script works
    source ./demo-openstack.sh
    openstack token issue

10. Glance Controller Cluster setup

    # Glance image service cluster
    ############### Run the following on controller1
    # A Glance cluster needs shared storage for the image files; NFS is used here as an example
    # Configure controller1 first, then copy the configuration to controller2 and controller3
    # The Glance database, user and credentials were created earlier
    # Register the service in keystone: create the glance service entity and the API endpoints (public, internal, admin)
    source ./admin-openstack.sh || { echo "Load the admin-openstack.sh environment script created earlier"; exit; }
    openstack service create --name glance --description "OpenStack Image" image
    openstack endpoint create --region RegionOne image public http://myvip:9292
    openstack endpoint create --region RegionOne image internal http://myvip:9292
    openstack endpoint create --region RegionOne image admin http://myvip:9292
    # Install Glance
    yum install -y openstack-glance python-glance
    # Configuration
    cp /etc/glance/glance-api.conf{,.bak}
    cp /etc/glance/glance-registry.conf{,.bak}
    # The default image directory is /var/lib/glance/images/
    Imgdir=/date/glance
    mkdir -p $Imgdir
    chown glance:nobody $Imgdir
    echo "Image directory: $Imgdir"
    echo '#
    [DEFAULT]
    debug = False
    verbose = True
    bind_host = controller1
    bind_port = 9292
    auth_region = RegionOne
    registry_client_protocol = http
    [database]
    connection = mysql+pymysql://glance:glance@myvip/glance
    [keystone_authtoken]
    auth_uri = http://myvip:5000/v3
    auth_url = http://myvip:35357/v3
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = glance
    [paste_deploy]
    flavor = keystone
    [glance_store]
    stores = file,http
    default_store = file
    filesystem_store_datadir = /date/glance
    [oslo_messaging_rabbit]
    rabbit_userid = openstack
    rabbit_password = openstack
    rabbit_durable_queues = true
    rabbit_ha_queues = True
    rabbit_max_retries = 0
    rabbit_port = 5673
    rabbit_hosts = myvip:5673
    #' > /etc/glance/glance-api.conf
    #
    echo '#
    [DEFAULT]
    debug = False
    verbose = True
    bind_host = controller1
    bind_port = 9191
    workers = 2
    [database]
    connection = mysql+pymysql://glance:glance@myvip/glance
    [keystone_authtoken]
    auth_uri = http://myvip:5000/v3
    auth_url = http://myvip:35357/v3
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = glance
    [paste_deploy]
    flavor = keystone
    [oslo_messaging_rabbit]
    rabbit_userid = openstack
    rabbit_password = openstack
    rabbit_durable_queues = true
    rabbit_ha_queues = True
    rabbit_max_retries = 0
    rabbit_port = 5673
    rabbit_hosts = myvip:5673
    #' > /etc/glance/glance-registry.conf
    # Sync the database and check it
    su -s /bin/sh -c "glance-manage db_sync" glance
    mysql -h myvip -u glance -pglance -e 'use glance;show tables;'
    # Start the services and enable them at boot
    systemctl enable openstack-glance-api openstack-glance-registry
    systemctl restart openstack-glance-api openstack-glance-registry
    sleep 3
    netstat -antp | grep python2            # check the service ports
    #netstat -antp | egrep '9292|9191'      # check the service ports
    # Configure haproxy
    # Append Glance support to the haproxy configuration on every node
    echo '
    #glance_api_cluster
    listen glance_api_cluster
        bind myvip:9292
        #balance source
        option tcpka
        option httpchk
        option tcplog
        server controller1 controller1:9292 check inter 2000 rise 2 fall 5
        server controller2 controller2:9292 check inter 2000 rise 2 fall 5
        server controller3 controller3:9292 check inter 2000 rise 2 fall 5
    ' >> /etc/haproxy/haproxy.cfg
    # Image test; the download is sometimes slow
    wget http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img   # download a test image
    # Upload the image in qcow2 disk format and bare container format, and make it public
    source ./admin-openstack.sh
    openstack image create "cirros" --file cirros-0.3.5-x86_64-disk.img --disk-format qcow2 --container-format bare --public
    # Check that the upload succeeded
    openstack image list
    ls $Imgdir
    # Delete an image: glance image-delete <image id>
    ############### Configure the other nodes, controller2 and controller3 ###############
    # The following is also run on controller1
    ############
    # Share the glance images of controller1 with the other nodes over NFS (test environments only)
    # NFS server (CentOS 7)
    yum install nfs-utils rpcbind -y
    echo '/date/glance 172.27.10.0/24(rw,no_root_squash,sync)' >> /etc/exports
    exportfs -r
    systemctl enable rpcbind nfs-server
    systemctl start rpcbind nfs-server
    showmount -e localhost
    # controller2 and controller3 act as NFS clients
    ssh controller2 'systemctl enable rpcbind;systemctl start rpcbind'
    ssh controller2 'mkdir -p /date/glance;mount -t nfs controller1:/date/glance /date/glance'
    ssh controller2 'echo "/usr/bin/mount -t nfs controller1:/date/glance /date/glance">>/etc/rc.local;df -h'
    ssh controller3 'systemctl enable rpcbind;systemctl start rpcbind'
    ssh controller3 'mkdir -p /date/glance;mount -t nfs controller1:/date/glance /date/glance'
    ssh controller3 'echo "/usr/bin/mount -t nfs controller1:/date/glance /date/glance">>/etc/rc.local;df -h'
    ############
    # Install Glance
    ssh controller2 'yum install -y openstack-glance python-glance'
    ssh controller3 'yum install -y openstack-glance python-glance'
    # Sync the controller1 configuration to the other nodes (scp would change the file attributes)
    rsync -avzP -e 'ssh -p 22' /etc/glance/* controller2:/etc/glance/
    rsync -avzP -e 'ssh -p 22' /etc/glance/* controller3:/etc/glance/
    rsync -avzP -e 'ssh -p 22' /etc/haproxy/haproxy.cfg controller2:/etc/haproxy/
    rsync -avzP -e 'ssh -p 22' /etc/haproxy/haproxy.cfg controller3:/etc/haproxy/
    # Adjust the bind host on each node
    ssh controller2 "sed -i '1,10s/controller1/controller2/' /etc/glance/glance-api.conf /etc/glance/glance-registry.conf"
    ssh controller3 "sed -i '1,10s/controller1/controller3/' /etc/glance/glance-api.conf /etc/glance/glance-registry.conf"
    # Start the services
    ssh controller2 'systemctl enable openstack-glance-api openstack-glance-registry'
    ssh controller2 'systemctl restart openstack-glance-api openstack-glance-registry'
    ssh controller3 'systemctl enable openstack-glance-api openstack-glance-registry'
    ssh controller3 'systemctl restart openstack-glance-api openstack-glance-registry'

11. Nova Controller Cluster setup

    # Nova controller node cluster
    ######### Run the following on controller1
    # The Nova databases, user and credentials were created earlier
    source ./admin-openstack.sh || { echo "Load the admin-openstack.sh environment script created earlier"; exit; }
    # Register the service in keystone: create the nova service and API endpoints
    # The nova user was created earlier
    openstack service create --name nova --description "OpenStack Compute" compute
    openstack endpoint create --region RegionOne compute public http://myvip:8774/v2.1
    openstack endpoint create --region RegionOne compute internal http://myvip:8774/v2.1
    openstack endpoint create --region RegionOne compute admin http://myvip:8774/v2.1
    # Create the placement user, service and API endpoints
    openstack user create --domain default --password=placement placement
    openstack role add --project service --user placement admin
    openstack service create --name placement --description "Placement API" placement
    openstack endpoint create --region RegionOne placement public http://myvip:8778
    openstack endpoint create --region RegionOne placement internal http://myvip:8778
    openstack endpoint create --region RegionOne placement admin http://myvip:8778
    ## Install the nova controller packages (the scheduler is included because it is enabled and started below)
    yum install -y openstack-nova-api openstack-nova-conductor openstack-nova-scheduler openstack-nova-novncproxy openstack-nova-placement-api
    #
    cp /etc/nova/nova.conf{,.bak}
    #
    # nova controller configuration (single quotes keep $my_ip literal for nova to interpolate)
    echo '#
    [DEFAULT]
    my_ip = controller1
    use_neutron = True
    osapi_compute_listen = controller1
    osapi_compute_listen_port = 8774
    metadata_listen = controller1
    metadata_listen_port = 8775
    firewall_driver = nova.virt.firewall.NoopFirewallDriver
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:openstack@myvip:5673
    [api_database]
    connection = mysql+pymysql://nova:nova@myvip/nova_api
    [database]
    connection = mysql+pymysql://nova:nova@myvip/nova
    [api]
    auth_strategy = keystone
    [keystone_authtoken]
    auth_uri = http://myvip:5000
    auth_url = http://myvip:35357
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = nova
    [vnc]
    enabled = true
    vncserver_listen = $my_ip
    vncserver_proxyclient_address = $my_ip
    novncproxy_host = $my_ip
    novncproxy_port = 6080
    [glance]
    api_servers = http://myvip:9292
    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp
    [placement]
    os_region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://myvip:35357/v3
    username = placement
    password = placement
    [scheduler]
    discover_hosts_in_cells_interval = 300
    [cache]
    enabled = true
    backend = oslo_cache.memcache_pool
    memcache_servers = controller1:11211,controller2:11211,controller3:11211
    #' > /etc/nova/nova.conf
    echo '
    #Placement API
    <Directory /usr/bin>
       <IfVersion >= 2.4>
          Require all granted
       </IfVersion>
       <IfVersion < 2.4>
          Order allow,deny
          Allow from all
       </IfVersion>
    </Directory>
    ' >> /etc/httpd/conf.d/00-nova-placement-api.conf
    systemctl restart httpd
    # Sync the databases
    su -s /bin/sh -c "nova-manage api_db sync" nova
    su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
    su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
    su -s /bin/sh -c "nova-manage db sync" nova
    # Check the data
    nova-manage cell_v2 list_cells
    mysql -h myvip -u nova -pnova -e 'use nova_api;show tables;'
    mysql -h myvip -u nova -pnova -e 'use nova;show tables;'
    mysql -h myvip -u nova -pnova -e 'use nova_cell0;show tables;'
    # Move the services off the default ports 8774 and 8778, which the cluster VIP uses
    #sed -i 's/8774/9774/' /etc/nova/nova.conf
    sed -i 's/8778/9778/' /etc/httpd/conf.d/00-nova-placement-api.conf
    systemctl restart httpd
    # haproxy high-availability configuration
    echo '
    ##nova_compute
    listen nova_compute_api_cluster
        bind myvip:8774
        balance source
        option tcpka
        option httpchk
        option tcplog
        server controller1 controller1:9774 check inter 2000 rise 2 fall 5
        server controller2 controller2:9774 check inter 2000 rise 2 fall 5
        server controller3 controller3:9774 check inter 2000 rise 2 fall 5
    #Nova-api-metadata
    listen Nova-api-metadata_cluster
        bind myvip:8775
        balance source
        option tcpka
        option httpchk
        option tcplog
        server controller1 controller1:9775 check inter 2000 rise 2 fall 5
        server controller2 controller2:9775 check inter 2000 rise 2 fall 5
        server controller3 controller3:9775 check inter 2000 rise 2 fall 5
    #nova_placement
    listen nova_placement_cluster
        bind myvip:8778
        balance source
        option tcpka
        option tcplog
        server controller1 controller1:9778 check inter 2000 rise 2 fall 5
        server controller2 controller2:9778 check inter 2000 rise 2 fall 5
        server controller3 controller3:9778 check inter 2000 rise 2 fall 5
    ' >> /etc/haproxy/haproxy.cfg
    # Enable at boot
    systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-novncproxy.service
    # Start the services
    systemctl start openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-novncproxy.service
    # List the services
    #nova service-list
    openstack catalog list
    nova-status upgrade check
    openstack compute service list
    ######### Install and configure on controller2
    # Install the nova controller packages
    yum install -y openstack-nova-api openstack-nova-conductor openstack-nova-scheduler openstack-nova-novncproxy openstack-nova-placement-api
    # Sync the controller1 configuration and adjust it
    rsync -avzP -e 'ssh -p 22' controller1:/etc/nova/* /etc/nova/
    rsync -avzP -e 'ssh -p 22' controller1:/etc/httpd/conf.d/00-nova-placement-api.conf /etc/httpd/conf.d/
    rsync -avzP -e 'ssh -p 22' controller1:/etc/haproxy/* /etc/haproxy/
    sed -i '1,9s/controller1/controller2/' /etc/nova/nova.conf
    # Enable at boot and start the nova services, same as above
    # Restart the services
    systemctl restart httpd haproxy
    ######### Install and configure on controller3
    # Same as controller2; only the replacement differs
    sed -i '1,9s/controller1/controller3/' /etc/nova/nova.conf

12. Neutron Controller Cluster setup

    # Neutron controller-node cluster
    # This example uses a public provider network (flat).
    # Official reference: https://docs.openstack.org/neutron/pike/install/controller-install-rdo.html
    # The Neutron database and user credentials were set up earlier.

    ############ Run everything below on controller1
    source ./admin-openstack.sh

    # Create the Neutron service entity and API endpoints
    openstack service create --name neutron --description "OpenStack Networking" network
    openstack endpoint create --region RegionOne network public http://myvip:9696
    openstack endpoint create --region RegionOne network internal http://myvip:9696
    openstack endpoint create --region RegionOne network admin http://myvip:9696

    # Install
    yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge python-neutronclient ebtables ipset

    # Back up the Neutron configuration
    cp /etc/neutron/neutron.conf{,.bak2}
    cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}
    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}
    cp /etc/neutron/dhcp_agent.ini{,.bak}
    cp /etc/neutron/metadata_agent.ini{,.bak}
    cp /etc/neutron/l3_agent.ini{,.bak}

    Netname=ens37   # NIC name

    # Configure
    echo '
    #
    [neutron]
    url = http://myvip:9696
    auth_url = http://myvip:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = neutron
    service_metadata_proxy = true
    metadata_proxy_shared_secret = metadata
    #'>>/etc/nova/nova.conf
    #
    echo '
    [DEFAULT]
    nova_metadata_ip = myvip
    metadata_proxy_shared_secret = metadata
    #'>/etc/neutron/metadata_agent.ini
    #
    echo '#
    [ml2]
    tenant_network_types =
    type_drivers = vlan,flat
    mechanism_drivers = linuxbridge
    extension_drivers = port_security
    [ml2_type_flat]
    flat_networks = provider
    [securitygroup]
    enable_ipset = True
    #vlan
    # [ml2_type_vlan]
    # network_vlan_ranges = provider:3001:4000
    #'>/etc/neutron/plugins/ml2/ml2_conf.ini
    # provider:<NIC name>
    echo '#
    [linux_bridge]
    physical_interface_mappings = provider:'$Netname'
    [vxlan]
    enable_vxlan = false
    #local_ip = 10.2.1.20
    #l2_population = true
    [agent]
    prevent_arp_spoofing = True
    [securitygroup]
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    enable_security_group = True
    #'>/etc/neutron/plugins/ml2/linuxbridge_agent.ini
    #
    echo '#
    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true
    #'>/etc/neutron/dhcp_agent.ini
    #
    echo '
    [DEFAULT]
    bind_port = 9696
    bind_host = controller1
    core_plugin = ml2
    service_plugins =
    #service_plugins = trunk
    #service_plugins = router
    allow_overlapping_ips = true
    transport_url = rabbit://openstack:openstack@myvip
    auth_strategy = keystone
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true
    [keystone_authtoken]
    auth_uri = http://myvip:5000
    auth_url = http://myvip:35357
    memcached_servers = controller1:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = neutron
    [nova]
    auth_url = http://myvip:35357
    auth_plugin = password
    project_domain_id = default
    user_domain_id = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = nova
    [database]
    connection = mysql://neutron:neutron@myvip:3306/neutron
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp
    #'>/etc/neutron/neutron.conf
    #
    echo '
    [DEFAULT]
    interface_driver = linuxbridge
    #'>/etc/neutron/l3_agent.ini
    #
    # Sync the database
    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron
    # Check the data
    mysql -h controller -u neutron -pneutron -e "use neutron;show tables;"

    # HAProxy high-availability configuration
    echo '
    #Neutron_API
    listen Neutron_API_cluster
    bind myvip:9696
    balance source
    option tcpka
    option tcplog
    server controller1 controller1:9696 check inter 2000 rise 2 fall 5
    server controller2 controller2:9696 check inter 2000 rise 2 fall 5
    server controller3 controller3:9696 check inter 2000 rise 2 fall 5
    '>>/etc/haproxy/haproxy.cfg
    systemctl restart haproxy.service
    netstat -antp|grep haproxy

    # Restart the related services
    systemctl restart openstack-nova-api.service

    # Start Neutron
    systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
    systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
    #
    openstack network agent list
    #

    ############ Install and configure on controller2 ############
    # Install
    yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge python-neutronclient ebtables ipset

    # Sync the configuration from controller1 and adjust it
    Node=controller2
    rsync -avzP -e 'ssh -p 22' controller1:/etc/nova/* /etc/nova/
    rsync -avzP -e 'ssh -p 22' controller1:/etc/neutron/* /etc/neutron/
    #sed -i 's/controller1/'$Node'/' /etc/nova/nova.conf
    sed -i 's/controller1/'$Node'/' /etc/neutron/neutron.conf
    rsync -avzP -e 'ssh -p 22' controller1:/etc/haproxy/* /etc/haproxy/

    # Restart the related services
    systemctl restart haproxy openstack-nova-api.service

    # Start Neutron
    systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
    systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

    ######## Install and configure controller3 the same way

13. Dashboard Controller Cluster Setup
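
The HAProxy stanzas that this guide appends to /etc/haproxy/haproxy.cfg for Neutron, the Dashboard, and Cinder all follow one shape: a `listen` section bound to the VIP, plus one `server` line per controller. As a rough sketch only, a small generator can print such a stanza for review before it is appended. The function name `haproxy_listen_block` and its argument order are assumptions made for illustration; they do not come from the original scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide).
# Prints an HAProxy "listen" section for one service to stdout.
# Usage: haproxy_listen_block NAME VIP PORT NODE [NODE...]
haproxy_listen_block() {
    local name=$1 vip=$2 port=$3
    shift 3
    printf '#%s\n' "$name"
    printf 'listen %s_cluster\n' "$name"
    printf '  bind %s:%s\n' "$vip" "$port"
    printf '  balance source\n'
    printf '  option tcpka\n'
    printf '  option tcplog\n'
    local node
    for node in "$@"; do
        # Same health-check parameters the guide uses for every backend.
        printf '  server %s %s:%s check inter 2000 rise 2 fall 5\n' \
            "$node" "$node" "$port"
    done
}

# Preview the Dashboard stanza; nothing is written to disk here.
haproxy_listen_block Dashboard myvip 80 controller1 controller2 controller3
```

Redirecting the output with `>>/etc/haproxy/haproxy.cfg` would reproduce what the scripts do by hand. Printing first makes it easier to spot a wrong port before HAProxy is restarted.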

    # Dashboard cluster
    ##### Install and configure on controller1
    # Install
    yum install openstack-dashboard -y

    # Configure
    cp /etc/openstack-dashboard/local_settings{,.bak}
    #egrep -v '#|^$' /etc/openstack-dashboard/local_settings   # show the default configuration
    Setfiles=/etc/openstack-dashboard/local_settings
    sed -i 's#_member_#user#g' $Setfiles
    sed -i 's#OPENSTACK_HOST = "127.0.0.1"#OPENSTACK_HOST = "controller"#' $Setfiles
    ## Allow access from all hosts
    sed -i "/ALLOWED_HOSTS/cALLOWED_HOSTS = ['*', ]" $Setfiles
    # Uncomment the memcached settings (line numbers depend on the packaged local_settings)
    sed -i '153,158s/#//' $Setfiles
    sed -i '160,164s/.*/#&/' $Setfiles
    sed -i 's#UTC#Asia/Shanghai#g' $Setfiles
    sed -i 's#%s:5000/v2.0#%s:5000/v3#' $Setfiles
    sed -i '/ULTIDOMAIN_SUPPORT/cOPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True' $Setfiles
    sed -i 's/^#OPENSTACK_KEYSTONE_DEFAULT/OPENSTACK_KEYSTONE_DEFAULT/' $Setfiles
    echo '
    #set
    OPENSTACK_API_VERSIONS = {
        "identity": 3,
        "image": 2,
        "volume": 2,
    }
    #'>>$Setfiles
    #
    systemctl restart httpd

    # HAProxy high-availability configuration
    echo '
    #Dashboard
    listen Dashboard_cluster
    bind myvip:80
    balance source
    option tcpka
    option tcplog
    server controller1 controller1:80 check inter 2000 rise 2 fall 5
    server controller2 controller2:80 check inter 2000 rise 2 fall 5
    server controller3 controller3:80 check inter 2000 rise 2 fall 5
    '>>/etc/haproxy/haproxy.cfg
    systemctl restart haproxy.service
    netstat -antp|grep haproxy

    # Access:
    # http://myvip/dashboard/

    ######### Configure the other nodes: controller2, controller3
    # Sync the controller1 configuration to the other nodes
    rsync -avzP -e 'ssh -p 22' /etc/openstack-dashboard/local_settings controller2:/etc/openstack-dashboard/
    rsync -avzP -e 'ssh -p 22' /etc/openstack-dashboard/local_settings controller3:/etc/openstack-dashboard/

    # Restart httpd
    ssh controller2 "systemctl restart httpd"
    ssh controller3 "systemctl restart httpd"

14. Cinder Controller Cluster Setup
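
When the Cinder configuration is copied from controller1 to another controller, the script in this section rewrites `controller1` only within lines 1 to 8 of cinder.conf. The likely reason is that the listen address near the top must change per node, while the `memcached_servers` list further down also contains `controller1` and must stay intact. A line-number range breaks as soon as the file layout shifts, so the sketch below shows one alternative: rewrite a single option by key name. The helper name `set_conf_option` is hypothetical, and the sketch assumes GNU sed and values that contain neither `|` nor `&`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide).
# Replaces the value of one "key = value" line in an INI-style file
# and leaves every other line untouched.
# Usage: set_conf_option FILE KEY VALUE
set_conf_option() {
    local file=$1 key=$2 value=$3
    sed -i "s|^\(${key}[[:space:]]*=[[:space:]]*\).*|\1${value}|" "$file"
}

# Demonstration on a throwaway copy rather than /etc/cinder/cinder.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[DEFAULT]
osapi_volume_listen = controller1
[keystone_authtoken]
memcached_servers = controller1:11211,controller2:11211,controller3:11211
EOF
set_conf_option "$conf" osapi_volume_listen controller2
cat "$conf"
rm -f "$conf"
```

Only the `osapi_volume_listen` line changes in the demonstration; the memcached list still names all three controllers.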

    # Install and configure the Cinder API service on the controller nodes
    # The cinder-volume service is installed on the storage node later
    # Controller nodes run the cinder-api and cinder-scheduler services
    ######################################################
    # Run the following on the controller node
    # Create the Cinder database and user
    #mysql -u root -p
    create database cinder;
    grant all privileges on cinder.* to 'cinder'@'localhost' identified by 'cinder';
    grant all privileges on cinder.* to 'cinder'@'%' identified by 'cinder';
    flush privileges;exit;

    # Create the cinder user, services, and API endpoints in Keystone
    source ./admin-openstack.sh
    openstack user create --domain default --password=cinder cinder
    openstack role add --project service --user cinder admin
    openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2
    openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3
    openstack endpoint create --region RegionOne volumev2 public http://myvip:8776/v2/%\(project_id\)s
    openstack endpoint create --region RegionOne volumev2 internal http://myvip:8776/v2/%\(project_id\)s
    openstack endpoint create --region RegionOne volumev2 admin http://myvip:8776/v2/%\(project_id\)s
    openstack endpoint create --region RegionOne volumev3 public http://myvip:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne volumev3 internal http://myvip:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne volumev3 admin http://myvip:8776/v3/%\(project_id\)s

    ######################################################
    # Install Cinder
    yum install openstack-cinder -y
    yum install nfs-utils -y   # NFS
    cp /etc/cinder/cinder.conf{,.bak}

    # Configure
    echo '
    [DEFAULT]
    osapi_volume_listen = controller1
    osapi_volume_listen_port = 8776
    auth_strategy = keystone
    log_dir = /var/log/cinder
    state_path = /var/lib/cinder
    glance_api_servers = http://myvip:9292
    transport_url = rabbit://openstack:openstack@myvip
    [database]
    connection = mysql+pymysql://cinder:cinder@myvip/cinder
    [keystone_authtoken]
    auth_uri = http://myvip:5000
    auth_url = http://myvip:35357
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = cinder
    password = cinder
    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp
    '>/etc/cinder/cinder.conf

    # nova
    echo '
    [cinder]
    os_region_name = RegionOne
    '>>/etc/nova/nova.conf

    # Initialize the database
    su -s /bin/sh -c "cinder-manage db sync" cinder
    mysql -h controller -u cinder -pcinder -e "use cinder;show tables;"   # check

    # HAProxy high-availability configuration
    echo '
    #Cinder_API_cluster
    listen Cinder_API_cluster
    bind myvip:8776
    #balance source
    option tcpka
    option httpchk
    option tcplog
    server controller1 controller1:8776 check inter 2000 rise 2 fall 5
    server controller2 controller2:8776 check inter 2000 rise 2 fall 5
    server controller3 controller3:8776 check inter 2000 rise 2 fall 5
    '>>/etc/haproxy/haproxy.cfg
    systemctl restart haproxy.service
    netstat -antp|grep haproxy

    # Start the services
    systemctl restart openstack-nova-api.service
    systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
    systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service
    netstat -antp|grep 8776   # check

    ## If the storage node shows up as @lvm and @nfs in the up state, the configuration succeeded
    cinder service-list
    # +------------------+-------------+------+---------+-------+
    # | Binary           | Host        | Zone | Status  | State |
    # +------------------+-------------+------+---------+-------+
    # | cinder-volume    | cinder1@lvm | nova | enabled | up    |
    # | cinder-volume    | cinder1@nfs | nova | enabled | up    |
    # +------------------+-------------+------+---------+-------+
    #openstack volume service list   # check the cinder services
    #systemctl restart openstack-cinder-api openstack-cinder-scheduler
    # cinder-manage service remove <binary> <host>
    # cinder-manage service remove cinder-scheduler cinder1

    # Create volume types and associate them with the volume backends
    # LVM (volume_backend_name must match the name in the configuration file)
    cinder type-create lvm
    cinder type-key lvm set volume_backend_name=lvm01
    # NFS
    cinder type-create nfs
    cinder type-key nfs set volume_backend_name=nfs01
    # check
    cinder extra-specs-list
    #cinder type-list
    #cinder type-delete nfs

    # Create volumes (size in GB)
    openstack volume create --size 1 --type lvm disk01   # LVM type
    openstack volume create --size 1 --type nfs disk02   # NFS type
    openstack volume list

    ######################################################
    # Install and configure Cinder on cluster node controller2
    # Install
    yum install openstack-cinder -y
    yum install nfs-utils -y   # NFS
    cp /etc/cinder/cinder.conf{,.bak}

    # nova
    echo '
    [cinder]
    os_region_name = RegionOne
    '>>/etc/nova/nova.conf

    # Sync the configuration from controller1 and adjust it
    Node=controller2
    rsync -avzP -e 'ssh -p 22' controller1:/etc/cinder/cinder.conf /etc/cinder/
    rsync -avzP -e 'ssh -p 22' controller1:/etc/haproxy/* /etc/haproxy/
    sed -i '1,8s/controller1/'$Node'/' /etc/cinder/cinder.conf

    # Start the services
    systemctl restart openstack-nova-api.service
    systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
    systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service
    netstat -antp|grep 8776   # check

    # Node controller3: same as above
    ######################################################

15. Cinder Volume Node Installation
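
The script in this section restricts LVM to /dev/sdb2 by inserting a `filter` line after line 141 of /etc/lvm/lvm.conf. That line number is only right for one particular packaged version of the file. A minimal sketch of an alternative, assuming GNU sed and a `devices {` line that starts at column 0: anchor on the section header instead, and skip the insert when an uncommented filter already exists. The function name `add_lvm_filter` is made up for this illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide).
# Adds an LVM filter that accepts one device pattern and rejects the rest,
# placed directly after the "devices {" line. Does nothing if an
# uncommented "filter =" line is already present.
# Usage: add_lvm_filter FILE DEVICE_REGEX
add_lvm_filter() {
    local file=$1 dev=$2
    if grep -Eq '^[[:space:]]*filter[[:space:]]*=' "$file"; then
        return 0
    fi
    sed -i "/^devices[[:space:]]*{/a filter = [ \"a/${dev}/\", \"r/.*/\" ]" "$file"
}

# Demonstration on a throwaway file rather than /etc/lvm/lvm.conf.
sample=$(mktemp)
printf 'config {\n}\ndevices {\n    # filter = [ "a|.*|" ]\n}\n' > "$sample"
add_lvm_filter "$sample" sdb2
cat "$sample"
rm -f "$sample"
```

Because the function checks for an existing filter first, running it twice leaves a single filter line, whereas a repeated `sed '141a …'` would add a duplicate each time.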

    # Cinder storage node
    # Install and configure Cinder with LVM and NFS backends
    # Cinder block storage
    # A storage node must be prepared; LVM, NFS, distributed storage, and so on can be used
    # This installation uses LVM and NFS as examples
    ######################################################
    # Basic configuration
    # hostname cinder01
    # hostnamectl set-hostname cinder1
    # Set up /etc/hosts

    ##############
    # Add a disk ……
    # Quick partitioning with fdisk: create two 30G partitions
    # (the keystroke strings were garbled in the source; the ones below are a reconstruction)
    echo -e 'n\np\n1\n\n+30G\nw' | fdisk /dev/sdb
    echo -e 'n\np\n2\n\n+30G\nw' | fdisk /dev/sdb
    # Format /dev/sdb1 (/dev/sdb2 is handed to LVM below).
    # The source omits the command; mkfs.ext4 is assumed because the mount below uses ext4.
    mkfs.ext4 /dev/sdb1
    mkdir -p /date
    mount -t ext4 /dev/sdb1 /date
    df -h|grep /dev/sdb1
    # Mount the disk at boot
    echo 'mount -t ext4 /dev/sdb1 /date' >>/etc/rc.d/rc.local
    tail -1 /etc/rc.d/rc.local
    chmod +x /etc/rc.d/rc.local

    ##############
    # Install and configure LVM as a backend
    yum install -y lvm2
    systemctl enable lvm2-lvmetad.service
    systemctl start lvm2-lvmetad.service
    # Create the LVM physical volume (PV) and volume group (VG)
    pvcreate /dev/sdb2
    vgcreate cinder_lvm01 /dev/sdb2
    vgdisplay   # show the VG

    ##############
    # Install and configure the NFS service as a backend
    yum install nfs-utils rpcbind -y
    mkdir -p /date/{cinder_nfs1,cinder_nfs2}
    chown cinder:cinder /date/cinder_nfs1
    chmod 777 /date/cinder_nfs1
    #echo '/date/cinder_nfs1 *(rw,no_root_squash,sync)'>/etc/exports
    echo '/date/cinder_nfs1 *(rw,root_squash,sync,anonuid=165,anongid=165)'>/etc/exports
    exportfs -r
    systemctl enable rpcbind nfs-server
    systemctl restart rpcbind nfs-server
    showmount -e localhost

    ######################################################
    # Install and configure Cinder
    yum install -y openstack-cinder targetcli python-keystone lvm2
    cp /etc/cinder/cinder.conf{,.bak}
    cp /etc/lvm/lvm.conf{,.bak}

    # Configure the LVM filter so that only the LVM device set up above (/dev/sdb2) is accepted
    # Add filter = [ "a/sdb2/", "r/.*/"] inside the devices { } section
    sed -i '141a filter = [ "a/sdb2/", "r/.*/"]' /etc/lvm/lvm.conf   # insert after line 141

    # NFS
    echo '192.168.58.24:/date/cinder_nfs1'>/etc/cinder/nfs_shares
    chmod 640 /etc/cinder/nfs_shares
    chown root:cinder /etc/cinder/nfs_shares

    # Cinder configuration
    echo '
    [DEFAULT]
    auth_strategy = keystone
    log_dir = /var/log/cinder
    state_path = /var/lib/cinder
    glance_api_servers = http://myvip:9292
    transport_url = rabbit://openstack:openstack@myvip
    enabled_backends = lvm,nfs
    [database]
    connection = mysql+pymysql://cinder:cinder@myvip/cinder
    [keystone_authtoken]
    auth_uri = http://myvip:5000
    auth_url = http://myvip:35357
    memcached_servers = controller01:11211,controller02:11211,controller03:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = cinder
    password = cinder
    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp
    [lvm]
    volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
    iscsi_helper = lioadm
    iscsi_protocol = iscsi
    volume_group = cinder_lvm01
    iscsi_ip_address = 172.27.10.41
    volumes_dir = $state_path/volumes
    volume_backend_name = lvm01
    [nfs]
    volume_driver = cinder.volume.drivers.nfs.NfsDriver
    nfs_shares_config = /etc/cinder/nfs_shares
    nfs_mount_point_base = $state_path/mnt
    volume_backend_name = nfs01
    '>/etc/cinder/cinder.conf
    chmod 640 /etc/cinder/cinder.conf
    chgrp cinder /etc/cinder/cinder.conf

    # Start the Cinder volume service
    systemctl enable openstack-cinder-volume.service target.service
    systemctl start openstack-cinder-volume.service target.service

3、OpenStack Cluster Setup

1. Disable SELinux and the firewall

    systemctl stop firewalld.service
    systemctl disable firewalld.service
    firewall-cmd --state
    sed -i '/^SELINUX=.*/c SELINUX=disabled' /etc/selinux/config
    sed -i 's/^SELINUXTYPE=.*/SELINUXTYPE=disabled/g' /etc/selinux/config
    grep --color=auto '^SELINUX' /etc/selinux/config
    setenforce 0

2. Time synchronization and hostname

Run the following on each node:

    yum install -y ntp
    systemctl enable ntpd && systemctl restart ntpd
    timedatectl set-timezone Asia/Shanghai
    /usr/sbin/ntpdate ntp6.aliyun.com
    echo "*/3 * * * * /usr/sbin/ntpdate ntp6.aliyun.com &> /dev/null" > /tmp/crontab
    crontab /tmp/crontab
    hostnamectl --static set-hostname ops$(ip addr |grep brd |grep global |head -n1 |cut -d '/' -f1 |cut -d '.' -f4)

Add hosts entries:
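
The hosts script in this section appends the `controller` entry only when no line in /etc/hosts already ends with that name, so re-running it does not pile up duplicates. The same guard can be wrapped in a reusable function, sketched below. The name `add_host_entry` and the explicit file argument are assumptions added here so the logic can be tried on a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide).
# Appends "IP NAME [NAME...]" to a hosts file unless some line
# already ends with the last NAME given.
# Usage: add_host_entry FILE IP NAME [NAME...]
add_host_entry() {
    local file=$1 ip=$2
    shift 2
    local names="$*"
    local last="${names##* }"   # last name on the line, e.g. "controller"
    grep -q "[[:space:]]${last}\$" "$file" ||
        printf '%s %s\n' "$ip" "$names" >> "$file"
}

# Demonstration on a scratch file; point FILE at /etc/hosts for real use.
hosts=$(mktemp)
add_host_entry "$hosts" 192.168.0.171 ops171
add_host_entry "$hosts" 192.168.0.173 v.meilele.com controller
add_host_entry "$hosts" 192.168.0.173 v.meilele.com controller   # second call is a no-op
cat "$hosts"
rm -f "$hosts"
```

The match is a plain regular expression on the trailing name, as in the original one-liner, so a name containing regex metacharacters would need escaping first.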

    cat >>/etc/hosts <<EOF
    192.168.0.171 ops171
    192.168.0.172 ops172
    EOF
    [ $(grep -c ' controller$' /etc/hosts) -eq 0 ] && echo "192.168.0.173 v.meilele.com controller" >>/etc/hosts
    tail /etc/hosts

3. yum repository

    echo '
    [centos-openstack-liberty]
    name=CentOS-7 - OpenStack liberty
    baseurl=http://vault.centos.org/centos/7.3.1611/cloud/x86_64/openstack-liberty/
    gpgcheck=0
    enabled=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-Centos-7
    ' >/etc/yum.repos.d/CentOS-OpenStack-liberty.repo
    tail /etc/yum.repos.d/CentOS-OpenStack-liberty.repo
    ###########
    yum install -y qemu-kvm libvirt virt-install
    systemctl enable libvirtd && systemctl restart libvirtd

4. Passwordless SSH authentication

    wget -q http://indoor.meilele.com/download/centos/script/sshkey_tool.sh -O sshkey_tool.sh
    bash sshkey_tool.sh 192.168.0.171 root ess.com1
    bash sshkey_tool.sh 192.168.0.172 root ess.com1

5. HTTP high availability and load balancing: Pacemaker + HAProxy

Install Pacemaker and Corosync on all controller nodes: